Choosing Software Development Solutions for Scale

Most teams don’t “fail to scale” because they picked the wrong framework. They fail because they chose software development solutions that don’t match how the business needs to grow: more users, more products, more regions, more integrations, stricter security, faster delivery, and tighter margins.

Scaling is a systems problem. Your software, cloud, data model, release process, security posture, and team structure all need to work together. This guide walks through a pragmatic way to choose software development solutions for scale, without over-optimizing for hype or under-investing in fundamentals.

What “scale” actually means (so you don’t buy the wrong solution)

“Scale” is not just handling more traffic. In practice, it usually includes some mix of:

- Business scale: adding new product lines, monetization models, geographies, partners, or customer segments.

- Team scale: going from a small dev group to multiple squads shipping in parallel.

- Operational scale: higher uptime expectations, on-call rotations, incident response, compliance audits.

- Delivery scale: shipping faster with fewer regressions, and being able to change safely.

Before comparing vendors or architectures, write down the constraints that define scale for you:

- Expected growth (users, transactions, data volume) over 12 to 36 months

- Availability and recovery targets (SLOs, RTO/RPO)

- Security and compliance requirements (SOC 2, ISO 27001, PCI DSS, GDPR, HIPAA, industry regulators)

- Integration needs (ERP/CRM, payment providers, identity, partner APIs)

- Latency and performance expectations by region

- Budget model (capex vs opex), and what “cost of delay” looks like

If you don’t specify these, you’ll default to choosing what looks modern, or what a vendor demo makes easy, rather than what will keep working at 10x load and 3x team size.
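To make those constraints concrete, it can help to turn them into numbers before talking to vendors. The sketch below is illustrative only: the `AvailabilityTarget` class, the `projected_load` helper, and every input value are assumptions, not figures from a real system.

```python
from dataclasses import dataclass

MINUTES_PER_DAY = 24 * 60


@dataclass(frozen=True)
class AvailabilityTarget:
    """An availability SLO over a rolling window (hypothetical structure)."""

    slo_percent: float      # e.g. 99.9 means "three nines"
    window_days: int = 30   # assumed rolling window

    def allowed_downtime_minutes(self) -> float:
        """Minutes of full outage the SLO tolerates inside the window."""
        total_minutes = self.window_days * MINUTES_PER_DAY
        return total_minutes * (1 - self.slo_percent / 100)


def projected_load(current_rps: float, monthly_growth: float, months: int) -> float:
    """Compound-growth projection of requests per second."""
    return current_rps * (1 + monthly_growth) ** months


if __name__ == "__main__":
    target = AvailabilityTarget(slo_percent=99.9)
    print(f"Downtime budget: {target.allowed_downtime_minutes():.1f} min / 30 days")
    # Assumed inputs: 100 requests/s today, 10% growth per month, 24 months.
    print(f"Projected load: {projected_load(100, 0.10, 24):.0f} rps")
```

With these assumed inputs, a 99.9% target leaves roughly 43 minutes of downtime per 30 days, and 10% monthly growth is close to 10x load within two years. Numbers like these make it easier to ask a vendor a pointed question instead of a general one.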

The 4 categories of software development solutions (and when each scales best)

When leaders say they’re “choosing a software development solution,” they often mix together very different options. A clearer breakdown helps you evaluate tradeoffs.

1) Off-the-shelf SaaS

Best when the workflow is standard and the competitive advantage is not in software.

SaaS can scale operationally with minimal engineering overhead, but it can limit you when:

- Your process is genuinely differentiated

- You need deep integrations or complex data flows

- You have strict residency, audit, or customization requirements

2) Platforms and vertical solutions (industry-specific)

These can scale faster than pure custom builds because they ship core primitives (admin, analytics, payments, compliance modules, etc.) out of the box.

For example, in regulated and high-throughput industries like iGaming, many teams start with a modular platform instead of building every subsystem from scratch. If you’re evaluating that path, an example of a vertical platform is Spinlab’s all-in-one iGaming platform, designed to help operators launch and scale with integrated payments, compliance, and game aggregation.

The key scaling question is not “can it handle traffic?” but “can it evolve with our roadmap without trapping us?”

3) Custom software development (built in-house or with a partner)

Custom is often the right scaling choice when:

- Software is the core differentiator

- You need unique workflows, pricing, risk models, or data products

- You expect high change velocity, and want control of the roadmap

Custom also has the highest responsibility surface area: architecture, delivery process, security, operations, and talent strategy.

4) Hybrid: buy core, build differentiators

This is the most common “scales well in real life” approach.

You might buy:

- Identity (SSO/IAM)

- Billing and payments

- Observability

- CRM/support tooling

And build:

- Your domain model and business logic

- Customer-facing experience

- Differentiated workflows and data products

Hybrid solutions scale well when the integration boundaries are clean and your architecture is designed for change.

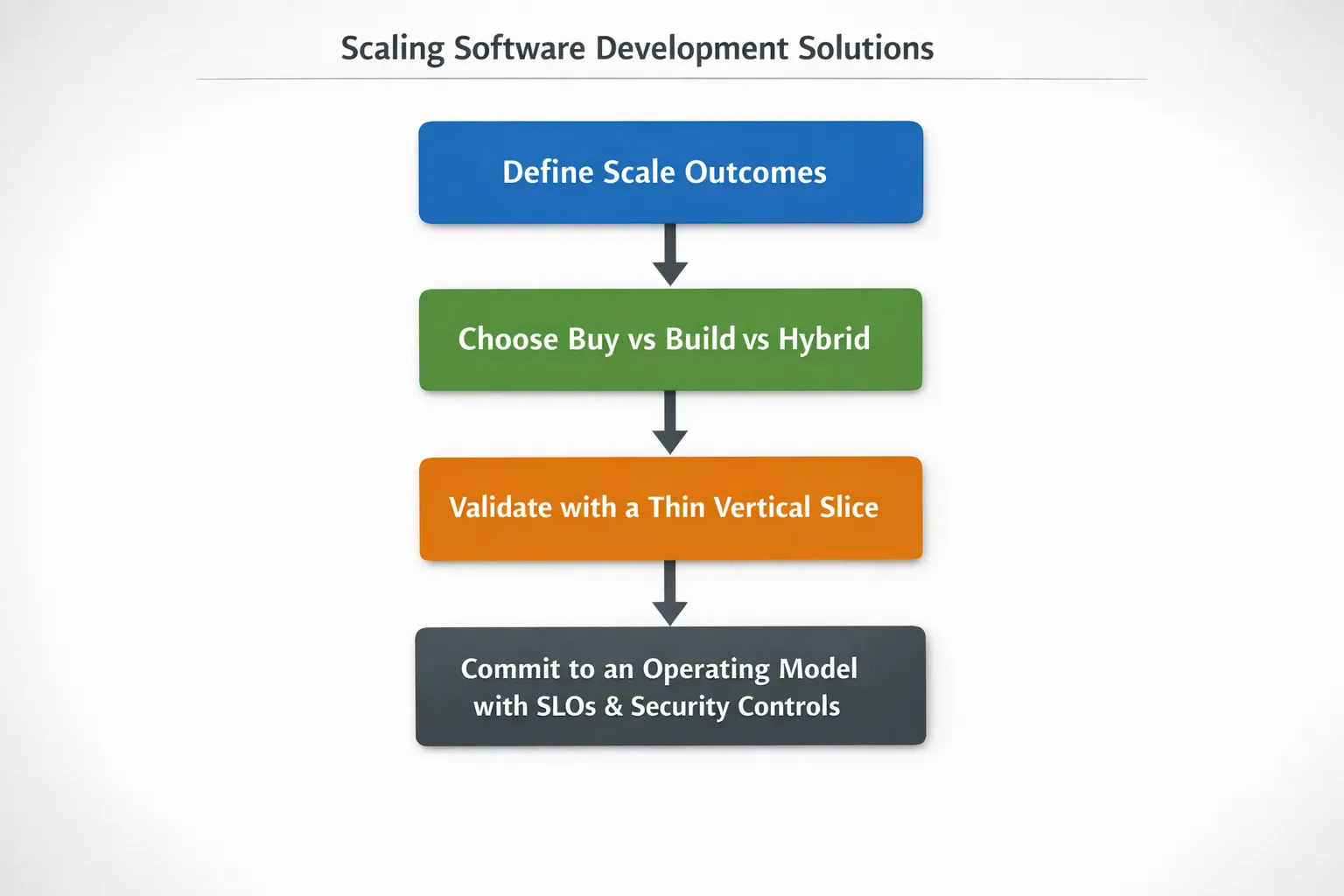

A scaling-first decision framework (beyond tech stack)

To avoid a solution that looks good in a pilot but collapses under growth, evaluate candidates across five dimensions.

1) Scalability of the product, and the organization

Ask:

- Can we add features without rewriting large sections of the system?

- Can multiple teams work without constant merge conflicts and coordination overhead?

- Is there a clear ownership model (services/modules, data domains, on-call)?

This is where “scale” becomes socio-technical: architecture boundaries and team communication paths shape each other (Conway’s law), so they need to be designed together.

2) Reliability and operability (your future on-call reality)

Many solutions claim “high availability,” but your team lives in the details:

- Do you have defined SLOs for critical user journeys?

- Can you do zero-downtime deploys (or at least predictable maintenance windows)?

- Do you have observability (metrics, logs, traces) that can answer “why” during incidents?

- Is there a clear incident response process, and do you practice it?

Google’s DORA research consistently links strong delivery and operational capabilities, measured by deployment frequency, lead time for changes, change failure rate, and time to restore service (MTTR), with better organizational outcomes. These metrics aren’t engineering vanity; they are leading indicators of whether your solution will keep scaling without burning out teams.
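If you already record deployments somewhere, the four metrics can be approximated with very little code. The following is a minimal sketch, assuming a hypothetical `Deployment` record with commit, deploy, and restore timestamps; real pipelines will need to map their own event data onto something like it.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    """One production deployment (hypothetical record shape)."""

    committed_at: datetime                   # first commit in the change
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a degradation?
    restored_at: Optional[datetime] = None   # when service was restored


def _hours(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 3600


def dora_summary(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Approximate the four DORA metrics for a reporting period."""
    if not deploys:
        raise ValueError("need at least one deployment")
    lead_times = [_hours(d.committed_at, d.deployed_at) for d in deploys]
    failures = [d for d in deploys if d.failed]
    restores = [
        _hours(d.deployed_at, d.restored_at)
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deploys_per_week": len(deploys) / (period_days / 7),
        "median_lead_time_hours": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        # Reported as 0.0 when no failed deployment has been restored yet.
        "mean_time_to_restore_hours": sum(restores) / len(restores) if restores else 0.0,
    }
```

The exact definitions (what counts as a failure, where lead time starts) vary between teams, so treat the output as a trend line rather than a benchmark to compare against other companies.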

3) Security and compliance as built-in properties

Scaling usually increases your attack surface: more endpoints, integrations, secrets, roles, and third parties.

A practical baseline is to align with recognized guidance like the NIST Secure Software Development Framework (SSDF), and translate it into day-to-day controls:

- Dependency and supply chain security (SBOMs, signed builds)

- Secrets management and key rotation

- Least-privilege access (human and machine)

- Audit logs and tamper resistance

- Secure defaults in CI/CD

If a vendor or internal proposal cannot clearly explain how security is implemented and evidenced, it will not scale under real regulatory or customer scrutiny.
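As one example of turning “audit logs and tamper resistance” into something you can evidence, here is a small sketch of a hash-chained audit log. It is an illustration under simplifying assumptions: the record fields (`actor`, `action`) and function names are hypothetical, and the chain only makes tampering detectable. It does not prevent tampering, and a production design would also anchor the latest hash somewhere the writer cannot modify.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, entry: dict) -> str:
    """Hash the previous record's hash together with this entry's content."""
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], actor: str, action: str) -> None:
    """Append a record whose hash depends on every record before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"actor": actor, "action": action}
    log.append({**entry, "prev": prev_hash, "hash": _digest(prev_hash, entry)})


def verify(log: list[dict]) -> bool:
    """Recompute the chain; any edited or removed record breaks it."""
    prev_hash = GENESIS
    for record in log:
        entry = {"actor": record["actor"], "action": record["action"]}
        if record["prev"] != prev_hash or record["hash"] != _digest(prev_hash, entry):
            return False
        prev_hash = record["hash"]
    return True
```

The useful question for a vendor or an internal proposal is similar in spirit: if someone with database access edits a log row, how would anyone find out?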

4) Data architecture and integration readiness

Scaling tends to break systems at the seams:

- Reporting and analytics needs expand

- Integrations proliferate

- Data quality becomes a business risk

Evaluate:

- Data ownership: who owns the source of truth for key entities?

- Integration patterns: events, queues, outbox pattern, contract testing

- Migration strategy: how will you evolve schemas without downtime?

If you anticipate heavy analytics, AI, or personalization, treat data flows as first-class architecture, not an afterthought.
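Of the integration patterns listed above, the outbox pattern is the one teams most often ask to see in code. The sketch below uses SQLite from the Python standard library purely for illustration; the table names, the `order.placed` topic, and the `publish` callback are assumptions, and a real relay would publish to your actual broker.

```python
import json
import sqlite3
from typing import Callable


def init_db(conn: sqlite3.Connection) -> None:
    conn.executescript(
        """
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY,
            customer TEXT NOT NULL,
            total_cents INTEGER NOT NULL
        );
        CREATE TABLE outbox (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            topic TEXT NOT NULL,
            payload TEXT NOT NULL,
            published INTEGER NOT NULL DEFAULT 0
        );
        """
    )


def place_order(conn: sqlite3.Connection, customer: str, total_cents: int) -> int:
    """Write the business row and its event in one transaction."""
    with conn:  # commits on success, rolls back if anything raises
        cur = conn.execute(
            "INSERT INTO orders (customer, total_cents) VALUES (?, ?)",
            (customer, total_cents),
        )
        order_id = cur.lastrowid
        event = {"order_id": order_id, "total_cents": total_cents}
        conn.execute(
            "INSERT INTO outbox (topic, payload) VALUES (?, ?)",
            ("order.placed", json.dumps(event)),
        )
    return order_id


def relay_once(conn: sqlite3.Connection, publish: Callable[[str, dict], None]) -> int:
    """Publish pending events in order, marking each one after it is sent.

    Delivery is at-least-once: if the process dies between publish and the
    UPDATE, the event is sent again, so consumers should be idempotent.
    """
    rows = conn.execute(
        "SELECT id, topic, payload FROM outbox WHERE published = 0 ORDER BY id"
    ).fetchall()
    for row_id, topic, payload in rows:
        publish(topic, json.loads(payload))
        with conn:
            conn.execute("UPDATE outbox SET published = 1 WHERE id = ?", (row_id,))
    return len(rows)
```

The point of the pattern is that the order and its event can never disagree: either both are committed or neither is, and the relay catches up whenever the broker is reachable again.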

5) Total cost of ownership (TCO), not just build cost

A “cheap” solution can become expensive when scaling adds:

- Cloud costs from inefficient workloads

- Engineering costs from brittle architecture

- Downtime costs from weak reliability practices

- Opportunity costs from slow delivery

When comparing options, model 3 to 5 year costs across:

- Build and integration

- Ongoing ops (on-call, incident response, maintenance)

- Vendor fees (per seat, per transaction, per integration)

- Compliance and audits

- Cost of change (how much effort to ship a meaningful feature?)
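A spreadsheet works fine for this, but a few lines of code make the assumptions explicit and easy to rerun. The model below is a deliberately simple sketch: the `CostModel` fields mirror the cost lines above, the `vendor_fee_growth` parameter is an assumption about how fees rise with usage, and every number in the example is a made-up placeholder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostModel:
    """Multi-year cost of one option (all fields are illustrative inputs)."""

    name: str
    build_cost: float               # one-time build and integration
    annual_ops: float               # on-call, incident response, maintenance
    annual_vendor_fees: float       # per seat, per transaction, per integration
    annual_compliance: float        # audits and evidence gathering
    annual_change_cost: float       # effort spent shipping meaningful features
    vendor_fee_growth: float = 0.0  # assumed yearly fee increase as usage scales

    def total(self, years: int) -> float:
        """Sum one-time and recurring costs, compounding vendor fees yearly."""
        total = self.build_cost
        fees = self.annual_vendor_fees
        for _ in range(years):
            total += self.annual_ops + fees + self.annual_compliance + self.annual_change_cost
            fees *= 1 + self.vendor_fee_growth
        return total


if __name__ == "__main__":
    # Placeholder figures for two hypothetical options.
    platform = CostModel("platform", 100_000, 50_000, 120_000, 20_000, 80_000, 0.5)
    custom = CostModel("custom", 600_000, 150_000, 10_000, 40_000, 40_000)
    for years in (1, 3, 5):
        print(years, platform.total(years), custom.total(years))
```

With these placeholder inputs the platform option is far cheaper in year one and more expensive by year five, because usage-based fees compound. Your own numbers may point the other way; the value is in seeing where the crossover sits.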

A practical scorecard you can use to compare options

A scorecard keeps selection grounded in outcomes, and makes tradeoffs explicit for stakeholders.

| Dimension | What to measure | Questions that reveal scaling risk |
|---|---|---|
| Change velocity | Lead time, deploy frequency, release safety | How do we ship weekly without heroics? What breaks when we add 3 more teams? |
| Reliability | SLOs, incident rate, MTTR | What happens when a dependency fails? How do we detect and recover? |
| Security & compliance | Controls, audit evidence, SDLC maturity | Can we prove controls to auditors and customers? How are secrets and access handled? |
| Performance & data growth | Latency, throughput, data volume plan | What happens at 10x traffic? How do we scale reads, writes, and reporting? |
| Integration & extensibility | API strategy, contracts, upgrade path | Can we add new partners and channels without rewrites? Are boundaries clear? |
| Talent & maintainability | Hiring feasibility, code health, documentation | Can we hire for this stack? Is the codebase readable, tested, and owned? |
| Commercial/TCO | 3 to 5 year cost model, exit costs | What is the cost to change vendors or replatform? Are we locked in? |

You don’t need perfect numbers on day one. You need enough clarity to avoid a decision you will regret after your first growth spike.
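One lightweight way to use the scorecard is a weighted score per option. The sketch below assumes a 1 to 5 rating per dimension and a set of weights that are purely hypothetical; agree on your own weights with stakeholders before anyone scores a candidate.

```python
# Hypothetical weights per scorecard dimension (higher = matters more).
WEIGHTS: dict[str, int] = {
    "change_velocity": 4,
    "reliability": 4,
    "security_compliance": 3,
    "performance_data_growth": 3,
    "integration_extensibility": 2,
    "talent_maintainability": 2,
    "commercial_tco": 2,
}


def weighted_score(scores: dict[str, int], weights: dict[str, int] = WEIGHTS) -> float:
    """Return the weighted average of 1-5 ratings across all dimensions."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for dimension in weights:
        if not 1 <= scores[dimension] <= 5:
            raise ValueError(f"{dimension} must be rated 1-5")
    total_weight = sum(weights.values())
    return sum(weights[d] * scores[d] for d in weights) / total_weight


def rank(options: dict[str, dict[str, int]]) -> list[tuple[str, float]]:
    """Sort options from highest to lowest weighted score."""
    scored = [(name, weighted_score(ratings)) for name, ratings in options.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

A single number should start the conversation, not end it. If two options land close together, the per-dimension ratings and the disagreements behind them are usually more informative than the total.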

How to run the selection process (so you don’t “decide” and then discover reality)

The fastest way to choose software development solutions for scale is to reduce unknowns early with proof, not promises.

Start with a thin vertical slice

Instead of building a broad prototype, build a small but end-to-end slice that includes:

- One critical user journey

- Real authentication and authorization

- A real database schema (not mock data)

- One external integration

- CI/CD to a production-like environment

- Basic observability and error handling

This flushes out the scaling constraints you cannot see in a slide deck: deployment friction, data modeling issues, integration latency, operational complexity, and security gaps.

Validate operability, not just functionality

During the slice, force realistic operational questions:

- How do we roll back?

- How do we run migrations safely?

- What does an incident look like, and who responds?

- How do we do access reviews and audit logging?

If you can’t answer these at slice stage, scaling will be painful.

Decide the “shape” of the system for the next 12 months

Teams often over-invest in premature microservices. A better scaling move is to pick an architecture that supports change without multiplying complexity.

For many products, a well-structured modular system (clear boundaries, clean interfaces, strong testing, good DevOps) scales further than people expect. Then you split out services when you have proven reasons: independent scaling needs, fault isolation, team autonomy, or regulatory boundaries.

Common scaling traps when choosing solutions

Trap 1: Optimizing for speed of initial build

Speed matters, but the wrong solution can create a long-term tax on every release. If your roadmap requires high iteration, choose what makes change safe.

Trap 2: Confusing “cloud-ready” with “operable”

A solution can run on Kubernetes and still be hard to operate. Operability comes from SLOs, dashboards, alerting discipline, runbooks, and reliable deployment patterns.

Trap 3: Treating security as a phase

Scaling usually means more audits, more partners, and more data. If security is bolted on later, you will re-architect under pressure.

Trap 4: Vendor lock-in by accident

Lock-in is sometimes a rational choice, but it should be explicit. Always ask:

- What is our exit plan?

- Can we export data cleanly?

- Are integrations proprietary?

- What breaks if pricing changes?
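One way to keep an exit plan realistic is to put a thin interface of your own between business logic and each vendor. The following sketch assumes a hypothetical payments vendor; `PaymentGateway`, `VendorAGateway`, and `InMemoryGateway` are illustrative names, and the vendor adapter returns a fake reference instead of calling a real SDK.

```python
from typing import Protocol


class PaymentGateway(Protocol):
    """The only payment surface business logic is allowed to depend on."""

    def charge(self, customer_id: str, amount_cents: int) -> str:
        """Charge the customer and return a provider reference."""
        ...


class VendorAGateway:
    """Adapter for a hypothetical vendor; a real one would call its SDK here."""

    def charge(self, customer_id: str, amount_cents: int) -> str:
        return f"vendor-a:{customer_id}:{amount_cents}"


class InMemoryGateway:
    """Test double that records charges instead of calling a vendor."""

    def __init__(self) -> None:
        self.charges: list[tuple[str, int]] = []

    def charge(self, customer_id: str, amount_cents: int) -> str:
        self.charges.append((customer_id, amount_cents))
        return f"test:{len(self.charges)}"


def checkout(gateway: PaymentGateway, customer_id: str, cart_cents: list[int]) -> str:
    """Business logic knows the interface, never the vendor."""
    total = sum(cart_cents)
    if total <= 0:
        raise ValueError("cart total must be positive")
    return gateway.charge(customer_id, total)
```

This does not remove lock-in, since data and contractual terms still matter, but it keeps the cost of switching confined to one adapter rather than spread across the codebase.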

What “good” looks like at different stages of scale

| Stage | Typical reality | What to put in place now |
|---|---|---|
| Early growth | Small team, fast changes, evolving product | Clear domain model, basic CI/CD, test strategy, sane architecture boundaries |
| Scaling team | Multiple squads, parallel work, more integrations | Ownership model, observability, release safety (feature flags), API governance |
| Enterprise/regulated | Audits, vendor risk, higher uptime | Secure SDLC, audit trails, SLOs, incident response, compliance evidence automation |

If you plan for the next stage without prematurely building for the final stage, you get the best of both: speed now, and fewer rewrites later.

Frequently Asked Questions

What are “software development solutions” in the context of scaling?

Software development solutions can include custom development, SaaS tools, industry platforms, and hybrid approaches (buying commodity capabilities while building differentiated product logic). For scale, the best solution is the one that supports growth in users, features, and teams without exploding operational and maintenance costs.

Is custom software always better for scalability?

Not always. Custom software gives control and differentiation, but it also requires strong delivery, security, and operations capabilities. Many organizations scale faster with a hybrid approach, buying proven components (identity, payments, observability) and building what differentiates them.

When should we move from a monolith to microservices for scale?

When you have clear, measured reasons like independent scaling requirements, fault isolation needs, or team autonomy constraints that cannot be solved with modular boundaries inside a single codebase. Microservices can improve scale, but they also add operational complexity that can slow teams down.

How can we evaluate whether a vendor solution will scale with us?

Ask for evidence: uptime history, security controls and audit artifacts, integration patterns, data export and exit strategy, and real operating requirements (on-call expectations, incident handling, change management). A thin vertical slice integration is often the fastest way to validate claims.

What metrics indicate we are scaling safely?

DORA metrics (lead time, deploy frequency, change failure rate, MTTR) are strong indicators of delivery health. Pair them with SLO attainment for critical user journeys and security metrics (patch cadence, dependency risk, audit findings) to get a balanced view.

Build for growth without betting the company on one big decision

Choosing software development solutions for scale is ultimately about de-risking growth. The goal is not to find a perfect stack or a perfect vendor. It’s to select an approach you can operate, secure, evolve, and staff as the business expands.

If you want a second set of eyes on your options, Wolf-Tech helps teams plan, build, and scale custom software with strong engineering fundamentals, cloud and DevOps expertise, and pragmatic modernization strategies. Explore Wolf-Tech at wolf-tech.io and reach out when you’re ready to validate an architecture, run a thin-slice pilot, or design a scaling roadmap that fits your real constraints.