Top Traits of Web Application Development Companies

Choosing the right web application development company is a high‑stakes decision. The partner you select will influence your speed to market, security posture, user experience, and total cost of ownership for years. This guide distills the traits that consistently show up in high-performing vendors, plus practical ways to verify them during your evaluation.

Top traits of web application development companies

1) Product mindset, not just project delivery

Great vendors align code with business outcomes. They run structured discovery, clarify assumptions, and translate goals into measurable hypotheses. Expect user journey mapping, lightweight prototypes, and a backlog tied to outcomes rather than output.

How to verify: ask to see discovery artifacts from past work, the cadence for usability testing, and how feature ideas are validated or killed.

2) Engineering fundamentals that scale quality

Look for clear coding standards, code review discipline, automated tests across layers, and continuous integration. Mature teams balance speed and safety with trunk based development, small pull requests, and repeatable environments. This reduces regressions and accelerates onboarding.

How to verify: ask for a sample standards document, a description of the test pyramid, and typical cycle times for a small change from commit to production.

3) DevOps maturity and measurable delivery performance

High-performing teams monitor the four key metrics identified by DORA research: lead time for changes, deployment frequency, change failure rate, and time to restore service. Vendors that instrument and improve these tend to release faster and more safely. Google publishes summaries of the findings under its DORA research program.

How to verify: request representative anonymized metrics from recent projects and a description of their rollback strategy and incident drill frequency.
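
When a vendor shares metrics, it helps to know how simply they can be derived from deployment records. The following is a minimal sketch, not a standard implementation: the `Deployment` record and its field names are hypothetical, and it assumes each deployment can be linked to its commit time and, for failed changes, to the time service was restored.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False                    # did this change cause a failure in production?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_summary(deployments: List[Deployment], window_days: int) -> dict:
    """Summarize the four DORA-style metrics over a reporting window."""
    if not deployments or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")

    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    restores = [
        d.restored_at - d.deployed_at
        for d in failures
        if d.restored_at is not None
    ]

    return {
        "deployments_per_day": len(deployments) / window_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore": median(restores) if restores else None,
    }
```

Medians are used here rather than averages so that one unusually slow release does not dominate the picture. Ask vendors which definitions they use, since the numbers are only comparable when the definitions match.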

4) Security by design and secure SDLC

Expect threat modeling, secure coding guidelines, secrets management, dependency scanning with SBOMs, and regular SAST and DAST. Processes aligned to the NIST SSDF (SP 800‑218) and awareness of the OWASP Top 10 are strong signals. For API-heavy systems, familiarity with the OWASP API Security Top 10 matters.

How to verify: ask to see an example security checklist, how critical CVEs are triaged, and the standard patch windows they commit to in maintenance agreements.

5) Right sized architecture with future change in mind

Top vendors choose architectures for your context, not for fashion. That might be a modular monolith with clean boundaries or a service oriented design where needed. They design for change with domain driven thinking, clear module boundaries, feature toggles, and a path to scale. Principles like the Twelve‑Factor App often guide cloud native design.

How to verify: request an example Architecture Decision Record and the nonfunctional requirements they capture early, such as latency budgets, performance targets, and availability goals.

6) APIs and data that are reliable and well governed

Expect rigorous API design, versioning and deprecation policy, idempotency for write operations, pagination and rate limiting, and consistent error models. On the data side, good modeling, migration discipline, backup and restore drills, and privacy by design are critical.

How to verify: ask for API style guides, examples of breaking change avoidance, and evidence of tested disaster recovery runbooks.
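
Idempotency for write operations is easier to evaluate once you have seen the basic pattern. Here is a minimal sketch, assuming the client sends a unique key with each write request. The `PaymentService` class, its method names, and the in-memory dictionary are all hypothetical; a production system would need a durable store with an atomic check-and-set, and would typically also check that a repeated key carries the same payload.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PaymentService:
    """Hypothetical write API that deduplicates requests by idempotency key."""
    _results: Dict[str, dict] = field(default_factory=dict)
    charges_made: int = 0

    def create_charge(self, idempotency_key: str, amount_cents: int) -> dict:
        # A retry with a key we have already processed returns the stored
        # result instead of performing the write a second time.
        if idempotency_key in self._results:
            return self._results[idempotency_key]

        # First time we see this key: perform the write and remember the outcome.
        self.charges_made += 1
        result = {
            "charge_id": f"ch_{self.charges_made}",
            "amount_cents": amount_cents,
        }
        self._results[idempotency_key] = result
        return result
```

With this in place, a client that times out and retries the same request does not charge the customer twice. A good follow-up question for a vendor is how long keys are retained and what happens when two identical requests arrive at the same moment.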

7) UX and accessibility as first order concerns

Accessible, inclusive interfaces expand your market and reduce legal risk. A strong vendor designs and tests against WCAG 2.2 and includes accessibility checks in CI. They use usability testing to validate flows early.

How to verify: ask for accessibility testing tools and processes, sample reports, and how they involve real users in formative tests.

8) Observability and operations readiness

Modern teams ship with logging, metrics, and distributed tracing from day one, often standardizing on OpenTelemetry. They define SLIs and SLOs with error budgets, and wire alerts to symptoms users feel, not just infrastructure noise.

How to verify: request an example of their observability blueprint, log retention policies, and on call escalation playbooks.
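
The relationship between an SLO and its error budget is simple arithmetic, and a short sketch makes it concrete. This example assumes a request-based availability SLI (successful requests divided by total requests); the function name and the returned field names are illustrative, not taken from any particular tool.

```python
def error_budget(slo_target: float, total_requests: int, failed_requests: int) -> dict:
    """Compute error budget figures for a request-based availability SLO.

    slo_target is a fraction strictly between 0 and 1, for example 0.999.
    """
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be strictly between 0 and 1")
    if total_requests <= 0 or not 0 <= failed_requests <= total_requests:
        raise ValueError("request counts are inconsistent")

    # The budget is the number of failures the SLO tolerates in this window.
    allowed_failures = (1 - slo_target) * total_requests
    return {
        "sli": (total_requests - failed_requests) / total_requests,
        "allowed_failures": allowed_failures,
        "budget_consumed": failed_requests / allowed_failures,
        "budget_remaining": allowed_failures - failed_requests,
    }
```

For instance, a 99.9% target over one million requests tolerates roughly 1,000 failed requests in the window, so 250 failures would consume about a quarter of the budget. Teams commonly slow feature releases when the remaining budget approaches zero.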

9) Clear project governance and transparent communication

Expect a cadence of demos, risk reviews, and scope control. Great vendors publish a simple, honest status each week: what shipped, what moved, what is blocked, and what changed. You should see a living RAID log and a single accountable engagement lead.

How to verify: ask for sample weekly status reports and a change management approach that avoids unmanaged scope creep.

10) Documentation and knowledge transfer

Sustainable delivery requires docs that a new engineer can use. You want high signal README files, environment setup scripts, ADRs for key decisions, runbooks, and a structured handover plan.

How to verify: ask to see a documentation table of contents from a past project and the agenda for a typical handoff session.

11) Proven cloud and cost awareness

Cloud done well balances performance and spend. Look for infrastructure as code, environment parity, ephemeral preview environments, and proactive cost monitoring with budgets and alerts. Top vendors forecast expected run costs when proposing architectures.

How to verify: request examples of IaC structure and how they prevent drift and lock down permissions with least privilege.

12) Currency with modern tech and practices

Good partners show a considered point of view on what to adopt or avoid. Referencing credible sources like the Thoughtworks Technology Radar and explaining tradeoffs in plain language is a strong indicator.

How to verify: ask for a short write up on why they would pick the suggested stack for your case and what the escape hatch is if needs change.

Questions and proof to request

| Trait | Smart questions to ask | Proof to request |
|---|---|---|
| Product mindset | How do you validate risky assumptions before building? What would make you stop a feature mid sprint? | Sample discovery artifacts, prototype or storyboard, hypothesis backlog |
| Delivery performance | What are your typical lead time and change failure rate for a small web change? | Anonymized DORA style metrics and a recent deployment timeline |
| Security | How do you handle secrets, SBOMs, and third party vulnerabilities? | Secure SDLC checklist, example SAST or DAST report, CVE triage policy |
| Architecture | How do you capture and communicate key decisions? | One or two ADRs and nonfunctional requirement examples |
| APIs and data | How do you version APIs and plan deprecations? | API style guide, changelog with deprecation notices |
| UX and accessibility | How do you test for WCAG 2.2 issues during development? | Accessibility report excerpt and tooling list |
| Observability | What SLIs and SLOs do you recommend for our app type? | Example dashboards and an incident postmortem template |
| Governance | What will I see in your weekly status update? | Sample status, RAID log snippet, risk heatmap |

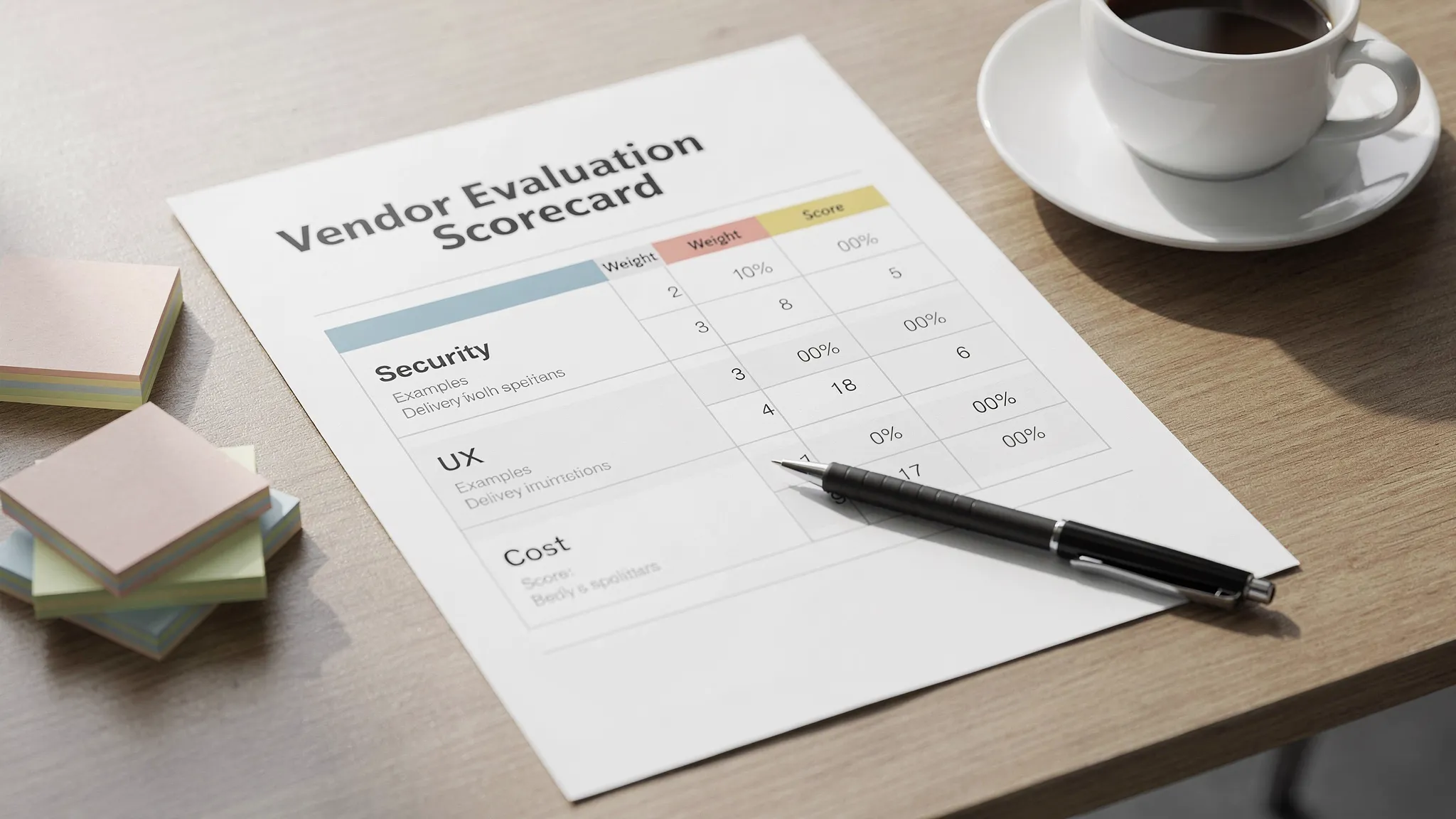

A practical vendor scorecard you can reuse

Use a simple scorecard to compare options side by side. Weight categories for your context and score each vendor from 1 to 5.

| Category | Weight | Notes on what a 5 looks like |
|---|---|---|
| Security and compliance | 20% | Secure SDLC aligned to NIST SSDF, automated testing in CI, rapid CVE response, evidence of supply chain controls |
| Delivery performance | 20% | Demonstrated DORA metrics, trunk based practices, fast rollback and recovery |
| Architecture and scalability | 15% | Context appropriate design, documented decisions, clear NFRs and capacity plan |
| UX and accessibility | 10% | User testing in process, WCAG 2.2 checks, measurable UX outcomes |
| DevOps and cloud | 15% | IaC, environment parity, cost awareness, automated pipelines end to end |
| APIs and data | 10% | Strong API governance, data migrations, backups and drills |
| Governance and communication | 10% | Clear cadence, transparent status, predictable change control |

Total weight should be 100 percent. Tie break with cultural fit, domain experience, and reference feedback.
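
If you track the scorecard in a script rather than a spreadsheet, the calculation is a weighted average. The sketch below uses the example weights from the table above; the function name and the sample vendor scores are hypothetical, and you should adjust the weights to your own context.

```python
# Example weights from the scorecard, in percent. They must sum to 100.
WEIGHTS = {
    "Security and compliance": 20,
    "Delivery performance": 20,
    "Architecture and scalability": 15,
    "UX and accessibility": 10,
    "DevOps and cloud": 15,
    "APIs and data": 10,
    "Governance and communication": 10,
}


def weighted_score(scores: dict, weights: dict = WEIGHTS) -> float:
    """Return a vendor's weighted score on the 1 to 5 scale."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must total 100 percent")
    if set(scores) != set(weights):
        raise ValueError("scores must cover exactly the weighted categories")
    if any(not 1 <= s <= 5 for s in scores.values()):
        raise ValueError("each score must be between 1 and 5")

    return sum(weights[c] * scores[c] for c in weights) / 100


# Hypothetical scores for one vendor.
vendor_a = {
    "Security and compliance": 4,
    "Delivery performance": 5,
    "Architecture and scalability": 3,
    "UX and accessibility": 4,
    "DevOps and cloud": 3,
    "APIs and data": 2,
    "Governance and communication": 5,
}
print(weighted_score(vendor_a))  # prints 3.8
```

Validating the inputs up front catches the most common scorecard mistakes: weights that no longer total 100 after someone edits a category, and a vendor with a missing score.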

Red flags that often predict trouble

- Vague answers about process or security, or an inability to show real artifacts.

- Big up front design with no plan to validate with users during development.

- Custom frameworks without clear documentation or a migration path.

- No shared understanding of nonfunctional requirements such as performance, accessibility, and operability.

- A proposal that ignores ongoing cloud costs, monitoring, or incident response.

- Testing mentioned only at the end, or test coverage framed as a vanity metric without context.

KPIs and SLAs to agree before kickoff

- Delivery KPIs: lead time for changes, deployment frequency, change failure rate, time to restore service.

- Quality KPIs: escaped defect rate, mean time between critical-severity incidents.

- Reliability SLOs: target uptime, error budgets, clear ownership of severity one incidents, and response times.

- Security KPIs: vulnerability remediation windows by severity, dependency update cadence, penetration testing schedule.

- Experience metrics: performance budgets for core user flows and accessibility issue burndown.

Set the initial targets based on your risk appetite and revisit them quarterly as the product evolves.

How Wolf‑Tech aligns with these traits

Wolf‑Tech specializes in full stack development and consulting that map directly to the traits above, with over 18 years of experience across modern stacks and industries.

- Full stack and web application development: custom software and web apps built with a product mindset, from discovery to delivery.

- Code quality consulting: standards, reviews, and automated testing practices that raise engineering quality.

- Legacy code optimization: modernization paths that reduce risk and cost while improving maintainability and performance.

- Tech stack strategy and digital transformation guidance: pragmatic choices grounded in your business goals and constraints.

- Cloud and DevOps expertise: infrastructure and pipelines that speed delivery while keeping reliability and cost in view.

- Database and API solutions: robust data modeling and API design with governance and security baked in.

- Industry specific digital solutions: context aware implementations aligned with your regulatory and customer needs.

If you are evaluating partners now, a short conversation with Wolf‑Tech can help you benchmark options and clarify the right next step for your roadmap.

Putting it all together

Shortlist vendors that can show their work: the artifacts, metrics, and decision records that prove maturity. Use the questions and scorecard above to bring structure to demos and proposals. The best web application development companies make their process legible, their tradeoffs explicit, and their outcomes measurable. That is how you ship faster, safer, and with confidence in 2025.