Custom Web Application Development: From Idea to Launch

Every breakthrough web app starts with a crisp business outcome and a plan to remove risk step by step. The distance from idea to launch can be short if you validate value early, choose a fit‑for‑purpose architecture, and ship a thin slice to production fast, then iterate with real feedback.

This guide distills nearly two decades of custom delivery experience into a practical blueprint you can apply in 2026, whether you are a startup validating a new product or an enterprise modernizing a mission‑critical workflow.

Before you write a line of code

Great launches begin with ruthless clarity. Capture the essentials on one page so every decision ties back to value.

- Problem and audience: Who has the pain, what alternatives exist, and what must be true for them to switch.

- Outcomes and constraints: Business goals, budget guardrails, timeline windows, data residency, privacy, and regulatory boundaries.

- Success metrics: Leading indicators for learning during MVP (activation, task success, time to value), and guardrails for reliability (SLOs, error budgets).
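To make the reliability guardrail concrete, here is a minimal sketch of the arithmetic behind an error budget. The 99.9% target and the 30-day window are illustrative assumptions, not recommendations from this guide, and the function names are hypothetical.

```python
def error_budget_minutes(slo_target: float, window_days: int = 30) -> float:
    """Return the downtime, in minutes, that an availability SLO allows per window."""
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be between 0 and 1, for example 0.999")
    total_minutes = window_days * 24 * 60
    return total_minutes * (1.0 - slo_target)


def budget_remaining(slo_target: float, downtime_minutes: float,
                     window_days: int = 30) -> float:
    """Return the unspent fraction of the error budget (negative when overspent)."""
    budget = error_budget_minutes(slo_target, window_days)
    return (budget - downtime_minutes) / budget
```

With these assumed numbers, a 99.9% target over 30 days allows roughly 43.2 minutes of downtime. A team that has already spent a quarter of that has about 75% of its budget left for risky changes.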

If you are still debating whether to build at all, run a quick decision pass on build versus buy. Here is a pragmatic framework to help you decide: When to Choose Custom Solutions Over Off‑the‑Shelf.

The idea‑to‑launch playbook

Seven stages keep momentum high while steadily reducing risk. Treat each stage as a gate with proofs you must earn, not a box to tick.

Stage 1: Frame outcomes and non‑functional requirements

Translate strategy into engineering signals your team can use.

- One‑page vision, problem statement, business constraints, and the smallest user journey that realizes value.

- Non‑functional requirements, such as performance budgets, availability targets, data classification, privacy, and auditability.

- Integration inventory and risks, including vendor SLAs, rate limits, and data contracts.

Exit proof: The team can explain in plain language what success looks like, how it will be measured, and which constraints cannot be violated.

Stage 2: Prove value and usability

Validate that the solution solves a real problem and that users can succeed quickly.

- Lightweight discovery: 5 to 10 targeted customer interviews and journey mapping.

- Click‑through prototype and usability tests that measure task completion and time to value.

- Scope a Minimum Lovable Product that earns the first cohort of users, not a feature catalog.

Exit proof: Evidence that users will adopt the solution, with the smallest set of features needed for production learning.
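As one way to turn usability sessions into evidence, the sketch below computes task completion rate and median time to value. The `Session` record and its field names are hypothetical; adapt them to whatever your test notes actually capture.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Session:
    participant: str
    completed: bool
    seconds_to_value: Optional[float]  # None when the task was not completed


def summarize(sessions: list) -> dict:
    """Summarize usability sessions as completion rate and median time to value."""
    if not sessions:
        raise ValueError("at least one session is required")
    completed = [s for s in sessions if s.completed]
    times = [s.seconds_to_value for s in completed if s.seconds_to_value is not None]
    return {
        "completion_rate": len(completed) / len(sessions),
        "median_seconds_to_value": median(times) if times else None,
    }
```

With only 5 to 10 participants these numbers are directional, so read them alongside the qualitative notes rather than as statistics.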

Stage 3: Choose architecture and tech stack

Pick a baseline that is flexible now and evolvable later.

- Default to a modular monolith for most MVPs. You preserve simplicity while leaving room to extract services later if needed.

- Decide rendering and data strategies per capability, for example server‑rendered pages for transactional flows and incremental static regeneration (ISR) or static generation for marketing and docs.

- Model data and define API boundaries that mirror business capabilities.

For a structured selection process that weighs trade‑offs against outcomes, use this guide: How to Choose the Right Tech Stack in 2025.

Exit proof: Architectural decision record, a thin end‑to‑end design for the first slice, and agreement on what good looks like for security, performance, and observability.
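One way to keep a modular monolith modular is to check boundaries automatically. The sketch below assumes a hypothetical convention in which each business module (for example `billing` or `orders`) is a top-level Python package that exposes its public interface through an `api` submodule. It flags imports that reach into another module's internals; the convention and all names are assumptions for illustration.

```python
import ast


def boundary_violations(source: str, owner: str, modules: set) -> list:
    """Return imports in `source` that bypass another module's `api` package.

    `owner` is the module the source file belongs to; `modules` lists all
    business modules in the monolith.
    """
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # Expand `from a.b import c` to the dotted name `a.b.c`.
            names = [f"{node.module}.{alias.name}" for alias in node.names]
        else:
            continue  # not an absolute import
        for name in names:
            parts = name.split(".")
            crosses_boundary = parts[0] in modules and parts[0] != owner
            uses_public_api = len(parts) > 1 and parts[1] == "api"
            # A bare `import billing` is also flagged, to keep the rule strict.
            if crosses_boundary and not uses_public_api:
                violations.append(name)
    return violations
```

Run as a unit test over every source file, a check like this fails the build when a boundary erodes, which keeps later service extraction cheap.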

Stage 4: Plan delivery and seed the team

Set the paved path before sprinting.

- Sprint zero deliverables: versioned repo, trunk‑based development, CI pipeline, preview environments, basic monitoring, and error tracking.

- Definition of Ready and Definition of Done backed by automated checks: unit tests, contract tests, and accessibility checks.

- A story map turned into a sequenced backlog that delivers a vertical slice first, then expands capabilities.

Exit proof: The team can ship to a non‑production environment on every merge, with tests and basic telemetry in place.
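To illustrate the contract tests mentioned in this stage, here is a minimal consumer-side sketch. It checks that a provider's response still carries the fields and types the consumer depends on. The `ORDER_CONTRACT` shape is a made-up example, and real projects often use a dedicated contract-testing or schema-validation tool instead.

```python
# Hypothetical contract: the fields this consumer reads, with expected types.
ORDER_CONTRACT = {"id": str, "status": str, "total_cents": int}


def contract_errors(payload: dict, contract: dict) -> list:
    """Return human-readable contract violations; an empty list means compatible.

    Extra fields in the payload are tolerated so the provider can add data
    without breaking existing consumers.
    """
    errors = []
    for field, expected in contract.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected):
            actual = type(payload[field]).__name__
            errors.append(f"{field}: expected {expected.__name__}, got {actual}")
    return errors
```

In CI, a test would call the provider's preview environment (or a recorded response) and assert that `contract_errors` returns an empty list.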

Stage 5: Build and ship the first vertical slice

Deliver one narrow user journey end to end in production.

- Implement the golden path with authentication, authorization, logging, and audit trails.

- Observe real behavior with product analytics and correlated traces, and use the data to refine the backlog.

- Set initial SLOs for the slice (availability, latency, and error rate) and assign on‑call ownership.

Exit proof: A real user can complete the core task in production, and you can measure performance and reliability of that flow.
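The audit trail and trace correlation for the golden path can start very small. The sketch below emits one structured audit record per action and tags it with a request ID, so logs, traces, and analytics events can be joined later. The field names are assumptions, not a standard.

```python
import json
import uuid
from datetime import datetime, timezone


def new_request_id() -> str:
    """Create an ID to attach to every log line and audit event of one request."""
    return uuid.uuid4().hex


def audit_event(request_id: str, actor: str, action: str,
                resource: str, outcome: str) -> str:
    """Serialize one audit record as a JSON line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "request_id": request_id,
        "actor": actor,
        "action": action,
        "resource": resource,
        "outcome": outcome,
    }
    return json.dumps(record, sort_keys=True)
```

A handler would generate the ID once at the edge, pass it through the call chain, and write each returned line to an append-only sink.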

Stage 6: Expand to MVP

Add only what your first cohort needs to realize recurring value.

- Iterative delivery with feature flags, canary releases, and frequent small merges.

- Hardening appropriate to expected load: caching strategy, rate limiting, back‑pressure, and cost visibility.

- Security tasks shifted left: secrets management, dependency and container scanning, and threat modeling of new capabilities.

Exit proof: The MVP satisfies defined outcomes for a limited audience under expected load with documented runbooks and rollback paths.
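Feature flags and canary releases both rely on exposing a change to a stable subset of users. A common approach, sketched here under the assumption that users have stable string IDs, is deterministic hash bucketing. The function name and the flag-key format are hypothetical, and a managed flag service would normally replace this.

```python
import hashlib


def in_rollout(flag: str, user_id: str, percent: int) -> bool:
    """Return True when the user falls inside the flag's rollout percentage.

    The same user always lands in the same bucket for a given flag, so
    raising `percent` only adds users and never removes them.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return bucket < percent
```

Including the flag name in the hash keeps buckets independent across flags, so the same early users are not always the ones who receive every experimental change.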

Stage 7: Launch readiness and go‑live

Treat launch as an engineering event and a change management event.

- Operational readiness: runbooks, incident playbooks, SLO reviews, chaos drills for failure modes, and capacity and cost checks.

- Data migration and cutover plan with dry runs, checkpoints, and success and rollback criteria.

- Privacy and compliance evidence organized for audit: access reviews, data retention policies, and vendor risk assessments.

If regulatory work is on your critical path, an AI‑powered compliance management platform can accelerate regulatory watch, control mapping, and evidence collection while your team builds product value.

Exit proof: Green light on launch gates, including performance, security, compliance, support readiness, and stakeholder sign‑off.
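For the data migration dry runs in this stage, a checkpoint can be as simple as reconciling the source and target after each run. The sketch below compares row counts and an order-independent content fingerprint, then returns a proceed or rollback decision. It assumes rows fit in memory as tuples, which holds for a dry run on a sample but not for very large tables.

```python
import hashlib


def table_fingerprint(rows) -> tuple:
    """Return (row_count, digest) for the rows, independent of row order."""
    digests = sorted(
        hashlib.sha256(repr(row).encode("utf-8")).hexdigest() for row in rows
    )
    combined = hashlib.sha256("".join(digests).encode("utf-8")).hexdigest()
    return len(digests), combined


def cutover_check(source_rows, target_rows) -> str:
    """Apply the success and rollback criteria for one migration checkpoint."""
    source_count, source_digest = table_fingerprint(source_rows)
    target_count, target_digest = table_fingerprint(target_rows)
    if source_count != target_count:
        return "rollback: row counts differ"
    if source_digest != target_digest:
        return "rollback: row contents differ"
    return "proceed"
```

Writing the criteria as code before the first dry run makes the go or no-go decision on launch day mechanical rather than a judgment call under pressure.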

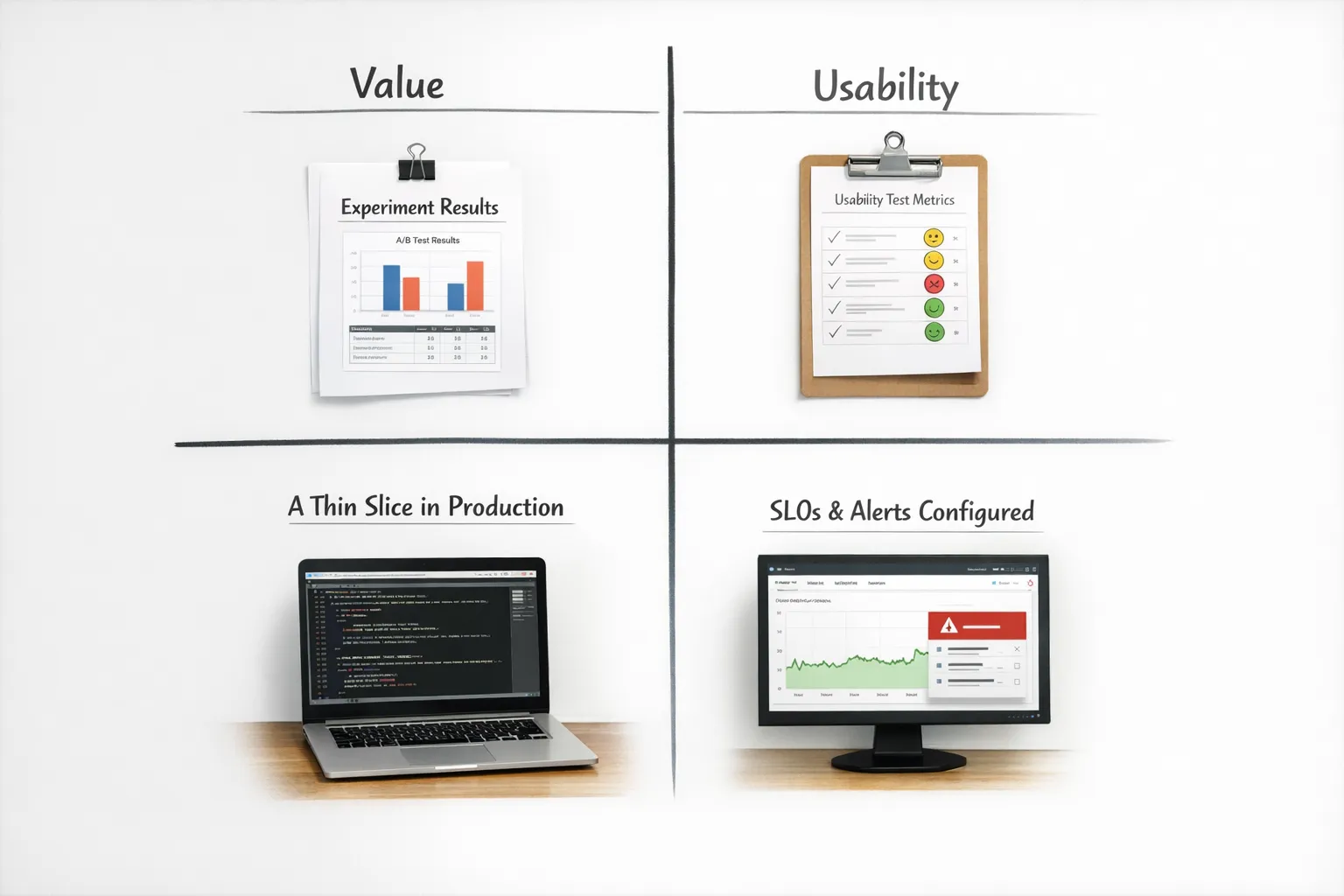

The four proofs that de‑risk delivery

Use these recurring proofs to decide what to build next and when to stop.

- Value proof: people want it enough to use it or pay for it.

- Usability proof: users can complete the task quickly and confidently.

- Feasibility proof: the design is technically and operationally achievable within constraints.

- Operability proof: you can run it reliably, measure it, and recover quickly when it fails.

Earn at least a small version of all four proofs before scaling.

Deliverables and gates at a glance

| Stage | Key deliverables | Proof you need | Gate to pass |
|---|---|---|---|
| 1. Frame | One‑page vision, NFRs, constraints, integration inventory | Shared understanding of value and boundaries | Executive and team alignment |
| 2. Validate | Interviews, prototype tests, MLP scope | Evidence of adoption, clear smallest viable scope | Product sign‑off on MLP |
| 3. Architect | ADRs, data model, API boundaries | Thin end‑to‑end design that meets NFRs | Architecture review |
| 4. Seed | Repo, CI, preview envs, DoR and DoD | Ship on every merge with basic telemetry | Dev readiness gate |
| 5. Slice | Golden path in prod, analytics, SLOs | End‑to‑end user success with observability | Slice release review |
| 6. MVP | Feature flags, hardening, runbooks | MVP outcomes met under expected load | Launch readiness review |
| 7. Launch | Cutover plan, comms, support | All launch gates green with a rollback path | Go‑live approval |

Compliance and security by default

Treat privacy and security as product features. Doing so avoids expensive rewrites and audit surprises.

- Use a security verification baseline like OWASP ASVS to guide requirements for authentication, session management, cryptography, logging, and more.

- Keep an audit trail of key decisions and changes, including architecture decisions, access approvals, and policy updates.

- Shift left on controls. Codify policies in CI where possible, for example dependency and container scanning, linting for secrets, and policy as code for infrastructure.

For day‑one security and privacy work, create a short checklist: data classification, retention policy, access model, audit log coverage, secrets management, and vendor due diligence.
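As an illustration of linting for secrets in CI, the sketch below scans text for a few well-known credential shapes. The pattern list is a small, assumed sample rather than a complete rule set; dedicated secret scanners cover far more formats and should be preferred in practice.

```python
import re

# Illustrative patterns only; real scanners ship much larger rule sets.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]{4,}['\"]"),
}


def scan_text(text: str) -> list:
    """Return (line_number, pattern_name) for every suspected secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```

A CI step would run this over the changed files and fail the build when the list is non-empty, which catches a leaked credential before it reaches the main branch.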

Team shape and collaboration

Small, senior, cross‑functional teams ship faster and with fewer surprises.

- Roles: a product manager to own outcomes and scope, a tech lead to own architecture and quality, a designer to own flows and accessibility, plus full‑stack developers, QA, and cloud and DevOps engineers.

- Operating rhythm: daily standup for flow, weekly risk review and demo for feedback, monthly outcomes review against metrics.

- Decision hygiene: short written proposals for non‑trivial choices and ADRs to preserve context.

Timeline patterns you can expect

Every build is unique, but patterns repeat. Scope and uncertainty drive duration more than lines of code.

| Scope | Typical focus | Indicative duration |
|---|---|---|
| Small MVP, single core journey | 1 or 2 integrations, moderate auth, basic analytics | 6 to 10 weeks |
| Medium MVP, multiple roles | 3 to 5 integrations, workflows, reporting | 12 to 16 weeks |
| Complex or heavily regulated | High assurance security, audit, migrations | 20 to 28 weeks |

These ranges assume a staffed senior team, continuous delivery, and a disciplined scope. Long‑tail integrations, data migrations, and regulatory reviews often dominate the schedule, so plan for them early.

Common pitfalls and how to avoid them

Teams rarely fail because of one big mistake; they drift because of many small ones. Here are the most common ones and how to avoid them.

- Building for edge cases before the happy path works in production.

- Choosing microservices prematurely. Start modular and extract only when scale or independence demands it.

- Deferring observability. You cannot improve what you cannot see.

- Neglecting data modeling. Late changes ripple across APIs, jobs, and reports.

- Treating compliance as a final gate. Begin classification, logging, and access reviews in sprint one.

- One‑time testing. Rely on automation and continuous verification, not heroic hardening weeks.

- Skipping runbooks and on‑call. Launch day should not be the first time you think about recovery.

- Over‑indexing on feature velocity without a clear outcome metric. Measure value, not just output.

Metrics that matter at launch

Pick a small set that tells you if users are getting value and if the system is reliable and changeable.

- Adoption and activation: new accounts or users completing the first key task, and time to value.

- Retention and engagement: weekly or monthly active users, task frequency, and cohort trends.

- Reliability: SLOs for latency and availability, error rate, and incident metrics such as mean time to detect and mean time to restore.

- Change velocity and stability: lead time for changes and change failure rate, two of the DORA metrics for delivery flow.

For day‑two operations, bring product metrics and engineering metrics into the same weekly review so trade‑offs are explicit.
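The change velocity and stability metrics above are simple to compute once deployments are recorded. The following sketch assumes a hypothetical `Deployment` record with a commit time, a deploy time, and a flag for whether the change caused a production failure; how you attribute failures to deployments is a team decision not covered here.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    caused_failure: bool


def median_lead_time_hours(deployments: list) -> float:
    """Median time from commit to production deploy, in hours."""
    hours = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600
        for d in deployments
    ]
    return median(hours)


def change_failure_rate(deployments: list) -> float:
    """Share of deployments that led to a failure in production."""
    failures = sum(1 for d in deployments if d.caused_failure)
    return failures / len(deployments)
```

Both functions expect at least one deployment. Tracking the median rather than the mean keeps one slow release from hiding an otherwise healthy flow.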

Your accelerated path with Wolf‑Tech

If you want a partner that ships working software while keeping quality, security, and compliance front and center, Wolf‑Tech can help. Our team brings full‑stack development, code quality consulting, legacy optimization, web application and custom software development, tech stack strategy, digital transformation guidance, cloud and DevOps expertise, and robust database and API solutions across industries.

A practical next step is to align on outcomes and get a thin vertical slice into production quickly. From there we iterate together using the four proofs to guide investment and scope.

For a companion checklist that pairs well with this playbook, see the Build a Web Application: Step‑by‑Step Checklist.

Frequently Asked Questions

How long does custom web application development take? It depends on scope and uncertainty. Small MVPs with a single core journey often ship in 6 to 10 weeks with a senior team, while complex or highly regulated builds can take several months. Integrations, data migrations, and compliance tend to drive timelines more than pure feature work.

What is the smallest viable scope for a successful launch? Focus on one end‑to‑end user journey that delivers clear value, then add only what eliminates adoption friction for early users. Measure activation and time to value, not feature counts.

Do I need microservices to scale? No. Start with a modular monolith that enforces boundaries in code and tests. Extract services only when there is a concrete need for independent scaling, deployment, or team autonomy.

How do we handle compliance without slowing delivery? Start early with data classification, access controls, logging, and policy as code. Keep a living evidence folder that updates as you ship. When regulatory scope is heavy or fast changing, an AI‑powered compliance management platform can help streamline regulatory watch and evidence collection while the product team focuses on features.

What are the biggest cost drivers to watch? Third‑party integrations, specialist assurance work, data migrations, and environments and infrastructure often dominate cost. Up‑front architecture choices, good observability, and early automation reduce long‑term spend.

Ready to turn your idea into a production‑grade web app with less risk and more momentum? Start a conversation with Wolf‑Tech at wolf-tech.io. We will help you frame outcomes, choose a pragmatic architecture, and deliver a working slice to real users fast, then scale with confidence.