Developing Solutions: How to Validate Value Before Coding

Shipping the wrong thing is one of the most expensive ways to “go fast.” You burn engineering time, accumulate maintenance cost, and often end up with a product that users politely ignore.

A widely cited analysis by CB Insights found that the top reason startups fail is “no market need” (42%). The pattern is similar for established companies: teams build features, not outcomes, and only learn the truth after release. Source: CB Insights, The Top Reasons Startups Fail.

This guide is about developing solutions the smarter way: validating value before you code, so engineering effort is spent on changes that have a defendable business upside.

What “validate value” means (and what it does not)

Validating value is not the same as validating that:

- A stakeholder likes the idea

- A prototype looks modern

- A feature is technically possible

Value is validated when you have evidence that a specific user segment will change behavior in a way that produces a measurable outcome. Depending on your business, that outcome might be revenue, retention, time saved, risk reduced, conversion lift, fewer support tickets, or compliance automation.

A useful mental model is to separate four proofs:

- Value: does it solve a real, prioritized problem?

- Usability: can users successfully use it?

- Feasibility: can we build and operate it with our constraints?

- Viability: does it work for the business (pricing, margins, legal, brand)?

Before writing production code, you can often validate value and viability with surprisingly high confidence.

Start with a value hypothesis (not a feature list)

Most waste begins with feature-first thinking. Replace it with a value hypothesis that is easy to test.

A practical template:

For (target segment) who (have a painful, frequent problem) we believe that (solution approach) will (measurable outcome) because (reason / insight).

Example (B2B ops):

For warehouse supervisors who lose 30 to 60 minutes per shift reconciling inventory discrepancies, we believe that guided discrepancy resolution (photo + reason codes + approvals) will cut reconciliation time by 50% within 30 days because most exceptions follow 5 recurring patterns and currently require back-and-forth messages.

This format forces clarity on:

- Who it is for (not “everyone”)

- What pain you are solving (not “we need a dashboard”)

- How you will measure success
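The template can also be kept as a small structured record, so that an empty slot is obvious before anyone starts debating features. The Python sketch below is only an illustration: the `ValueHypothesis` class, its field names, and the `missing_slots` helper are assumptions made for this example, not part of any established tool.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ValueHypothesis:
    """One field per slot in the template (names are illustrative)."""

    segment: str   # who it is for
    problem: str   # the painful, frequent problem
    approach: str  # the solution approach
    outcome: str   # the measurable outcome
    insight: str   # the reason we believe it will work

    def missing_slots(self) -> list[str]:
        """Return the names of slots that were left blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def render(self) -> str:
        """Fill the sentence template with the five slots."""
        return (
            f"For {self.segment} who {self.problem}, we believe that "
            f"{self.approach} will {self.outcome} because {self.insight}."
        )


# The warehouse example from the text, split into its slots.
hypothesis = ValueHypothesis(
    segment="warehouse supervisors",
    problem="lose 30 to 60 minutes per shift reconciling inventory discrepancies",
    approach="guided discrepancy resolution (photo + reason codes + approvals)",
    outcome="cut reconciliation time by 50% within 30 days",
    insight=(
        "most exceptions follow 5 recurring patterns and currently "
        "require back-and-forth messages"
    ),
)

print(hypothesis.missing_slots())
print(hypothesis.render())
```

If `missing_slots()` returns anything, the hypothesis is not ready to test yet. A blank `outcome` in particular usually means the idea is still a feature request.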

Map assumptions, then attack the riskiest one first

Before you build, list what must be true for the solution to succeed. Typical assumptions:

- Users experience the problem often enough to care

- Your proposed approach is preferable to the workaround they already use

- You can reach users and get the solution adopted (distribution)

- Buyers will pay (or internal stakeholders will fund it)

- The organization can support it (sales, onboarding, compliance, ops)

Then pick the assumption that is:

- High impact if false

- High uncertainty today

That is your first validation target.
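One simple way to make that choice explicit is to score each assumption and sort. The sketch below assumes a 1 to 5 scale for both dimensions and multiplies them. The scale, the multiplication, and the example scores are illustrative assumptions, not a standard method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assumption:
    statement: str
    impact_if_false: int  # 1 (minor) to 5 (fatal), the team's own estimate
    uncertainty: int      # 1 (well evidenced) to 5 (pure guess)

    @property
    def risk(self) -> int:
        # Multiplying favors assumptions that are both high impact and uncertain.
        return self.impact_if_false * self.uncertainty


def riskiest_first(assumptions: list[Assumption]) -> list[Assumption]:
    """Order assumptions from highest to lowest risk score."""
    return sorted(assumptions, key=lambda a: a.risk, reverse=True)


# Hypothetical scores, for illustration only.
backlog = [
    Assumption("Users hit the problem often enough to care", 5, 2),
    Assumption("Our approach beats the current workaround", 4, 4),
    Assumption("Buyers will pay for it", 5, 4),
    Assumption("The organization can support it", 3, 2),
]

first_target = riskiest_first(backlog)[0]
print(first_target.statement)
```

The scores matter less than the conversation they force. If the team cannot agree on whether an assumption is a 2 or a 5 for uncertainty, that disagreement is itself a signal to test it early.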

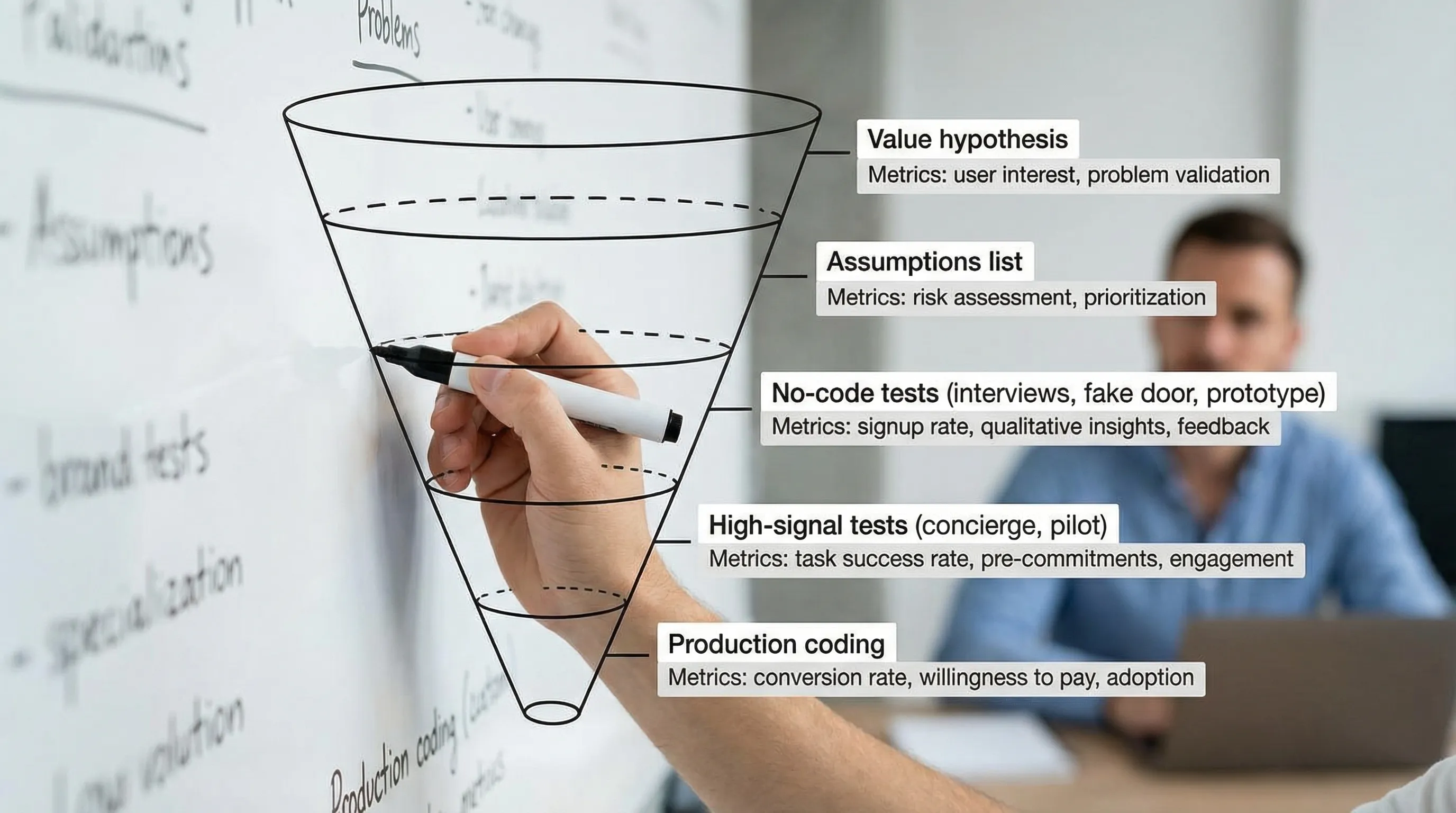

Validation methods you can use before coding

You do not need a single “perfect” method. You need fast evidence that reduces uncertainty.

Here is a practical menu you can combine.

1) Customer interviews that focus on past behavior

Interviews are valuable when they uncover what people already do, not what they claim they might do.

Strong prompts:

- “Walk me through the last time this happened.”

- “What did you do instead?”

- “What did it cost you (time, money, risk)?”

- “What have you tried already?”

Weak prompts:

- “Would you use an app that…”

- “Do you like this feature?”

If you want a structured approach, the Jobs To Be Done lens can help you focus on outcomes and switching triggers.

2) Problem ranking and willingness-to-change

After interviews, ask users to rank problems and trade-offs:

- “If we could fix only one thing this quarter, what should it be?”

- “What would you stop doing if this existed?”

- “What would you trust this system to do automatically, and what must stay manual?”

What you are looking for is not excitement. It is priority under constraint.

3) “Fake door” tests (demand without building)

A fake door test is when you present the option (button, menu item, pricing page, “request access”) and measure interest before implementation.

Common forms:

- Landing page with a clear promise + call to action

- In-product CTA (if you already have a product)

- Waitlist or “book a demo” flow

You measure:

- Click-through

- Signup rate

- Demo requests

- Quality of leads (fit, budget, urgency)

Ethics matter here: be transparent that it is early access or a pilot.
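To read the results, it helps to compute each step of the funnel separately, so that a high click-through does not hide a weak rate of qualified leads. The following is a minimal sketch. The function name, the four counters, and the sample numbers are assumptions made for illustration.

```python
def funnel_rates(visitors: int, clicks: int, signups: int, qualified: int) -> dict[str, float]:
    """Return step-by-step conversion rates for a fake door funnel."""

    def rate(numerator: int, denominator: int) -> float:
        # Guard against empty steps so an idle test reports 0.0 instead of crashing.
        return numerator / denominator if denominator else 0.0

    return {
        "click_through": rate(clicks, visitors),          # saw the door, clicked it
        "signup_rate": rate(signups, clicks),             # clicked, then signed up
        "qualified_share": rate(qualified, signups),      # signed up and fits the segment
        "qualified_per_visitor": rate(qualified, visitors),
    }


# Hypothetical numbers, for illustration only.
rates = funnel_rates(visitors=1200, clicks=180, signups=45, qualified=18)
for name, value in rates.items():
    print(f"{name}: {value:.1%}")
```

The last rate, qualified leads per visitor, is usually the one to compare against your decision rule, because it combines interest with fit.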

4) Concierge MVP (deliver the value manually)

A concierge MVP delivers the outcome without automation. You provide the result with spreadsheets, human ops, or internal tooling.

This is one of the best ways to validate value in B2B because it exposes:

- Real workflow friction

- Hidden stakeholders (approvers, compliance, finance)

- Data requirements you did not know existed

If users will not consistently show up for the manual version, automation will not save the concept.

5) Wizard-of-Oz (the UI looks real, the backend is not)

In a Wizard-of-Oz test, users experience a realistic flow, but the “intelligence” is behind the curtain.

This is useful for validating:

- The promise (value)

- The interaction (usability)

- The minimum data needed to deliver results

It also helps you avoid overbuilding “AI” features before you know which decisions users will delegate.

6) Prototype usability tests (Figma-level is often enough)

You can validate usability and comprehension with clickable prototypes, especially for:

- Onboarding

- Complex forms

- Multi-step workflows

- Permissioned admin areas

Nielsen Norman Group (NN/g) maintains a research library that is a strong reference for usability testing practices.

The key is to test realistic tasks, not “opinions.”

7) Pre-sales and LOIs (the strongest viability signal)

For B2B, one of the highest-signal validations is getting someone to commit:

- Paid pilot

- Letter of intent (LOI)

- Budget earmarked for a specific outcome

- Security review kickoff

You are not trying to maximize revenue here. You are validating that the pain is real enough for a buyer to act.

8) ROI sketch with a “kill threshold”

A lightweight ROI model can be done before coding. It is not about precision. It is about decision-making.

Typical inputs:

- Time saved per user per week

- Error reduction rate

- Conversion lift

- Reduced support volume

- Risk reduction (fewer incidents, compliance automation)

Then define a kill threshold. Example: “If we cannot credibly show at least $250k annualized impact within 90 days of launch, we do not build phase 2.”

If you want a deeper approach to ROI thinking, Wolf-Tech also covers budgeting and ROI drivers in Custom Software Development: Cost, Timeline, ROI.
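An ROI sketch with a kill threshold fits in a few lines. The version below is a rough illustration that uses only the time-saved input. The `RoiSketch` class, the 48 working weeks, the `credibility` discount, and every sample number are assumptions. The $250k threshold is taken from the example rule above, and the 90-day window is not modeled.

```python
from dataclasses import dataclass, replace

KILL_THRESHOLD_USD = 250_000  # from the example rule in the text


@dataclass(frozen=True)
class RoiSketch:
    users: int
    hours_saved_per_user_per_week: float
    loaded_hourly_cost_usd: float
    working_weeks_per_year: int = 48  # assumption
    credibility: float = 1.0          # 0..1 discount for how well evidenced the inputs are

    def annualized_impact_usd(self) -> float:
        gross = (
            self.users
            * self.hours_saved_per_user_per_week
            * self.loaded_hourly_cost_usd
            * self.working_weeks_per_year
        )
        return gross * self.credibility


def phase_two_decision(sketch: RoiSketch, kill_threshold_usd: float = KILL_THRESHOLD_USD) -> str:
    """Apply the kill threshold to the discounted annualized impact."""
    if sketch.annualized_impact_usd() >= kill_threshold_usd:
        return "build phase 2"
    return "do not build phase 2"


# Hypothetical inputs, for illustration only.
base_case = RoiSketch(
    users=120,
    hours_saved_per_user_per_week=2.5,
    loaded_hourly_cost_usd=45.0,
    credibility=0.5,
)
cautious_case = replace(base_case, credibility=0.3)

print(phase_two_decision(base_case))
print(phase_two_decision(cautious_case))
```

Running the same inputs at two credibility levels shows how sensitive the decision is to evidence quality. If the answer flips, the next validation step should target the weakest input rather than the model.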

A comparison table: validation options by speed and strength

Use this to pick the smallest test that answers your highest-risk question.

| Method | What it validates best | Speed | Cost | Evidence strength |
|---|---|---|---|---|
| Customer interviews (past behavior) | Problem reality, context, alternatives | Fast | Low | Medium |
| Fake door (landing page or in-product CTA) | Demand, messaging, top-of-funnel | Fast | Low | Medium |
| Prototype usability test | Comprehension, workflow usability | Fast | Low | Medium |
| Concierge MVP | Value in real workflows, adoption friction | Medium | Medium | High |
| Wizard-of-Oz | Value + usability with realistic flow | Medium | Medium | High |
| Pre-sales / paid pilot | Viability, urgency, budget | Medium | Low to Medium | Very high |
| ROI sketch with kill threshold | Economic case, decision clarity | Fast | Low | Medium |
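The table can also be treated as data and filtered by the risk you want to retire. The sketch below is one possible reading, not a definitive mapping. The risk tags (`problem`, `demand`, `usability`, `value`, `viability`, `economics`) are my own simplification of the "What it validates best" column, and the numeric ranks are assumptions.

```python
SPEED_RANK = {"Fast": 0, "Medium": 1}
COST_RANK = {"Low": 0, "Low to Medium": 1, "Medium": 2}
EVIDENCE_RANK = {"Medium": 0, "High": 1, "Very high": 2}

# (method, risk tags, speed, cost, evidence strength), in table order.
METHODS = [
    ("Customer interviews", {"problem"}, "Fast", "Low", "Medium"),
    ("Fake door", {"demand"}, "Fast", "Low", "Medium"),
    ("Prototype usability test", {"usability"}, "Fast", "Low", "Medium"),
    ("Concierge MVP", {"value"}, "Medium", "Medium", "High"),
    ("Wizard-of-Oz", {"value", "usability"}, "Medium", "Medium", "High"),
    ("Pre-sales / paid pilot", {"viability"}, "Medium", "Low to Medium", "Very high"),
    ("ROI sketch with kill threshold", {"economics"}, "Fast", "Low", "Medium"),
]


def smallest_tests(risk: str, min_evidence: str = "Medium") -> list[str]:
    """List methods that address `risk`, fastest and cheapest first."""
    candidates = [
        m for m in METHODS
        if risk in m[1] and EVIDENCE_RANK[m[4]] >= EVIDENCE_RANK[min_evidence]
    ]
    # sorted() is stable, so ties keep the table's original order.
    candidates.sort(key=lambda m: (SPEED_RANK[m[2]], COST_RANK[m[3]]))
    return [m[0] for m in candidates]


print(smallest_tests("usability"))
print(smallest_tests("usability", min_evidence="High"))
```

Raising `min_evidence` is the interesting lever: it shows when a fast, cheap method is no longer enough and a slower test becomes the smallest one that still answers the question.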

Define success metrics before you start testing

Validation fails when teams run experiments without a definition of “success.” Decide upfront:

- Primary metric: the outcome you truly care about (for example, time-to-quote, onboarding completion, paid conversion)

- Leading indicator: something you can measure earlier (for example, demo requests, activation rate)

- Decision rule: what happens if the metric is below threshold

Example decision rule:

If fewer than 8 out of 15 qualified users complete the prototype task without assistance, we revise the workflow before building.

This simple discipline prevents endless “maybe” outcomes.
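A decision rule like the one above is easy to write down as a function before the first session, which makes it hard to renegotiate afterward. The sketch below encodes the 8-out-of-15 example. The function name and return strings are assumptions, and the early "revise" exit (stopping once 8 completions are no longer reachable) is an addition for illustration that goes beyond the rule as stated.

```python
def prototype_decision(
    completed_unassisted: int,
    tested: int,
    required: int = 8,   # minimum unassisted completions, from the example rule
    planned: int = 15,   # qualified users planned for the test
) -> str:
    """Turn prototype test counts into a go / revise / continue decision."""
    if not 0 <= completed_unassisted <= tested <= planned:
        raise ValueError("expected 0 <= completed_unassisted <= tested <= planned")

    if completed_unassisted >= required:
        return "proceed to build"

    remaining = planned - tested
    if completed_unassisted + remaining < required:
        # Even if every remaining user succeeds, the threshold cannot be met.
        return "revise workflow"

    return "keep testing"


print(prototype_decision(completed_unassisted=7, tested=15))
print(prototype_decision(completed_unassisted=3, tested=6))
```

With all 15 sessions done, the function can only return "proceed to build" or "revise workflow", which is exactly the point of a decision rule.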

Common traps that make validation look successful (when it is not)

Confusing enthusiasm with commitment

People are polite. They will say “nice idea.” Commitment looks like:

- Scheduling time to test

- Sharing real data

- Introducing you to the buyer

- Agreeing to a pilot

- Accepting a workflow change

Asking users to design the product

Users are excellent at describing pain and constraints. They are usually not great at designing solutions. Your job is to propose options and test outcomes.

Validating with the wrong segment

A solution can test “well” with friendly users and still fail in the real market. Define your segment with constraints (industry, role, company size, maturity, compliance posture) and recruit accordingly.

Ignoring the adoption surface

In B2B, the best feature can fail because:

- It requires a security review you cannot pass

- It changes an approval chain

- It breaks an existing integration

- It adds work for a team that does not benefit

Validation should include these stakeholders early.

A pragmatic 10-day validation sprint (no production code)

You can run a focused sprint that produces evidence, not slides.

| Day range | Goal | Output |
|---|---|---|
| Days 1 to 2 | Align on outcome and segment | One-page value hypothesis + success metrics |
| Days 3 to 5 | Discover real workflows and pain | 6 to 10 interviews, notes, problem ranking |
| Days 6 to 7 | Test demand and messaging | Landing page or in-product CTA + results |
| Days 8 to 9 | Test usability | Clickable prototype + task success rate |
| Day 10 | Decide | Go, pivot, or stop, with decision rules |

If the signal is strong, then you earn the right to write code.

How “validate value” connects to engineering decisions

Once value is validated, engineering can move faster because scope is anchored to outcomes.

This is where teams often make expensive mistakes like over-architecting early. A better pattern is:

- Build a thin, production-ready slice that proves end-to-end delivery

- Keep architecture simple unless constraints demand otherwise

- Make non-functional requirements explicit (latency, reliability, security)

Wolf-Tech has a practical, engineering-focused approach to this in:

- Building Apps: MVP Checklist for Faster Launches

- Developing Software Solutions That Actually Scale

- How to Choose the Right Tech Stack in 2025

Even if your headline goal is “before coding,” it helps to remember that the next step after strong validation is usually not a full build. It is a thin vertical slice that proves feasibility and operability with minimal scope.

When to bring in an experienced partner

Validation is cross-functional. It touches product, UX, engineering, data, and business strategy. External support makes sense when:

- You need a fast, structured discovery and validation process

- The solution requires complex integrations or legacy constraints

- You must align multiple stakeholders on measurable outcomes

- You want to avoid building a “prototype that becomes production” by accident

Wolf-Tech provides full-stack development and technology consulting for teams that need to validate, then build with a modern delivery and quality baseline. If you want help shaping the validation plan, pressure-testing assumptions, or translating validated value into a buildable scope, you can explore Wolf-Tech at wolf-tech.io.

Frequently Asked Questions

What is the fastest way to validate value before coding?

The fastest combination is usually 6 to 10 customer interviews focused on past behavior plus a fake door test (landing page or in-product CTA). If you can add a paid pilot or LOI, that is an even stronger viability signal.

How many interviews do you need to validate a problem?

There is no universal number, but you often see patterns emerge after 6 to 10 interviews within a clearly defined segment. The key is segment consistency, not volume.

What should I measure in a fake door test?

Measure conversion to a meaningful commitment, not just clicks. Examples include demo requests, waitlist signups with firmographic qualification, or “request access” submissions.

Can you validate value with internal stakeholders only?

You can validate alignment and constraints, but you cannot validate market value without real users (or at least real buyers). Internal validation is necessary, not sufficient.

When is it okay to start coding during validation?

When the highest remaining risk is feasibility or integration uncertainty. Even then, start with a thin vertical slice that proves end-to-end delivery, not a broad feature build.

Turn validation into a build plan that ships

If you have a promising idea but want to avoid expensive misbuilds, Wolf-Tech can help you validate value, define measurable success metrics, and translate evidence into a scope your team can ship safely.

Explore Wolf-Tech’s services at https://wolf-tech.io and use the validation methods above to make sure your next build is tied to outcomes, not guesses.