Building Apps: MVP Checklist for Faster Launches

Most MVPs miss their launch date for the same reason: the team starts “building” before it has a shared definition of what the first release must prove and what it must not break. The fix is not more process; it is a lightweight checklist that forces the highest-leverage decisions early, so you can move fast without creating rework, security gaps, or production fire drills.

This MVP checklist is designed for founders, product leaders, and engineering teams who want faster launches with fewer surprises. It assumes you are building a real product (web, mobile, internal platform, B2B SaaS), not a throwaway demo.

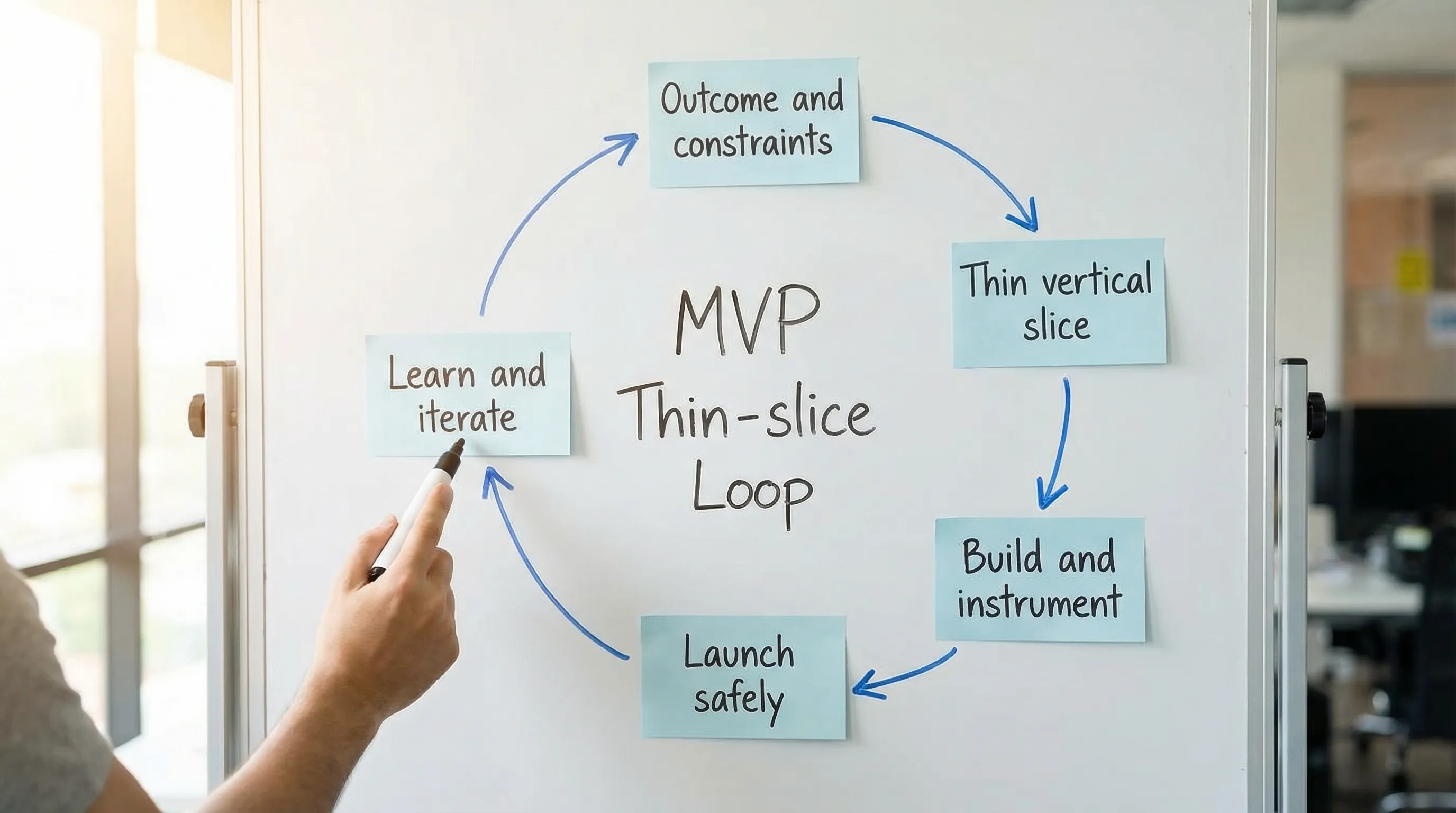

What “MVP” should mean in 2026

An MVP is not “the smallest feature set.” It is the smallest end-to-end product slice that can:

- Deliver a measurable outcome for a specific user

- Run safely in production (even if traffic is small)

- Generate learning you can act on (usage, drop-offs, revenue, time saved)

If you can’t operate it, observe it, or change it safely, you do not have an MVP; you have a prototype.

MVP checklist at a glance (use this to align stakeholders)

Use the table below as your “definition of ready to build” and “definition of ready to launch.” The goal is not to complete paperwork; it is to avoid costly backtracking.

| Checklist area | Why it speeds up launches | “Done” proof you can point to |
|---|---|---|
| Outcome and audience | Prevents scope creep and “feature soup” | 1 outcome metric, 1 primary persona, 1 key workflow |
| Thin vertical slice scope | Reduces parallel work and integration risk | A mapped flow from UI to data write, with explicit exclusions |
| Data and integrations | Avoids late surprises (auth, sync, third parties) | Data entities + 1–2 critical integrations validated |
| Architecture and stack baseline | Prevents rewrites and scaling myths | A short architecture note + initial repo structure |
| Security and privacy baseline | Avoids launch blockers and breaches | Threat sketch + authz model + secrets handling approach |
| Delivery system (CI/CD) | Makes shipping repeatable, reduces manual work | Automated build, tests, deploy to staging, rollback path |
| Quality gates and testing | Avoids flaky releases and long bug tails | Test pyramid decision + “definition of done” |
| Observability and operations | Prevents “we shipped but can’t debug” | Logs/metrics/traces + basic runbook + alerting thresholds |
| Launch plan and learning loop | Converts launch into evidence, not opinions | Feature flags + analytics events + feedback channel |

If you want a broader, end-to-end guide for web apps, pair this with Wolf-Tech’s Build a Web Application: Step-by-Step Checklist.

1) Outcome, users, and constraints (the anti-rework starter pack)

Checklist: Before you estimate anything, write these down in plain language.

- Primary user and job: “For (persona), help them (job) in (context).”

- One success metric: conversion, time saved, revenue, retention, error reduction.

- Constraints: compliance, data residency, SSO requirements, budget/timebox, legacy dependencies.

- Non-goals: what you explicitly will not solve in v1.

Why this speeds things up: teams stop debating edge cases and stop shipping “nice to have” work that does not move the metric.

Practical tip: If stakeholders cannot agree on one success metric, you are not ready to build. You are still in discovery.

2) Scope the MVP as a thin vertical slice (not a feature list)

Fast launches come from finishing one complete slice, not starting ten parts.

Checklist:

- Choose one core workflow and map it end-to-end (screen, API, data, permissions).

- Define the “happy path” first, then list the top 3 failure modes you will handle.

- Write explicit exclusions (for example: “No bulk import in v1,” “No role delegation yet”).

- Decide what is “manual for now” (ops/admin tasks, onboarding steps, support).

A useful artifact is a “thin slice spec” that includes:

- Entry point (how the user starts)

- Steps and validations

- Data created/updated

- Notifications triggered (or not)

- Audit needs (if applicable)
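One way to keep the thin slice spec honest is to store it as a small structured record that the team reviews before building. The sketch below is a hypothetical format, not a standard: the field names mirror the list above, and the rule that a ready spec lists one to three failure modes and at least one exclusion is our own reading of the checklist.

```python
from dataclasses import dataclass, field


@dataclass
class ThinSliceSpec:
    """One end-to-end workflow captured as a reviewable artifact (hypothetical format)."""

    name: str
    entry_point: str                 # how the user starts
    steps: list[str]                 # steps, each with its validation
    data_written: list[str]          # entities created or updated
    notifications: list[str] = field(default_factory=list)  # empty means none
    audit_events: list[str] = field(default_factory=list)   # empty if not applicable
    failure_modes: list[str] = field(default_factory=list)  # top failure modes handled
    exclusions: list[str] = field(default_factory=list)     # explicit "not in v1"

    def gaps(self) -> list[str]:
        """Return what still blocks 'ready to build' (empty list means ready)."""
        problems = []
        if not self.entry_point:
            problems.append("entry point missing")
        if not self.steps:
            problems.append("no steps defined")
        if not self.data_written:
            problems.append("no data writes mapped")
        if not 1 <= len(self.failure_modes) <= 3:
            problems.append("list the top 1-3 failure modes")
        if not self.exclusions:
            problems.append("no explicit exclusions")
        return problems


spec = ThinSliceSpec(
    name="Create invoice",
    entry_point="'New invoice' button on the dashboard",
    steps=["pick customer (must exist)", "add line items (qty > 0)", "submit"],
    data_written=["Invoice", "InvoiceLine"],
    failure_modes=["unknown customer", "payment provider timeout"],
    exclusions=["No bulk import in v1"],
)
print(spec.gaps())  # []
```

Keeping the spec in the repository next to the code means a change in scope shows up as a reviewable diff rather than a hallway conversation.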

If you want a stronger requirements-to-UI workflow, see Wolf-Tech’s guide on software designing from requirements to UI flows.

3) Data model and integrations (decide early, keep it minimal)

A large share of MVP delays come from data and external systems, not UI.

Checklist:

- Data entities: 5–12 core entities max for most MVPs.

- Source of truth per entity: your app vs a third party.

- Identity strategy: email/password, passwordless, SSO, enterprise SAML/OIDC.

- Integrations: pick 1–2 that unlock value now, defer the rest.

- Migration or import: if you need it, define the simplest possible path.

Speed principle: make the data model expressive enough for your workflow, but resist “future-proofing” relationships you do not use yet.
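Making the source of truth explicit in code helps the team avoid accidentally writing to data another system owns. This is a minimal sketch with a hypothetical five-entity model in which a CRM and a billing system own two of the entities; the 12-entity ceiling comes from the checklist above.

```python
from dataclasses import dataclass
from enum import Enum


class SourceOfTruth(Enum):
    APP = "app"                  # the MVP owns and writes these records
    THIRD_PARTY = "third_party"  # another system owns them; the app keeps a synced copy


@dataclass(frozen=True)
class Entity:
    name: str
    source: SourceOfTruth
    owner_system: str = "app"    # which system is authoritative


# Hypothetical MVP data model: five core entities, two owned elsewhere.
ENTITIES = {
    e.name: e
    for e in [
        Entity("Account", SourceOfTruth.APP),
        Entity("Project", SourceOfTruth.APP),
        Entity("Task", SourceOfTruth.APP),
        Entity("Customer", SourceOfTruth.THIRD_PARTY, owner_system="crm"),
        Entity("Invoice", SourceOfTruth.THIRD_PARTY, owner_system="billing"),
    ]
}


def check_model(entities: dict, max_entities: int = 12) -> None:
    """Fail fast if the model grows past the MVP guideline."""
    if len(entities) > max_entities:
        raise ValueError(f"{len(entities)} entities; keep the MVP to {max_entities} or fewer")


def assert_writable(name: str) -> None:
    """Only entities the app owns may be written locally."""
    entity = ENTITIES[name]
    if entity.source is not SourceOfTruth.APP:
        raise PermissionError(
            f"{name} is owned by {entity.owner_system}; change it through that integration"
        )


check_model(ENTITIES)
assert_writable("Task")        # fine: the app is the source of truth
# assert_writable("Customer")  # would raise PermissionError: the CRM owns it
```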

4) Architecture and tech stack baseline (choose defaults that reduce decisions)

You do not need a perfect architecture for an MVP, but you do need a coherent baseline so the team stops renegotiating fundamentals every week.

Checklist:

- Start with a modular monolith unless you have a proven reason not to.

- Define API style (REST, GraphQL, tRPC) based on team skill and product needs.

- Pick a deployment target (cloud provider, PaaS, container platform) that your team can operate.

- Document 3–5 architecture guardrails (for example: “No cross-module DB writes without a domain service”).
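Guardrails stick better when CI checks them. The sketch below enforces the example guardrail above under an assumed, hypothetical layout: `app/<module>/repository.py` performs database writes and `app/<module>/service.py` is the domain service other modules should call. It uses Python’s standard `ast` module and only checks absolute imports.

```python
import ast


def repository_violations(module: str, source: str) -> list[str]:
    """List other modules' repository packages imported by `module`'s code.

    Only absolute imports are checked; relative imports would need extra handling.
    """
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            targets = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            targets = [node.module] + [f"{node.module}.{alias.name}" for alias in node.names]
        else:
            continue
        for target in targets:
            parts = target.split(".")
            if len(parts) >= 3 and parts[0] == "app" and parts[1] != module and parts[2] == "repository":
                found.add(".".join(parts[:3]))
    return sorted(found)


projects_code = """
import app.projects.repository            # own repository: allowed
from app.billing import service           # other module's domain service: allowed
from app.billing.repository import save   # other module's repository: violation
"""
print(repository_violations("projects", projects_code))  # ['app.billing.repository']
```

In a pipeline, you would run this over every file in each module and fail the build when the list is non-empty. Dedicated import-linting tools can do the same job; this version only shows the idea with the standard library.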

For a practical decision framework, use Wolf-Tech’s How to Choose the Right Tech Stack in 2025.

5) Security and privacy baseline (avoid launch-day blockers)

Security work does not have to slow you down, but skipping the basics will.

Checklist:

- Threat sketch: identify what you are protecting (accounts, payments, PII, IP) and likely attack paths.

- Authentication and authorization model: roles, permissions, and how they are enforced.

- Secrets management: never in source control, rotatable, environment-separated.

- Data protection: encryption in transit, encryption at rest where appropriate.

- OWASP basics: input validation, CSRF strategy, SSRF awareness, secure headers.
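An authorization model is easier to audit when roles, permissions, and enforcement live in one place and unknown roles are denied by default. This is a minimal sketch; the roles, permission names, and `requires` decorator are hypothetical, and a web framework would normally supply the current user from the session or token.

```python
from functools import wraps

# Hypothetical roles for illustration: derive the real ones from your threat sketch.
ROLE_PERMISSIONS = {
    "viewer": {"invoice:read"},
    "member": {"invoice:read", "invoice:create"},
    "admin": {"invoice:read", "invoice:create", "invoice:delete"},
}


class Forbidden(Exception):
    """Raised when the caller's role lacks the required permission."""


def can(role: str, permission: str) -> bool:
    # Unknown roles map to an empty set, so access is denied by default.
    return permission in ROLE_PERMISSIONS.get(role, set())


def requires(permission: str):
    """Enforce `permission` before the wrapped handler runs."""
    def decorator(handler):
        @wraps(handler)
        def wrapper(user: dict, *args, **kwargs):
            if not can(user.get("role", ""), permission):
                raise Forbidden(f"user {user.get('id')} lacks {permission}")
            return handler(user, *args, **kwargs)
        return wrapper
    return decorator


@requires("invoice:delete")
def delete_invoice(user: dict, invoice_id: str) -> str:
    return f"deleted {invoice_id}"


print(delete_invoice({"id": "u1", "role": "admin"}, "inv-42"))  # deleted inv-42
```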


Speed principle: define a “secure-by-default” template (project scaffolding, headers, auth middleware) so new code inherits safe defaults.
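As one piece of a secure-by-default template, security headers can be added once by middleware instead of by each route. This sketch assumes a WSGI application (PEP 3333); the header values are common starting points rather than a complete policy. The Content Security Policy in particular usually needs tuning, and HSTS only takes effect over HTTPS.

```python
# Headers every response gets unless the app already set them.
DEFAULT_SECURITY_HEADERS = (
    ("Strict-Transport-Security", "max-age=31536000; includeSubDomains"),
    ("X-Content-Type-Options", "nosniff"),
    ("X-Frame-Options", "DENY"),
    ("Referrer-Policy", "strict-origin-when-cross-origin"),
    ("Content-Security-Policy", "default-src 'self'"),
)


class SecureHeadersMiddleware:
    """WSGI middleware that adds default security headers to every response."""

    def __init__(self, app, headers=DEFAULT_SECURITY_HEADERS):
        self.app = app
        self.headers = headers

    def __call__(self, environ, start_response):
        def start_with_headers(status, response_headers, exc_info=None):
            present = {name.lower() for name, _ in response_headers}
            # Only add headers the app did not set, so a route can override a default.
            extra = [(n, v) for n, v in self.headers if n.lower() not in present]
            return start_response(status, list(response_headers) + extra, exc_info)

        return self.app(environ, start_with_headers)


def hello_app(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"ok"]


app = SecureHeadersMiddleware(hello_app)
```

To try it locally, serve `app` with the standard library’s `wsgiref.simple_server.make_server` and inspect the response headers. New services built from the same template inherit the defaults without anyone having to remember them.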

6) Delivery system (CI/CD) that makes shipping routine

If deployments are manual, launches are slow and stressful.

Checklist:

- Trunk-based development (or a tightly controlled alternative), so you avoid long-lived branches.

- Automated pipeline: build, lint, test, package, deploy.

- Environment strategy: dev, staging, production, with clear promotion rules.

- Rollback plan: how you revert fast (previous artifact, feature flags, DB-safe rollbacks).

- Infrastructure as code (even minimal) for repeatability.

Teams that optimize delivery performance often track the DORA metrics: deployment frequency, lead time for changes, change failure rate, and time to restore service (MTTR). For the practical implementation side, start with Wolf-Tech’s CI/CD technology guide and the DORA research.
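You do not need a platform to start tracking these four numbers; a list of deployment records is enough. The sketch below assumes a hypothetical record shape. Time to restore is approximated from the failing deploy rather than from incident detection, which is a simplification you may want to refine.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    deployed_at: datetime
    committed_at: datetime                  # commit time of the change being deployed
    failed: bool = False                    # needed a rollback, hotfix, or incident
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def hours(delta: timedelta) -> float:
    return delta / timedelta(hours=1)


def dora_metrics(deploys: list, window_days: int) -> dict:
    if not deploys:
        return {}
    failures = [d for d in deploys if d.failed]
    recovered = [d for d in failures if d.restored_at is not None]
    return {
        "deploys_per_week": len(deploys) / (window_days / 7),
        "median_lead_time_h": median(hours(d.deployed_at - d.committed_at) for d in deploys),
        "change_failure_rate": len(failures) / len(deploys),
        # Approximation: measured from the failing deploy, not from incident detection.
        "median_time_to_restore_h": (
            median(hours(d.restored_at - d.deployed_at) for d in recovered) if recovered else None
        ),
    }


t0 = datetime(2026, 1, 5, 9, 0)
deploys = [
    Deployment(t0, t0 - timedelta(hours=4)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(hours=20)),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=1, hours=22), failed=True,
               restored_at=t0 + timedelta(days=2, minutes=30)),
    Deployment(t0 + timedelta(days=3), t0 + timedelta(days=2, hours=18)),
]
print(dora_metrics(deploys, window_days=14))
```

With this sample data, four deploys in two weeks give 2 deploys per week, a median lead time of 4 hours, a 25% change failure rate, and a 0.5-hour time to restore. The trend over several weeks matters more than any single value.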

7) Testing and code quality gates (the minimum that keeps you fast)

For MVPs, the goal is not perfect coverage. The goal is fast feedback and stable releases.

Checklist:

- Definition of done includes tests for critical paths and basic negative cases.

- Testing strategy: a small number of high-value end-to-end tests, plus unit tests for core logic.

- PR size discipline: keep changes reviewable to avoid review bottlenecks.

- Static checks: formatting, linting, type checks, dependency scanning.

- Flaky test policy: fix or quarantine quickly.
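A flaky test is one whose outcome changes without any code change, so a simple detector reruns a test and looks for mixed results. This is a standard-library sketch; the two check functions are hypothetical stand-ins (one deliberately fails on every other run to mimic a timing-dependent test), and in practice you would wire the same idea into your test runner.

```python
from typing import Callable


def classify(test: Callable[[], None], runs: int = 5) -> str:
    """Rerun a test several times; mixed outcomes mean the test is flaky."""
    outcomes = set()
    for _ in range(runs):
        try:
            test()
            outcomes.add("pass")
        except Exception:  # assertion failures and errors both count as a fail
            outcomes.add("fail")
    return outcomes.pop() if len(outcomes) == 1 else "flaky"


# Hypothetical stand-in for a timing-dependent test: fails on every other run.
_calls = {"n": 0}


def check_order_sync():
    _calls["n"] += 1
    assert _calls["n"] % 2 == 0


def check_invoice_total():
    assert sum([1999, 500]) == 2499


SUITE = {"check_order_sync": check_order_sync, "check_invoice_total": check_invoice_total}
QUARANTINE = {name for name, test in SUITE.items() if classify(test) == "flaky"}
print(sorted(QUARANTINE))  # ['check_order_sync']
```

Quarantined tests should still run and report, but they should not block merges. Give each one an owner and a deadline so quarantine does not become permanent.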

A practical way to keep quality measurable without bureaucracy is to adopt a small scorecard; Wolf-Tech’s guide to code quality metrics that matter is a strong reference for that.

8) Observability and operational readiness (so production is not a black box)

Many teams “launch” and then lose weeks to guessing what users are experiencing.

Checklist:

- Structured logging for key actions (with correlation IDs).

- Metrics for traffic, errors, latency (p95 matters), and saturation.

- Tracing if you have multiple services or external dependencies.

- SLO-lite: define 1–2 reliability targets for the MVP (for example, error rate, availability during business hours).

- Runbook v1: how to deploy, rollback, and troubleshoot the top 5 incidents.
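Structured logging with correlation IDs can be set up with Python’s standard `logging` and `contextvars` modules. This is a minimal sketch: the logger name, the `fields` convention for structured data, and the sample invoice values are assumptions, and in a real service the ID would be set by request middleware, usually from an incoming header.

```python
import contextvars
import json
import logging
import uuid
from typing import Optional

# Correlation ID of the request currently being handled ("-" outside a request).
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object so logs can be queried by field."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "msg": record.getMessage(),
            "correlation_id": correlation_id.get(),
        }
        # Extra structured fields, passed as log.info(..., extra={"fields": {...}}).
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


log = logging.getLogger("app")
_handler = logging.StreamHandler()
_handler.setFormatter(JsonFormatter())
log.addHandler(_handler)
log.setLevel(logging.INFO)


def handle_request(incoming_id: Optional[str] = None) -> None:
    # Reuse the caller's ID (for example from a request header) or mint one.
    token = correlation_id.set(incoming_id or uuid.uuid4().hex)
    try:
        log.info("invoice created", extra={"fields": {"invoice_id": "inv-42", "amount_cents": 1999}})
    finally:
        correlation_id.reset(token)


handle_request("req-123")
```

Each log line is a single JSON object carrying `correlation_id`, so one user’s request can be followed across every line it produced. Passing the same ID to downstream calls extends that to other services.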

If your MVP has meaningful backend complexity, use Wolf-Tech’s backend development best practices for reliability as a baseline.

9) Performance and UX budgets (small constraints, big speed gains)

Performance problems discovered late often force architectural changes, which are expensive.

Checklist:

- Define budgets: page load expectations, API p95 latency targets, payload size constraints.

- Measure early: Lighthouse/lab tests are helpful, but real-user monitoring (RUM) is better as soon as you can.

- Accessibility basics: keyboard navigation, labels, contrast, focus states.

Speed principle: a simple budget in CI catches regressions early, when fixes are cheap.
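A CI budget check can be as small as computing a p95 from a short load-test run and failing the build when it exceeds the target. The budgets below are hypothetical placeholders. The sketch uses the nearest-rank percentile; monitoring tools often interpolate, so their p95 may differ slightly.

```python
import math

# Hypothetical budgets: set them from your own product and UX expectations.
BUDGETS = {"p95_latency_ms": 300.0, "max_payload_kb": 200.0}


def p95(samples: list) -> float:
    """Nearest-rank 95th percentile of a non-empty sample."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def check_budgets(latencies_ms: list, payload_kb: float) -> list:
    """Return human-readable budget violations (empty list means within budget)."""
    failures = []
    observed = p95(latencies_ms)
    if observed > BUDGETS["p95_latency_ms"]:
        failures.append(f"p95 latency {observed:.0f} ms exceeds {BUDGETS['p95_latency_ms']:.0f} ms")
    if payload_kb > BUDGETS["max_payload_kb"]:
        failures.append(f"payload {payload_kb:.0f} KB exceeds {BUDGETS['max_payload_kb']:.0f} KB")
    return failures


def main(latencies_ms: list, payload_kb: float) -> int:
    """Print violations and return a CI exit code (non-zero fails the build)."""
    problems = check_budgets(latencies_ms, payload_kb)
    for problem in problems:
        print(f"BUDGET EXCEEDED: {problem}")
    return 1 if problems else 0
```

In CI, you would feed it measurements from a lab run and call `sys.exit(main(latencies, payload_kb))`, so a regression fails the pipeline on the commit that introduced it.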

10) Launch plan: feature flags, analytics, and support routing

A fast launch is a controlled rollout with a clear learning loop.

Checklist:

- Feature flags for risky capabilities and staged enablement.

- Analytics events tied to the outcome metric (activation, key action completed, conversion).

- Feedback channel: in-product prompt, support email, or pilot customer check-ins.

- Release checklist: data backups, incident contact, on-call window, comms plan.
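Staged enablement usually relies on a stable hash of the user ID, which keeps a given user’s flag result from flipping between requests. The sketch below assumes a hypothetical in-code flag table with a rollout percentage and a pilot allowlist. A hosted flag service would normally replace it, but the bucketing idea is the same.

```python
import hashlib

# Hypothetical flag config: rollout percentage (0-100) plus a pilot allowlist.
FLAGS = {
    "new_invoice_flow": {"percent": 10, "allow": {"pilot-acme"}},
    "bulk_import": {"percent": 0, "allow": set()},
}


def bucket(flag: str, user_id: str) -> int:
    """Map (flag, user) to a stable bucket 0-99."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(flag: str, user_id: str) -> bool:
    config = FLAGS.get(flag)
    if config is None:
        return False  # unknown flags are off: a safe default
    if user_id in config["allow"]:
        return True
    return bucket(flag, user_id) < config["percent"]


print(is_enabled("new_invoice_flow", "pilot-acme"))  # True
print(is_enabled("bulk_import", "user-1"))           # False
```

Raising `percent` from 10 to 50 keeps everyone who already had the feature, because any bucket below 10 is also below 50. Setting it to 0 acts as a kill switch for everyone except the allowlist.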

A practical “MVP exit criteria” scorecard (copy/paste)

Use this as a lightweight gate before you call the MVP “ready.”

| Category | Exit criteria (minimum) | Common fast-launch pitfall |
|---|---|---|
| Scope | One workflow works end-to-end | Shipping partial features that cannot be used |
| Security | Authn/authz works, secrets safe, basic OWASP risks addressed | Adding security after integrations are already live |
| Delivery | CI/CD deploys reliably to staging and production | Manual deploy steps living in one person’s head |
| Quality | Critical path tests + PR review discipline | “We’ll test after we finish features” |
| Operability | Logs/metrics exist, runbook exists, rollback works | Launching without a way to debug or revert |
| Learning | Events + dashboard/report that answers “did it work?” | Collecting data that does not map to decisions |

Common mistakes that slow MVP launches (even with strong teams)

Over-scoping the first release: MVP expands to include every stakeholder request. Fix: enforce explicit exclusions.

Building for scale before usage exists: microservices, complex eventing, and multi-region setups without a proven bottleneck. Fix: choose the simplest architecture you can operate.

Late integration discovery: “We assumed the CRM API supports X.” Fix: validate critical integrations in week one with a thin spike.

No delivery system: teams burn days on deployments and environment drift. Fix: automate early, keep it boring.

No operational ownership: “Dev team ships, ops team handles it.” Fix: define who owns production health from the start.

Frequently Asked Questions

What should be included in an MVP checklist? An MVP checklist should cover outcome definition, thin-slice scope, data and integrations, security baseline, CI/CD, testing, observability, and a launch learning loop.

How do you scope an MVP for a faster launch? Scope one end-to-end workflow (a thin vertical slice), define explicit exclusions, and defer secondary features and integrations until after you validate the outcome metric.

How long should it take to build an MVP? It depends on complexity and constraints (integrations, compliance, legacy systems). The best predictor is whether you can deliver one thin slice quickly with automated delivery and clear scope.

Do MVPs need CI/CD and monitoring? Yes, if you are launching to real users. Even minimal CI/CD and basic logs/metrics reduce risk and speed up iteration by making releases repeatable and debuggable.

What’s the difference between an MVP and a prototype? A prototype demonstrates an idea. An MVP runs in production, has basic security and operability, and generates measurable learning about user value.

Launch faster (without gambling on quality)

If you want help turning this checklist into an executable plan, Wolf-Tech supports teams with full-stack development, tech stack strategy, code quality consulting, and cloud and DevOps enablement.

Start with a quick conversation at Wolf-Tech to review your MVP scope, risks, and fastest credible path to production.