Full Stack Development: What CTOs Should Know

Full stack development has become a default expectation for modern product delivery, but the term is often used loosely. For CTOs, the practical question is not “do we do full stack”, it is “how do we structure end-to-end ownership so we ship faster without accumulating hidden risk”. In 2026, that means aligning frontend, backend, data, cloud, and DevOps into a cohesive delivery system with clear interfaces, measurable reliability, and predictable change.

What “full stack development” should mean at CTO level

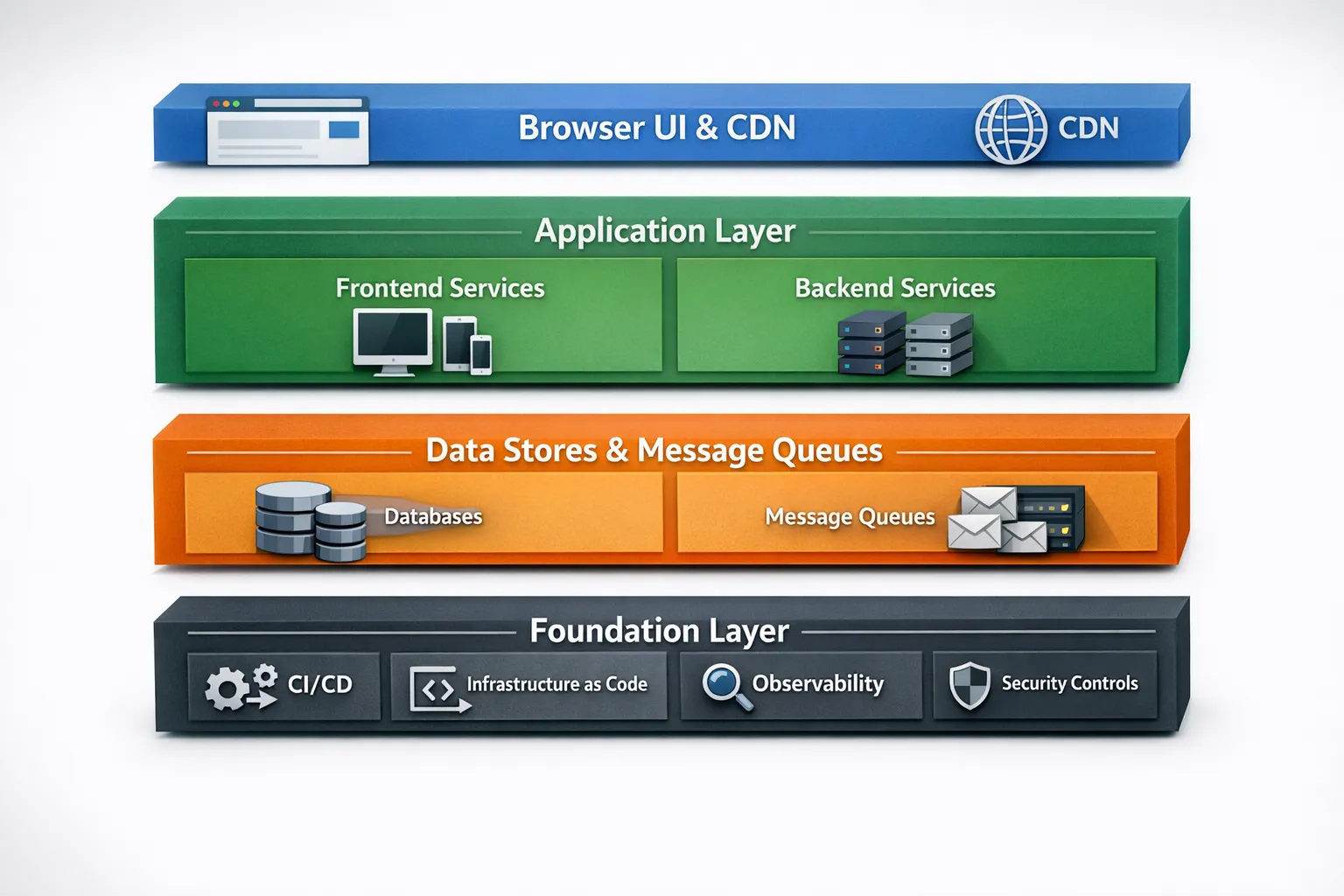

At CTO level, full stack development is less about individuals who can do everything, and more about teams that can deliver a customer outcome end-to-end. That includes:

- Product UI and UX implementation (web, mobile, accessibility, performance)

- Backend services and APIs (contracts, authorization, scaling characteristics)

- Data layer (schema design, migrations, query performance, lifecycle policies)

- Cloud and delivery (CI/CD, IaC, environments, secrets, observability)

- Quality and security controls (tests, code review, dependency hygiene, auditability)

A useful test is: can one team take a feature from a PRD to production, operate it, and improve it based on metrics, without handing off critical work to three other teams?

When the answer is “yes”, you have full stack capability. When the answer is “it depends who’s available”, you have heroic effort.

Full stack vs specialist teams: the trade most CTOs actually make

Many CTOs frame the choice as generalists versus specialists. In practice, most high-performing orgs build a T-shaped model:

- Product teams contain engineers who can work across boundaries (frontend to API, API to data)

- Specialists (security, platform, SRE, data engineering) provide guardrails, reusable primitives, and high-leverage support

The risk of pushing “everyone must be full stack” is that you can accidentally create a shallow organization where:

- Frontend performance and accessibility regress

- Data design becomes incidental, which later becomes expensive

- Delivery pipelines are fragile, because “DevOps” is treated as a part-time task

The risk of hyper-specialization is the opposite:

- Hand-offs multiply

- Ownership is unclear

- Lead time increases even when teams are staffed

The CTO goal is fast flow with bounded risk, not ideological purity.

The CTO checklist: what to clarify before you scale full stack delivery

1) Define the “stack” in business terms, not tooling terms

Before you discuss frameworks, align on what the business needs from the system:

- Availability and recovery targets (SLOs, RTO/RPO)

- Data sensitivity and compliance obligations

- Peak traffic patterns and latency budgets

- Integration surface (partners, internal platforms, data exports)

- Release expectations (daily iteration vs quarterly change windows)

Tooling follows from those constraints. If you want a deeper decision framework for selecting technologies, Wolf-Tech’s guide on how to choose the right tech stack in 2025 is a solid companion, but your first step is still the outcomes and constraints.

2) Decide where you want strong boundaries

“Full stack” should not mean “tangled”. CTOs should explicitly choose boundaries such as:

- Domain boundaries (what belongs in which service or module)

- API boundaries (contracts, versioning, deprecation policy)

- Data boundaries (ownership of tables, events, and migrations)

- Operational boundaries (who owns on-call, dashboards, runbooks)

If boundaries are implicit, teams will redraw them under pressure, and you will pay for it in incidents and slowdowns.

3) Treat operational readiness as part of the stack

A feature is not “done” when it merges. It is done when it is observable and supportable.

CTO-level full stack development includes:

- Structured logging and trace context

- Service-level indicators (latency, error rate, saturation)

- Alerting that maps to user impact

- Runbooks and safe rollout patterns

If you want a neutral baseline for reliability language, Google’s SRE concepts are still the most referenced starting point for SLO thinking (see Google’s SRE resources).

Where full stack projects succeed or fail (and what to check)

The failure modes are remarkably consistent. The table below is a practical way to review full stack capability without getting lost in buzzwords.

| Stack area | What a full stack team must decide | Common failure mode | CTO check that prevents it |

|---|---|---|---|

| Frontend | Rendering strategy, caching, accessibility, performance budgets | Slow UX, inconsistent UI, inaccessible flows | Require Lighthouse and Core Web Vitals targets, enforce design system usage |

| APIs | Contract shape, authZ/authN, error semantics, versioning | Breaking clients, inconsistent auth, “mystery 500s” | Enforce API standards (OpenAPI/GraphQL schema), contract tests |

| Data | Schema ownership, migrations, indexes, retention | Slow queries, fragile migrations, unclear ownership | Require migration playbooks, query review, data lifecycle policy |

| Delivery | CI/CD flow, environment strategy, secrets, release safety | Manual steps, snowflake environments, risky deploys | Minimum CI gates, IaC for environments, progressive delivery |

| Observability | Logs, metrics, traces, alerting thresholds | Alert fatigue, blind incident response | SLO-based alerts, on-call runbooks, incident reviews |

| Security | Dependency policy, secrets handling, threat modeling | Leaky secrets, vulnerable dependencies, weak auth | Follow OWASP guidance and SSDF-style controls, automate checks |

For application security baselines, OWASP’s ASVS is a practical reference for defining what “good” looks like without turning every discussion into opinion.

How to hire and structure for full stack delivery (without hiring unicorns)

A common mistake is writing job descriptions that require senior-level expertise across every layer. That tends to either:

- Narrow the candidate pool to a tiny set of expensive hires, or

- Result in mismatches where candidates are strong in one layer and underprepared elsewhere

Instead, define what “full stack” means for your context.

Define the minimum cross-stack capability

For most CTOs, a good baseline is:

- Engineers can take a feature from UI to API to persistence with guidance

- Engineers can debug production issues using logs and traces

- Engineers understand basic cloud primitives even if they are not platform specialists

Then decide where you require deep specialists (examples: high-scale performance engineering, security engineering, complex data infrastructure).

Use a team topology that protects flow

You do not need an organizational overhaul to improve full stack delivery, but you do need clear ownership. Patterns that work well:

- Stream-aligned product teams with end-to-end responsibility for a business domain

- A small platform or enablement function that builds paved roads (CI templates, deployment patterns, observability defaults)

- Embedded security and quality practices (not gatekeeping teams that only say “no”)

Interview for systems thinking, not tool trivia

Good full stack engineers consistently demonstrate:

- Ability to reason about tradeoffs (latency vs cost, flexibility vs consistency)

- Comfort reading unfamiliar code and improving it safely

- Pragmatic debugging skill (hypothesis-driven, using instrumentation)

- Communication that clarifies assumptions

A simple way to test this is a “feature extension” exercise: provide a small app and ask the candidate to add a cross-cutting capability (authorization rule plus UI behavior plus a migration) while keeping it testable.

The delivery practices that make full stack teams high leverage

1) Ship thin vertical slices

Full stack delivery shines when teams can ship a narrow feature end-to-end and validate it in production.

Thin slices force early integration and reduce the risk of late surprises in:

- Authentication and authorization

- Performance bottlenecks

- Data modeling mistakes

- Release and rollback issues

This is also where engineering leaders get the most trustworthy timeline signal.

2) Make quality observable, not aspirational

Quality problems in full stack systems rarely come from a single bad decision. They come from small compromises that accumulate.

CTO-level guardrails that consistently help:

- Trunk-based or short-lived branching, with mandatory code review

- Automated tests that cover behavior changes at the right level

- Static checks that are fast enough to run every PR

- A clear definition of “done” that includes observability and rollout safety

DORA metrics are frequently used to quantify delivery performance (deployment frequency, lead time for changes, change failure rate, time to restore). The research program is widely referenced via DORA’s site, and the metrics are useful precisely because they are hard to game when implemented honestly.

3) Standardize the boring parts

Full stack teams slow down when every project reinvents:

- Auth and permissions patterns

- API response conventions

- Logging and tracing setup

- Deployment pipelines

Standardization does not mean forcing everyone onto one framework. It means creating defaults and templates so teams spend time on product value.

Full stack development in the real world: legacy code and modernization

CTOs rarely get to build on a greenfield. Full stack development becomes a strategic asset when it helps you modernize safely while continuing to deliver features.

The practical approach is to treat legacy modernization as an end-to-end product: improve the system while keeping it running.

A full stack team working in legacy territory should be able to:

- Add tests that lock behavior before changing internals

- Introduce seams (interfaces, adapters) to reduce blast radius

- Improve modules incrementally without breaking integration contracts

- Upgrade delivery safety (feature flags, canary releases) before large refactors

If you need a deeper modernization playbook, Wolf-Tech has detailed guides on code modernization techniques and modernizing legacy systems without disrupting business.

Using market signals to guide full stack priorities

CTOs often have good internal telemetry (product analytics, support tickets, incident reviews), but weaker external telemetry (what prospects and competitors are saying). For many B2B products, Reddit is a surprisingly high-signal channel for:

- Feature comparison threads

- “How do I solve X?” pain points

- Migration and tooling discussions

If your team wants to systematize that without adding manual workload, tools like Redditor AI can help monitor relevant conversations and surface leads and topics, which can then feed your roadmap, content strategy, and even competitive positioning.

When to use an external full stack partner (and how to do it without regret)

Even strong internal teams hit capacity ceilings, especially during:

- A new product line launch

- A legacy modernization initiative with strict continuity requirements

- A platform migration (cloud, data layer, identity)

- A security or reliability push where timelines matter

A partner can add leverage, but only if the engagement is structured to produce durable capability, not just output.

CTO guidance that prevents disappointment:

- Require a short discovery that results in concrete artifacts (architecture notes, risks, thin-slice plan)

- Start with a production-grade vertical slice, not slideware

- Insist on operational readiness (dashboards, runbooks, on-call expectations) from day one

- Make knowledge transfer explicit (pairing, documented decisions, reproducible environments)

If you are evaluating vendors, Wolf-Tech’s guide on how to vet custom software development companies provides a structured way to request proof, not promises.

A pragmatic 30-60-90 day plan for CTOs upgrading full stack capability

Days 1-30: Baseline reality

Capture where you are, without judgment:

- Map your delivery flow from idea to production and identify the biggest wait states

- Pick 1 or 2 key services and document ownership boundaries (domain, API, data)

- Establish initial metrics (DORA metrics plus 1 or 2 user-facing SLOs)

Days 31-60: Install guardrails that improve speed

Focus on changes that remove friction:

- CI/CD minimum gates (tests, linting, dependency checks)

- Observability defaults (logs, traces, dashboards) in templates

- A standard API and error-handling convention

Days 61-90: Prove it with a thin slice

Choose a meaningful feature and deliver it end-to-end:

- Ship behind a flag and roll out progressively

- Measure performance and reliability outcomes

- Run a short retro focused on flow, not blame, then update standards

This gives you a repeatable system, not a one-off success.

How Wolf-Tech supports CTOs building full stack capability

Wolf-Tech specializes in full-stack development and technical growth, with over 18 years of experience supporting teams across modern stacks and industries. Engagements typically center on building, optimizing, and scaling real systems, including:

- Full-stack development for web applications and platforms

- Code quality consulting and engineering standards

- Legacy code optimization and modernization planning

- Cloud, DevOps, databases, and API solutions

- Tech stack strategy and digital transformation guidance

If you want to pressure-test your current approach, a high-leverage starting point is a short assessment that identifies the biggest constraints to end-to-end delivery (architecture, delivery pipeline, quality gates, reliability, team topology), then turns it into a thin-slice execution plan. Learn more about Wolf-Tech at wolf-tech.io.