How to Vet Custom Software Development Companies

Hiring the wrong partner is expensive, slow, and hard to unwind. Hiring the right one can compress timelines, reduce risk, and set you up for long‑term velocity. This guide gives you a practical framework, the questions to ask, and the proof to request when you vet custom software development companies in 2025. Use it to separate polished sales talk from operational reality.

Start with outcomes, then constraints

Before you evaluate vendors, define success for your initiative. Good partners will pressure test these inputs and improve them.

- Business outcomes: revenue goals, cost reduction, customer KPIs, compliance outcomes.

- Scope and boundaries: must‑have capabilities, integrations, data domains, rollout phases.

- Non‑functional requirements: availability targets, latency, privacy, accessibility, analytics.

- Practical constraints: budget ranges, timeline windows, team availability, dependencies.

- Risk posture: regulated data, data residency, vendor lock‑in tolerance, change management.

For a deeper primer on aligning technology with outcomes, see How to Choose the Right Tech Stack in 2025.

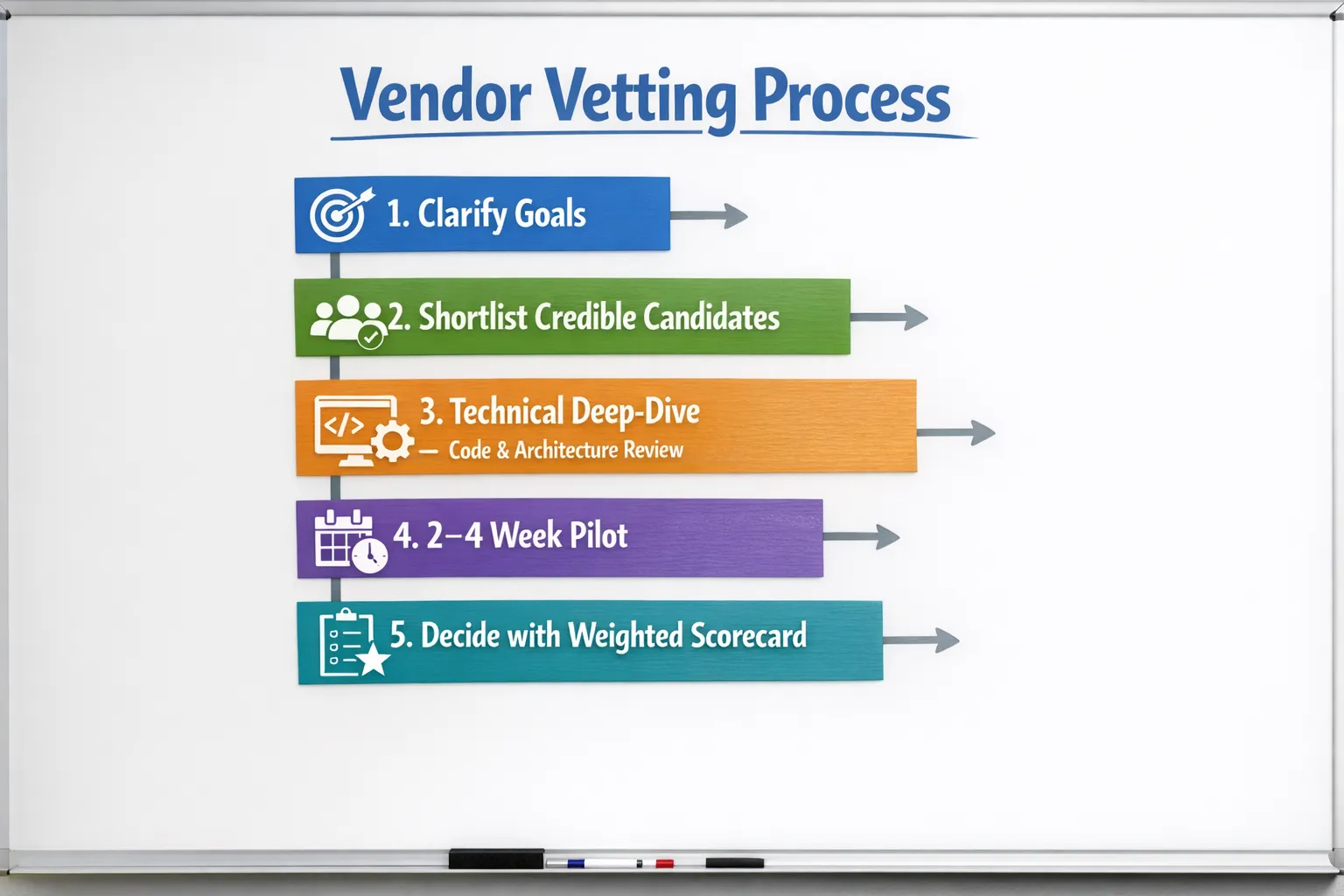

Build a credible shortlist

- Look for problem and industry adjacency, not just generic portfolios. Prioritize companies that have shipped similar scale, complexity, or compliance.

- Scan for public technical signals: open source contributions, talks, writing, and other proof of modern practices.

- Ask for two relevant case studies, ideally with measurable outcomes and constraints similar to yours.

- Avoid giant RFP blasts. Use a concise RFI to filter, then run a structured deep dive with 3 to 5 vendors.

If your focus is web apps, this companion checklist helps frame criteria: Top Traits of Web Application Development Companies.

A practical vetting framework: eight dimensions to score

Below are the dimensions that reliably predict delivery quality. For each, we list what good looks like and the proof to request.

1) Discovery and product thinking

- Evidence of problem framing, user research plans, and success metrics, not just features and hours.

- Clear assumptions, risks, and a lean delivery plan that reduces uncertainty early.

- Proof to request: a sample discovery agenda, a one‑page outcomes hypothesis, a backlog slice with acceptance criteria.

2) Engineering fundamentals

- Modularity, clean boundaries, testable units, and explicit architecture decisions.

- Automated tests, code reviews, CI checks, and trunk‑based development.

- Familiarity with the four key DORA metrics (deployment frequency, lead time for changes, change failure rate, and time to restore service) and why they matter. See the DORA research.

- Proof to request: sanitized repo snapshot or code excerpt, CI pipeline config, a couple of Architecture Decision Records, test coverage summary, and a simple definition of done.
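To make the DORA conversation concrete, it helps to know roughly how the four metrics fall out of delivery data. The Python sketch below computes them from a list of deployment records. The `Deployment` record and its field names are hypothetical, not a standard schema. It also simplifies: each deployment has a single commit timestamp, and restore time is measured from the deploy rather than from incident detection.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record for one production deployment."""
    deployed_at: datetime
    committed_at: datetime  # simplification: one commit timestamp per deployment
    failed: bool  # True if this deployment caused a production failure
    restored_at: Optional[datetime] = None  # when service recovered, for failed deploys


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict:
    """Approximate the four key DORA metrics over a reporting window."""
    if not deploys:
        raise ValueError("need at least one deployment")
    failures = [d for d in deploys if d.failed]
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    # Approximation: restore time is measured from the deploy, not from incident detection.
    restore_times = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deploys_per_day": len(deploys) / window_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


t0 = datetime(2025, 1, 6, 9, 0)
history = [
    Deployment(t0 + timedelta(hours=5), t0, failed=False),
    Deployment(t0 + timedelta(days=1, hours=3), t0 + timedelta(days=1), failed=True,
               restored_at=t0 + timedelta(days=1, hours=4)),
    Deployment(t0 + timedelta(days=2, hours=1), t0 + timedelta(days=2), failed=False),
]
print(dora_metrics(history, window_days=10))
```

Trends across an engagement matter more than any single snapshot. A vendor that tracks these metrics should be able to explain how they collect the underlying timestamps.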

3) Security and compliance by design

- Secure SDLC aligned with NIST SSDF and OWASP ASVS.

- Supply chain integrity: SBOMs and build provenance (for example, following the SLSA guidance at slsa.dev), dependency and container scanning, and secrets management.

- Appropriate certification posture, such as SOC 2 Type II or ISO/IEC 27001, if they will process sensitive data.

- Proof to request: secure coding standards, vulnerability management workflow, sample SBOM, incident response playbook, latest attestations or audit letters when applicable.
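When a vendor hands over a sample SBOM, a quick sanity check is to load it and see what it declares. This sketch assumes a CycloneDX JSON document, which has a top-level `bomFormat` field and a `components` array whose entries carry `name` and `version`. The `DENYLIST` is hypothetical and stands in for a real vulnerability feed or scanner.

```python
import json

# Hypothetical denylist of (name, version) pairs flagged by your security team.
# A real workflow would query a vulnerability database or run a scanner instead.
DENYLIST = {("example-lib", "1.2.0")}


def flagged_components(sbom_text: str) -> list[str]:
    """Return 'name@version' for top-level SBOM components on the denylist."""
    sbom = json.loads(sbom_text)
    if sbom.get("bomFormat") != "CycloneDX":
        raise ValueError("expected a CycloneDX JSON SBOM")
    hits = []
    # Only top-level components are checked; nested components are ignored here.
    for component in sbom.get("components", []):
        key = (component.get("name"), component.get("version"))
        if key in DENYLIST:
            hits.append(f"{key[0]}@{key[1]}")
    return hits


sample_sbom = json.dumps({
    "bomFormat": "CycloneDX",
    "specVersion": "1.5",
    "components": [
        {"type": "library", "name": "example-lib", "version": "1.2.0"},
        {"type": "library", "name": "other-lib", "version": "3.4.1"},
    ],
})
print(flagged_components(sample_sbom))
```

The script matters less than the signal. A vendor that can produce a machine‑readable SBOM on request usually has supply chain tooling in place.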

4) Cloud, DevOps, and reliability

- Infrastructure as Code, environment parity, and repeatable provisioning.

- Observability baked in: metrics, logs, and tracing, plus SLOs, error budgets, and clear on‑call expectations.

- Cost awareness and right‑sizing patterns, plus a plan for predictable environments.

- Proof to request: example Terraform or similar IaC snippet, runbook for a critical service, monitoring dashboards description, cost estimation assumptions.
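Error budgets are simple arithmetic once an SLO is set: the budget is the share of the window the SLO allows to be bad. The following is a minimal sketch, assuming a time‑based availability SLO measured over a 30‑day window. The targets in the loop are illustrative.

```python
def error_budget_minutes(slo_target: float, window_days: int = 30) -> float:
    """Minutes of allowed unavailability for a time-based availability SLO."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be a fraction, e.g. 0.999")
    window_minutes = window_days * 24 * 60
    return window_minutes * (1 - slo_target)


def budget_remaining(slo_target: float, downtime_minutes: float, window_days: int = 30) -> float:
    """Fraction of the error budget still unspent (negative means the SLO is breached)."""
    budget = error_budget_minutes(slo_target, window_days)
    return (budget - downtime_minutes) / budget


for target in (0.99, 0.999, 0.9999):
    print(f"{target:.2%} over 30 days -> {error_budget_minutes(target):.1f} min of budget")
```

For example, 99.9% over 30 days leaves about 43.2 minutes of budget (43,200 minutes × 0.001). Ask vendors what they do when a service has spent its budget.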

5) Data and API design

- Clear domain model boundaries, data quality rules, and retention policies.

- Stable API contracts, versioning strategy, pagination and rate limits, backward compatibility.

- Proof to request: a sample OpenAPI spec, data model diagram, performance or load test outline.
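Backward compatibility is easier to discuss with a concrete rule. A common rule of thumb is that, within one API version, you can add fields to a response, but removing a field or changing its type breaks existing clients. The sketch below applies that rule to two hypothetical response schemas written as plain field‑to‑type dictionaries. It is a simplification, not a substitute for OpenAPI diffing or contract tests.

```python
def breaking_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """List changes from old to new response schema that would break existing clients."""
    problems = []
    for field, old_type in old.items():
        if field not in new:
            problems.append(f"removed field '{field}'")
        elif new[field] != old_type:
            problems.append(f"field '{field}' changed type {old_type} -> {new[field]}")
    # Fields present only in `new` are additions, which this rule treats as safe.
    return problems


# Hypothetical schemas for an "order" response.
v1 = {"id": "string", "total": "number", "status": "string"}
v1_1 = {"id": "string", "total": "number", "status": "string", "currency": "string"}
v2 = {"id": "string", "total": "string"}

print(breaking_changes(v1, v1_1))
print(breaking_changes(v1, v2))
```

A vendor with a clear versioning strategy should be able to say which of these changes they would ship in place and which would require a new version.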

6) UX, accessibility, and performance

- Inclusive design and awareness of WCAG 2.2 AA, performance budgets, and design systems with component reuse.

- Proof to request: accessibility statement, example audit checklist, a Lighthouse budget or equivalent performance thresholds.
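In practice, a performance budget is a set of thresholds that is checked automatically, for example in CI. This sketch assumes measurements have already been collected, from Lighthouse or an equivalent tool, into a dictionary. The limits mirror the Core Web Vitals "good" thresholds. The metric key names and the staging measurements are hypothetical.

```python
# Limits mirror the Core Web Vitals "good" thresholds; set your own per page or journey.
BUDGET = {
    "largest_contentful_paint_ms": 2500,
    "interaction_to_next_paint_ms": 200,
    "cumulative_layout_shift": 0.1,
}


def over_budget(measured: dict[str, float], budget: dict[str, float] = BUDGET) -> dict[str, float]:
    """Return the metrics that exceed their budget, mapped to the measured value."""
    missing = budget.keys() - measured.keys()
    if missing:
        raise KeyError(f"missing measurements: {sorted(missing)}")
    return {name: measured[name] for name, limit in budget.items() if measured[name] > limit}


# Hypothetical measurements from a staging run.
staging_run = {
    "largest_contentful_paint_ms": 3100,
    "interaction_to_next_paint_ms": 150,
    "cumulative_layout_shift": 0.05,
}
print(over_budget(staging_run) or "within budget")
```

In a pipeline, a non‑empty result would fail the build. This is what turns a budget from a slide into a quality gate.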

7) Delivery management and communication

- Cadence of planning and demos, transparent risk logs, proactive stakeholder management, timezone overlap plan.

- Team composition clarity, seniority mix, backfills, and explicit stance on subcontractors.

- Proof to request: RACI and communication plan, sample status report, staffing plan with named roles.

8) Commercials, legal, and risk

- A pricing model that fits the uncertainty: time and materials for discovery, outcomes‑based or milestone pricing for later phases.

- Change control that does not punish learning, IP assignment and licensing clarity, data processing and privacy terms, warranties and acceptance criteria.

- Proof to request: SOW template with deliverables definition, sample change request, DPA template if relevant.

Run a hands‑on pilot to validate claims

Verbal assurances are not enough. Ask for a 2‑ to 4‑week pilot that tackles a thin vertical slice: a small end‑to‑end capability that exercises architecture, data, UI, deployment, and quality gates.

Pilot acceptance criteria to consider:

- Working software deployed to a staging environment you can access.

- Test and CI pipeline running on each commit, with meaningful checks.

- Lightweight docs: ADRs and a README that let a new engineer get set up in under 30 minutes.

- Observability in place for the slice, with basic metrics and logs visible.

- A demo with Q&A and a candid retro that surfaces risks and next steps.

Ask these verification questions

- What did you learn from a project that went sideways, and how did you adapt your process afterward?

- Show a before‑and‑after of a legacy modernization. What debt was retired, and how was risk controlled?

- Which DORA metrics do your teams track, how, and what did you change due to the data?

- How do you handle secrets, credentials, and dependency vulnerabilities across projects?

- What is your policy on AI‑assisted coding and data privacy, and how do you prevent IP leakage?

- When and why do you choose a modular monolith versus microservices?

For modernization‑heavy work, these deep dives help: Refactoring Legacy Software: From Creaky to Contemporary and Code Modernization Techniques: Revitalizing Legacy Systems.

Common red flags

- Vague proposals with a lot of tools and frameworks, but no outcome or risk framing.

- No code samples, no ADRs, and no CI screenshots, even under NDA.

- Every question is answered with microservices, serverless, or a favorite framework, regardless of your context.

- Security is framed as a late phase. No mention of OWASP ASVS, threat modeling, or SBOM.

- Senior team presented in sales, junior team staffed in delivery, bench is a mystery.

- “Fixed scope, fixed price” for ambiguous problems, with change control that penalizes learning.

A vendor scorecard you can reuse

Tailor weights to your priorities, then score vendors after the deep dive and pilot.

| Category | What good looks like | Proof to request | Weight |
|---|---|---|---|
| Discovery and product thinking | Outcomes, risks, lean backlog | Discovery agenda, outcomes hypothesis | 15 |
| Engineering fundamentals | Tests, ADRs, CI, reviews, clean boundaries | Repo excerpt, CI config, coverage summary | 20 |
| Security and compliance | Secure SDLC, SSDF, ASVS, SBOM, audits | Policies, SBOM, incident playbook, attestations | 15 |
| Cloud and DevOps | IaC, SLOs, observability, cost awareness | IaC snippet, runbook, dashboard overview | 10 |
| Data and APIs | Versioning, contracts, data quality | OpenAPI, schema, perf test plan | 10 |
| UX and accessibility | WCAG 2.2 AA, performance budgets | Accessibility checklist, perf budget | 5 |
| Delivery and communication | Cadence, risk transparency, staffing clarity | Status sample, RACI, staffing plan | 10 |
| Commercials and legal | Fair change control, IP clarity, DPA | SOW template, change request, DPA | 10 |
| References and outcomes | Relevant success with metrics | Two references with contact, case study | 5 |

Total weight 100.
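To turn the scorecard into a comparable number, score each category on a fixed scale and take the weighted average. The following is a minimal sketch, assuming a 1 to 5 scale per category and the weights from the table above. The vendor scores are made up for illustration.

```python
# Weights copied from the scorecard table; they sum to 100.
WEIGHTS = {
    "Discovery and product thinking": 15,
    "Engineering fundamentals": 20,
    "Security and compliance": 15,
    "Cloud and DevOps": 10,
    "Data and APIs": 10,
    "UX and accessibility": 5,
    "Delivery and communication": 10,
    "Commercials and legal": 10,
    "References and outcomes": 5,
}


def weighted_score(scores: dict[str, int], weights: dict[str, int] = WEIGHTS, max_score: int = 5) -> float:
    """Weighted score on a 0-100 scale, where 100 means max_score in every category."""
    if scores.keys() != weights.keys():
        raise ValueError("score every category exactly once")
    if any(not 1 <= s <= max_score for s in scores.values()):
        raise ValueError(f"scores must be between 1 and {max_score}")
    total_weight = sum(weights.values())
    return 100 * sum(weights[c] * scores[c] for c in weights) / (max_score * total_weight)


# Hypothetical vendor: solid overall, strong on engineering and security.
vendor_a = {c: 3 for c in WEIGHTS} | {"Engineering fundamentals": 5, "Security and compliance": 4}
print(f"Vendor A: {weighted_score(vendor_a):.1f}")
```

Keep the per‑category scores visible next to the total. A weighted average can hide a critical weakness, such as a poor security score, behind strengths elsewhere.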

RFI and RFP: keep them lean

- RFI goal: filter quickly. Ask for domain fit, team composition, security posture, and two case studies.

- RFP goal: get a structured solution proposal. Provide a crisp problem statement, constraints, key non‑functional requirements, a sample data model or API, compliance needs, and acceptance criteria examples.

- Require a 1 to 2 hour technical Q&A so vendors can clarify and avoid guesswork.

If you are planning a greenfield web app, this starter guide helps structure inputs: Web Apps Development: A Practical Starter Guide.

Validate portfolios and references the right way

- Speak with a sponsor and an engineer from the reference account. Ask what surprised them, and what they would change.

- Ask for change failure rate and lead time trends during the engagement. Look for improvement over time, not perfection.

- Verify who actually did the work, full‑time employees or subcontractors, and how continuity is handled.

AI usage and IP safety in 2025

- Ask for the vendor’s AI policy: which tools are allowed, what telemetry is disabled, and how prompts and code are protected.

- Require a stance on training data, license compliance, and how generated code is reviewed for legal and security risks.

- Ensure sensitive data never leaves approved boundaries, and that code assistants are used in enterprise modes when available.

Engagement models and staffing mechanics

- Time and materials fits discovery and evolving scope; milestone‑based pricing can work once risk is retired.

- Insist on visibility into named roles, seniority mix, and a plan for backfills and holidays.

- Clarify timezone overlap expectations and meeting cadence.

Contract must‑haves

- Clear deliverables and acceptance criteria, with objective tests where possible.

- IP assignment, open‑source license compliance, and third‑party licensing responsibilities.

- Data Processing Agreement when needed, breach notification timelines, and security obligations.

- Service levels and incident response expectations for production support.

- Reasonable termination rights and knowledge transfer obligations to avoid lock‑in.

Total cost of ownership, not just day rates

- Environment and infrastructure costs: environments per stage, data storage, observability, and CI runners.

- Ongoing support, warranties, bug fixes, and maintenance posture.

- Paid discovery and pilots that retire risk early often reduce the overall spend.
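A back‑of‑the‑envelope comparison shows why day rates alone mislead. The sketch below adds build effort to recurring infrastructure and support costs over a fixed horizon. The `Proposal` fields are hypothetical inputs, and every figure is a placeholder rather than market data.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    """Hypothetical cost inputs taken from one vendor's proposal."""
    day_rate: float
    build_days: float
    monthly_infra: float  # environments, storage, observability, CI runners
    monthly_support: float  # maintenance, bug fixes, work outside the warranty

    def tco(self, months: int) -> float:
        """Build cost plus recurring costs over the given horizon."""
        return self.day_rate * self.build_days + months * (self.monthly_infra + self.monthly_support)


# Placeholder figures: a lower day rate paired with more effort and heavier upkeep.
vendor_a = Proposal(day_rate=400, build_days=600, monthly_infra=3000, monthly_support=6000)
vendor_b = Proposal(day_rate=700, build_days=300, monthly_infra=2000, monthly_support=3000)
for name, p in [("Vendor A (lower rate)", vendor_a), ("Vendor B (higher rate)", vendor_b)]:
    print(f"{name}: {p.tco(months=24):,.0f} over 24 months")
```

With these placeholder inputs, the higher day rate yields the lower two‑year total. Leaner delivery and lower upkeep outweigh the rate difference.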

Where Wolf‑Tech fits

Wolf‑Tech specializes in custom digital solutions, full‑stack development, legacy code optimization, code quality consulting, and cloud and DevOps expertise. If you want a second set of eyes on a proposal, a code quality assessment, or a modernization approach, we can help you de‑risk before you commit. Explore our engineering approach in Next.js Best Practices for Scalable Apps, and see how we think about modernization in Refactoring Legacy Applications: A Strategic Guide.

Frequently Asked Questions

How many vendors should I evaluate? Three to five is usually enough to see meaningful differences without analysis paralysis.

What if vendors refuse to share code samples? Ask for sanitized excerpts under NDA or a small pilot repository. If they still refuse, that is a signal.

Do I need a fixed price to control budget? Fixed price can work for well‑defined scopes. For discovery or innovation, time and materials with tight milestones and demos often delivers better outcomes.

Which certifications matter most? It depends on your data and industry. SOC 2 Type II or ISO/IEC 27001 are common for security management. Map app‑level controls to OWASP ASVS and secure SDLC guidance like NIST SSDF.

How long should a pilot be? Two to four weeks is typical. The goal is to validate fit and working modes, not to build production scope.

How do I compare nearshore versus offshore versus onshore? Optimize for overlap hours, communication quality, and seniority continuity. Rates are only one part of the equation.

What KPIs should I ask for during delivery? Track lead time, deployment frequency, change failure rate, and time to restore. Add SLOs for key user journeys.

What is a reasonable warranty? Many vendors offer a defects warranty window. Clarify scope, timelines for fixes, and how severity is classified in your SOW.

Ready to de‑risk your vendor choice?

Use the scorecard above to run a consistent process, validate with a hands‑on pilot, and ask for evidence that connects to your outcomes. If you want help pressure testing proposals or shaping a low‑risk delivery plan, talk to Wolf‑Tech. We bring 18 years of full‑stack experience, code quality consulting, legacy optimization, and cloud and DevOps expertise to help you pick the right path. Start the conversation at Wolf‑Tech.