Custom Software Outsourcing: Risks and Best Practices

Custom software outsourcing can be a force multiplier. It can also become the most expensive way to learn that your requirements were fuzzy, your quality bar was implicit, and your risk controls were assumed rather than implemented.

In 2026, outsourcing risk is less about “time zones” and more about operating a distributed delivery system: aligning outcomes, designing the right interface between teams, and making security, quality, and operability measurable from week one.

What “custom software outsourcing” actually includes

Most teams use “outsourcing” to mean one of three models (and mixing them without clarity is where problems start):

- Project delivery: the vendor owns delivery for a defined scope, with shared product ownership.

- Team augmentation: external engineers join your team, and you own delivery and architecture decisions.

- Hybrid / capability-based: outsource a bounded domain (for example, payments integration, data pipeline, mobile app) with explicit contracts and interfaces.

The right model depends on how well-defined the problem is, how critical the system is, and how much internal leadership you can realistically allocate.

A useful rule: the more business-critical and ambiguous the work, the more you want internal product and architecture ownership, even if execution is external.

The real risks of custom software outsourcing (and how they show up)

Outsourcing risks are predictable. The mistake is treating them as “vendor issues” instead of system design issues. The table below maps common failure modes to concrete mitigations and the evidence you should request.

| Risk category | How it shows up in real projects | Best-practice mitigation | Evidence to ask for |
|---|---|---|---|
| Misaligned outcomes | Velocity looks good, but business impact is unclear, roadmap churn, constant rework | Define measurable outcomes and “definition of done” that includes quality and operability | One-page outcome brief, acceptance criteria, release gates |
| Requirements gap | “Done” means different things to different people, edge cases missed, UAT becomes a spec-writing phase | Time-boxed discovery and a thin vertical slice before scaling | Discovery deliverables, demo of vertical slice, updated backlog |
| Architecture drift | Inconsistent patterns, brittle coupling, performance regressions, hard-to-change code | Architecture guardrails (ADRs, reference patterns, review checkpoints) | ADR log, reference repo, architecture review notes |
| Quality debt | Bugs pile up, QA becomes a bottleneck, releases slow down | CI with automated tests, code review standards, quality metrics | CI pipeline, coverage approach, static analysis, PR policy |
| Security gaps | Late “penetration test panic”, secrets in repos, weak authZ | Security-by-design aligned to known standards | Secure SDLC notes, threat model, OWASP plan, dependency scanning |
| Supply-chain risk | Unvetted dependencies, compromised packages, opaque builds | SBOM, provenance, signed artifacts where appropriate | SBOM approach, SCA tooling, build provenance plan |
| Vendor lock-in | Only the vendor can deploy, docs are thin, key knowledge lives in Slack | Ownership rules, documentation minimums, runbooks, pairing | Runbooks, onboarding doc, “you can deploy it” test |
| Cost overrun | Scope expands, change requests escalate, you pay for uncertainty | Incremental funding with milestone-based checkpoints | Milestone plan, burn-up/burn-down, change control |
| Compliance/regulatory miss | Audit requests become last-minute fire drills | Map controls early (logging, retention, access, SDLC evidence) | Control mapping doc, access logs, audit-ready SDLC artifacts |

For security and application risks, it helps to anchor your expectations to widely used references like the OWASP Top 10 and the NIST Secure Software Development Framework (SSDF). You do not need heavyweight bureaucracy, but you do need explicit controls.

Best practices before you sign (where most risk is won or lost)

1) Write an outcome brief, not a feature wishlist

A strong outsourcing engagement starts with a short document that answers:

- What business outcome are we driving, and how will we measure it?

- What are the non-functional requirements (availability, latency, privacy, auditability, RTO/RPO)?

- What integrations, data sources, and operational constraints exist today?

If you want a deeper approach to scoping and value, Wolf-Tech’s guide on turning requirements into outcomes is a useful companion: Business Software Development: From Requirements to Value.

2) Choose an engagement model that matches uncertainty

The most common mismatch is using a fixed-scope commercial structure for a high-uncertainty problem. If you are still discovering the solution, optimize for learning and control.

A practical approach:

- Use time and materials for discovery and early iterations.

- Introduce milestone-based checkpoints once uncertainty drops.

- Keep a clear change control mechanism that protects both sides.

If you need budgeting guidance, see: Custom Software Development: Cost, Timeline, ROI.

3) Run a paid pilot that includes real engineering

Slide decks do not predict delivery. A short pilot (often 2 to 4 weeks) should prove that the vendor can:

- Work in your repo and toolchain (or set one up cleanly)

- Ship a thin vertical slice with CI, tests, and review practices

- Communicate clearly and surface risks early

If you want a structured way to evaluate vendors without turning it into a months-long procurement exercise, you can reuse the approach from How to Vet Custom Software Development Companies.

4) Decide IP, data, and AI usage rules explicitly

In 2026, you should assume AI-assisted development will occur somewhere in the chain. That is not automatically bad, but it must be governed.

Clarify in writing:

- What data can be used in AI tools (and what cannot)

- How proprietary code and customer data are handled

- Who owns work product, including generated artifacts

- How third-party licenses are managed

If your organization is regulated or handles sensitive data, you may also need controls for model access, prompt logging, and retention.

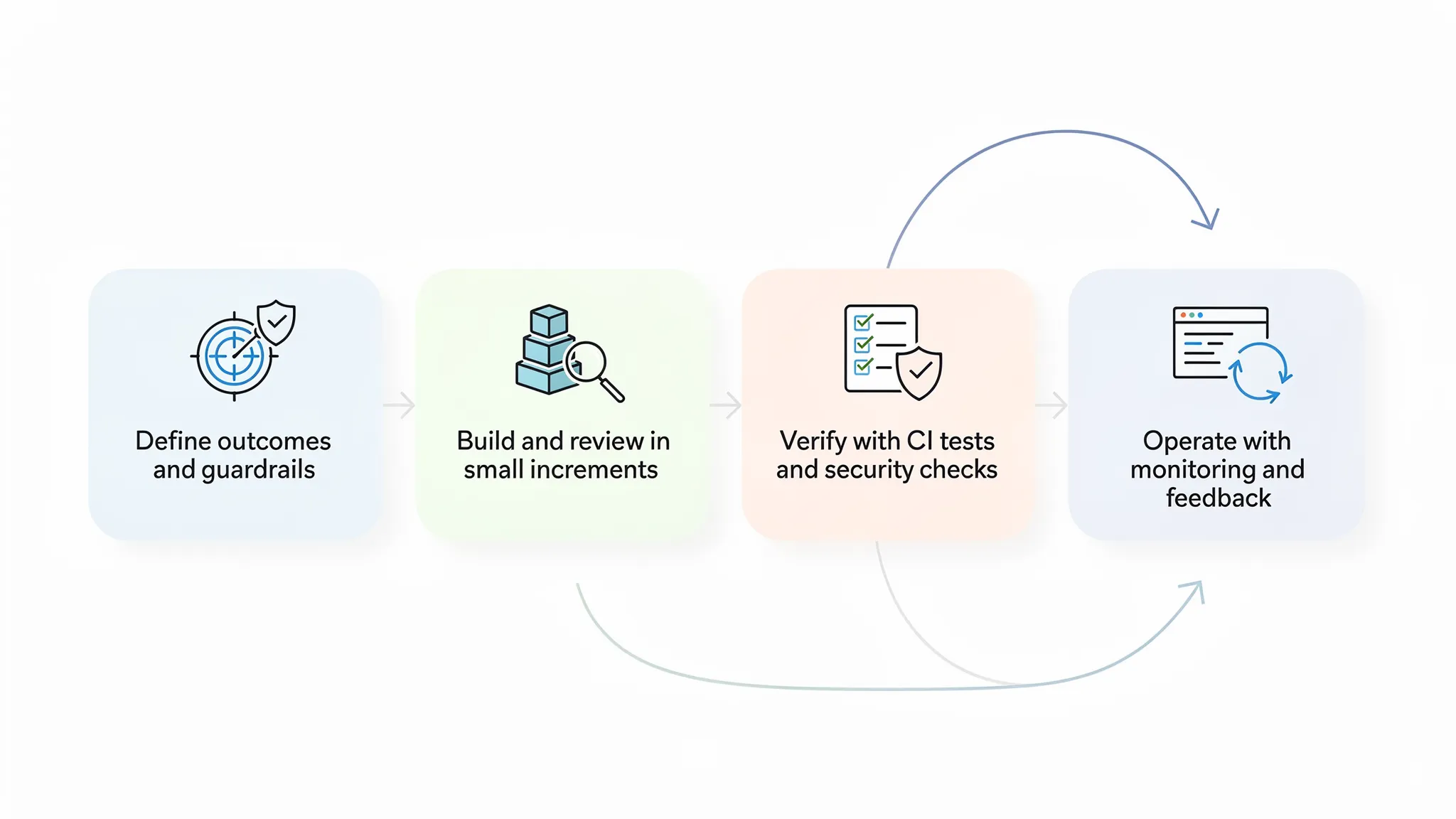

Best practices during delivery (how to keep control without micromanaging)

Establish a lightweight governance cadence

Outsourcing works when decisions are fast, priorities are stable enough for delivery, and risks are surfaced early.

A practical governance setup looks like this:

| Meeting / artifact | Frequency | Purpose | Output |
|---|---|---|---|
| Delivery sync | 2 to 3 times per week | Unblock work, coordinate dependencies | Updated tasks, risk list |
| Product review / demo | Weekly | Validate outcomes, prevent misbuild | Recorded demo, acceptance notes |
| Architecture and quality review | Biweekly | Keep consistency and manage technical risk | ADRs, action items |
| Security and compliance check | Monthly (or per release) | Ensure controls are real, not aspirational | Evidence, fixes queued |
| Steering checkpoint | Monthly | Confirm ROI and direction at the executive level | Decision log, roadmap update |

The key is that decisions are documented (briefly) and the backlog reflects those decisions.

Make quality measurable (and shared)

A vendor cannot “care about quality” into existence. You need a delivery system that makes quality visible.

At minimum, require:

- Pull request reviews with explicit standards (small PRs, test expectations, security review triggers)

- CI that runs on every change

- A testing strategy that includes unit tests plus higher-level tests where it matters (integration/contract)

- A release process that includes rollback and incident ownership
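
The pull request standards above can be turned into an automated check. The following is a minimal sketch, not a definitive implementation: the `PullRequest` record, the thresholds, and the `src/` and `tests/` path layout are all assumptions made for illustration, and in practice the data would come from your Git host.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    """Hypothetical summary of a pull request (not a real Git host API type)."""
    changed_lines: int
    changed_files: list[str]
    approvals: int


# Assumed policy values; tune them to your own standards.
MAX_CHANGED_LINES = 400
MIN_APPROVALS = 1
SECURITY_SENSITIVE_PREFIXES = ("src/auth/", "src/payments/")


def policy_violations(pr: PullRequest) -> list[str]:
    """Return the names of the policy rules this pull request breaks."""
    violations = []
    if pr.changed_lines > MAX_CHANGED_LINES:
        violations.append("too_large")
    if pr.approvals < MIN_APPROVALS:
        violations.append("missing_approval")
    touches_source = any(p.startswith("src/") for p in pr.changed_files)
    touches_tests = any(p.startswith("tests/") for p in pr.changed_files)
    if touches_source and not touches_tests:
        violations.append("no_tests_changed")
    return violations


def needs_security_review(pr: PullRequest) -> bool:
    """True when the change touches a path flagged as security-sensitive."""
    return any(p.startswith(SECURITY_SENSITIVE_PREFIXES) for p in pr.changed_files)


risky = PullRequest(changed_lines=650, changed_files=["src/auth/login.py"], approvals=0)
clean = PullRequest(
    changed_lines=120,
    changed_files=["src/billing/invoice.py", "tests/test_invoice.py"],
    approvals=1,
)
print(policy_violations(risky), needs_security_review(risky))
print(policy_violations(clean), needs_security_review(clean))
```

A check like this works best as a CI gate that both teams can read and change, so the standard stays shared rather than imposed.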

Tracking a small set of delivery metrics helps you see trouble early. DORA-style metrics (lead time, deploy frequency, change failure rate, time to restore) are a widely used baseline, documented by DORA (DevOps Research and Assessment).
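
As a rough illustration of how these four metrics could be computed from deployment records, here is a minimal sketch. The `Deployment` record shape and the sample data are assumptions for illustration; real numbers would come from your CI/CD and incident tooling, and DORA's own definitions should take precedence over this simplification.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a production failure?
    restored_at: Optional[datetime] = None   # when service was restored, if it failed


def dora_summary(deployments: list[Deployment], window_days: int) -> dict:
    """Summarize four DORA-style metrics over a reporting window."""
    if not deployments:
        raise ValueError("need at least one deployment")
    lead_hours = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600 for d in deployments
    ]
    failures = [d for d in deployments if d.failed]
    restore_hours = [
        (d.restored_at - d.deployed_at).total_seconds() / 3600
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "median_lead_time_hours": median(lead_hours),
        "deploys_per_week": len(deployments) / (window_days / 7),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore_hours": median(restore_hours) if restore_hours else None,
    }


# Made-up sample data for a two-week window.
start = datetime(2026, 1, 5, 9, 0)
sample = [
    Deployment(start, start + timedelta(hours=2)),
    Deployment(start, start + timedelta(hours=4), failed=True,
               restored_at=start + timedelta(hours=5)),
    Deployment(start, start + timedelta(hours=6)),
    Deployment(start, start + timedelta(hours=8)),
]
summary = dora_summary(sample, window_days=14)
print(summary)
```

Reviewing a summary like this in the steering checkpoint gives both sides the same view of the trend and leaves less room for arguing over anecdotes.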

Treat security as a build feature, not a phase

Security “at the end” is where outsourcing engagements go to die: delayed launches, expensive rework, and finger-pointing.

Instead, define security requirements up front and implement them continuously:

- Threat modeling for critical flows (auth, payments, admin actions, data export)

- Dependency scanning and patch policy

- Secrets management (no secrets in code or CI logs)

- Secure defaults for authN/authZ and data access
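
To make the "no secrets in code" rule concrete, the sketch below scans text for a few secret-like patterns. It is illustrative only: the three patterns are assumptions chosen for the example, and a maintained secret-scanning tool with a full rule set should do this job in a real pipeline.

```python
import re

# Illustrative patterns only; real scanners ship far larger, maintained rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*[:=]\s*['\"][^'\"]{8,}['\"]"),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every line that matches a pattern."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, name))
    return findings


# The key below is the placeholder value used in AWS documentation, not a real credential.
sample_config = (
    'region = "eu-west-1"\n'
    'aws_key = "AKIAIOSFODNN7EXAMPLE"\n'
    'password = "correct-horse-battery"\n'
)
findings = find_secrets(sample_config)
print(findings)
```

Running a check like this on every change, and failing the build on any finding, stops a leaked credential before it reaches the main branch instead of leaving it for a later audit to find.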

For software supply-chain integrity, you can align build and dependency practices with SLSA concepts, even if you do not adopt the entire framework.

Operability is part of “done”

Many outsourced builds fail not because the code is “wrong,” but because the system cannot be safely operated.

Include operability in acceptance:

- Structured logs, metrics, and tracing for key user journeys

- SLOs (for example, availability and latency targets) and alerting

- Runbooks for common failure scenarios

- A tested deployment and rollback procedure
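
An availability SLO becomes easier to negotiate once it is translated into an error budget. The arithmetic can be sketched as follows; the function names and the 30-day window are assumptions for illustration, not a prescribed method.

```python
def error_budget_minutes(slo_target: float, window_days: int = 30) -> float:
    """Minutes of allowed unavailability for an availability SLO over a window."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be a fraction between 0 and 1, e.g. 0.999")
    total_minutes = window_days * 24 * 60
    return total_minutes * (1 - slo_target)


def budget_remaining(slo_target: float, downtime_minutes: float,
                     window_days: int = 30) -> float:
    """Fraction of the error budget still unspent (negative means the SLO is breached)."""
    budget = error_budget_minutes(slo_target, window_days)
    return 1 - downtime_minutes / budget


budget = error_budget_minutes(0.999)        # 99.9% availability over 30 days
remaining = budget_remaining(0.999, 21.6)   # after 21.6 minutes of downtime
print(f"budget: {budget:.1f} min, remaining: {remaining:.0%}")
```

A 99.9% target over 30 days leaves roughly 43 minutes of allowed downtime, which is a far more concrete acceptance criterion than "high availability".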

If reliability is critical, you can go deeper with Wolf-Tech’s reliability playbook: Backend Development Best Practices for Reliability.

Contract and commercial guardrails (practical, not legal advice)

Contracts do not create trust, but they prevent predictable disputes. The goal is to make the working relationship enforce the behaviors you need.

| Area | What to clarify | Why it matters |
|---|---|---|
| Deliverables and acceptance | Clear acceptance criteria including non-functional and operational requirements | Prevents “it works on my machine” disputes |
| IP ownership | Assignment language for code, docs, and designs, plus license boundaries | Reduces future legal and dependency risk |
| Security obligations | Secure SDLC expectations, vulnerability remediation timelines, breach notification | Makes security a shared responsibility |
| Subprocessors and staffing | Disclosure of subcontracting, key-person dependencies, replacement policy | Avoids surprise team changes and hidden third parties |
| Data handling | Access controls, environments, anonymization rules, retention | Prevents accidental data exposure |
| Exit and transition | Handover period, documentation deliverables, access transfer, escrow if needed | Ensures you can continue without disruption |
| Change control | How scope changes are proposed, estimated, and approved | Prevents cost blowups and resentment |

A practical tip: include a transition readiness test as a milestone, for example “your internal team can deploy and troubleshoot with the vendor acting only in a support role.” This forces knowledge transfer to happen continuously rather than in a frantic final week.

Preventing vendor lock-in without going full bureaucracy

“Lock-in” often happens because the vendor becomes the only team that knows how things work and the only team with the keys.

You can reduce this with a few simple rules:

- Your organization owns the source repositories, cloud accounts, and CI/CD identity (with least-privilege access for the vendor).

- Documentation is part of delivery (runbooks, architecture notes, onboarding steps).

- Use ADRs for material decisions so future engineers understand why choices were made.

- Rotate pairing sessions between vendor engineers and internal engineers, especially on deployment, on-call concerns, and key domain logic.

This is not about distrust. It is about designing a resilient operating model.

Early warning signals your outsourcing engagement is drifting

Most outsourcing failures are visible by weeks 3 to 6, but teams rationalize the signals.

Watch for:

- Demos that focus on UI progress but avoid end-to-end flows, real data, or production-like conditions

- “We will add tests later” or “we will harden later” as a repeated pattern

- Large PRs with long review cycles and unclear ownership

- Repeated rework because acceptance criteria are interpreted differently

- Unclear release readiness (no rollback plan, no monitoring, no incident process)

If you see these, do not jump straight to replacing the vendor. First, correct the system: tighten acceptance criteria, reduce batch size, enforce CI gates, and reset roles and decision rights.

A pragmatic 30-day kickstart plan for outsourcing

This is a simple way to start with control, not chaos:

| Time window | Focus | Concrete outputs |
|---|---|---|
| Week 1 | Alignment | Outcome brief, initial backlog, non-functional requirements, access model |
| Week 2 | Foundation | Repo setup, CI pipeline, coding standards, environment plan, initial ADRs |
| Week 3 | Vertical slice | One thin end-to-end flow in production-like conditions, basic monitoring |
| Week 4 | Scale decision | Review pilot results, adjust engagement model, lock next milestones |

If the team cannot produce a credible vertical slice with sane engineering hygiene in the first month, scaling the team usually scales the problem.

When to bring in a third party (even if you keep your vendor)

Sometimes the best move is not “fire the vendor,” but “add an independent checkpoint.” A short assessment can de-risk:

- Legacy code and modernization plans

- Architecture and scalability decisions

- CI/CD and release reliability

- Code quality and maintainability

- Security and compliance evidence

Wolf-Tech supports teams with full-stack development and code quality consulting, including legacy optimization and modernization. If you want a second opinion on an outsourcing setup (vendor selection, pilot design, architecture guardrails, or delivery system), you can start with a focused assessment via wolf-tech.io.