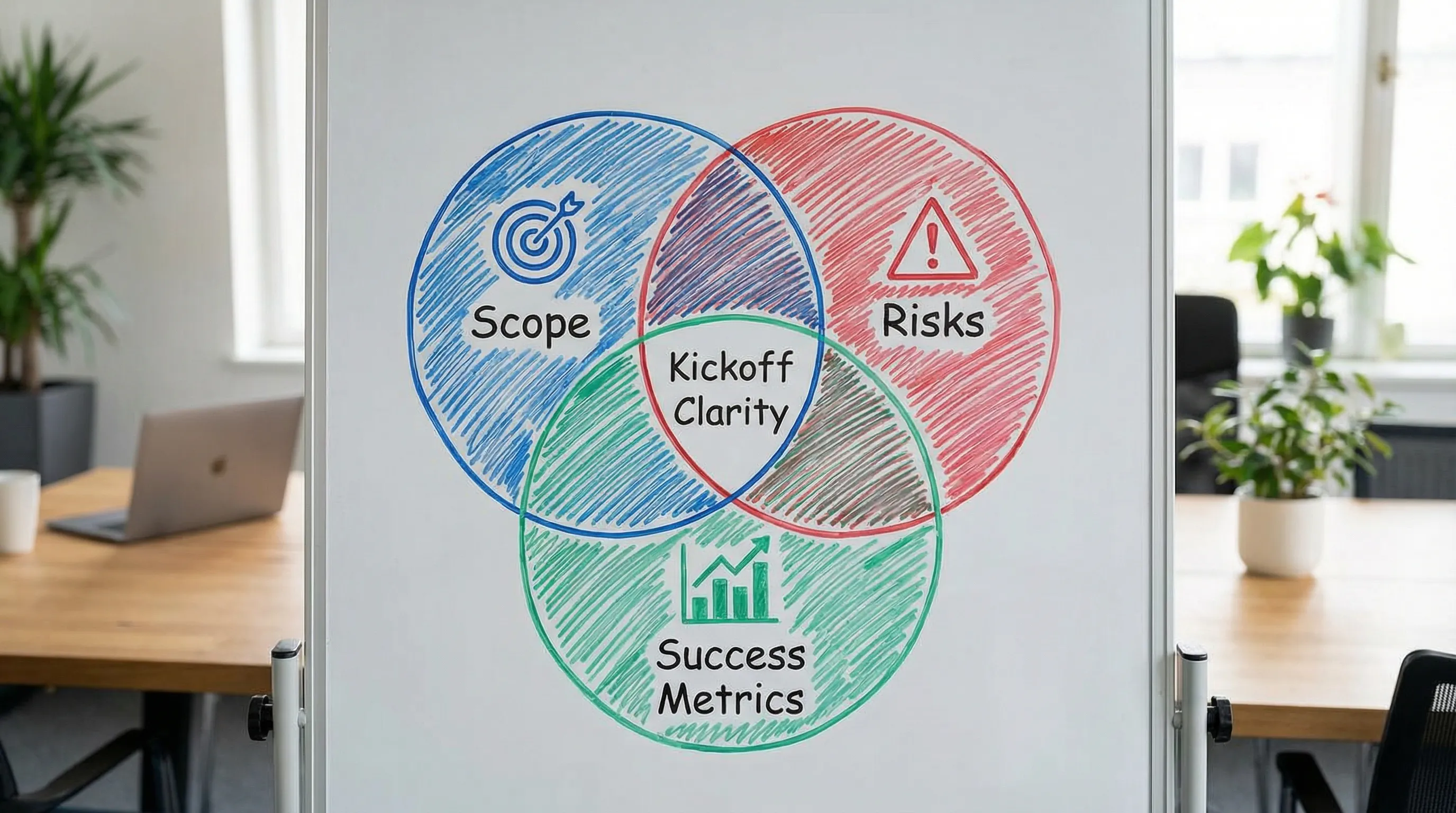

Software Project Kickoff: Scope, Risks, and Success Metrics

A software project kickoff is where you either buy speed with clarity, or you quietly buy rework with ambiguity.

Most “project failures” are not caused by a single bad sprint. They come from three avoidable problems that show up on day one:

- Scope that is not testable (everyone agrees in the meeting, nobody agrees two weeks later)

- Risks that are known but unnamed (so they never get mitigated)

- Success metrics that are implied (so progress becomes subjective)

This guide gives you a practical kickoff structure focused on scope, risks, and success metrics, with templates you can reuse for internal teams and vendor-led delivery.

What a software project kickoff is (and is not)

A kickoff is not a slide deck about “how we work,” and it is not a detailed requirements workshop.

A good kickoff is a decision-making session that produces artifacts the team will actually use in the first 2 to 6 weeks:

- A scope statement that can be validated against shipped work

- A risk register with owners and near-term mitigations

- A measurement plan (business outcomes, delivery health, and production outcomes)

- A shared operating rhythm (how decisions happen, what “done” means, how releases are handled)

If your project involves multiple stakeholders, external dependencies, regulated data, or legacy integration, the kickoff is your highest-leverage meeting.

Scope: make it testable, not inspirational

The kickoff goal is to move from “we want X” to “we will ship Y by date Z, under constraints C, and we will know it worked when metric M moves.”

Start with outcomes, then constrain the slice

Ask for one measurable outcome per primary stakeholder. Examples:

- Reduce onboarding time from 20 minutes to 8 minutes

- Increase quote-to-cash throughput by 30 percent

- Replace a manual CSV process with an audited workflow

Then constrain scope with a thin vertical slice that reaches production. If you want a deeper playbook for this approach, Wolf-Tech’s guide on a practical delivery process is a good companion: Software Building: A Practical Process for Busy Teams.

Define boundaries explicitly (in-scope, out-of-scope, and “later”)

Scope creep often comes from category errors. Someone asks for a “small change” that is actually:

- A new role and permission model

- A new integration contract

- A new audit or reporting requirement

- A new performance expectation

At kickoff, document what is out of scope with the same seriousness as what is in scope. “Later” items should be captured, but not promised.

Capture non-functional requirements early

Non-functional requirements (NFRs) are scope. They change architecture, cost, and timelines.

Typical NFRs to clarify in kickoff:

- Availability and reliability targets (SLOs)

- Performance budgets (latency, concurrency)

- Security and compliance constraints (PII, PCI, SOC 2 expectations)

- Data retention and auditability

- Deployment frequency expectations

Wolf-Tech covers this “measurable NFRs first” mindset in multiple articles, including Developing Software Solutions That Actually Scale.

A scope statement template you can reuse

Use a one-page scope statement that is short enough to be read weekly.

| Scope element | What “good” looks like | Example prompt for kickoff |
|---|---|---|
| Outcome | Measurable change, not a feature list | “What changes for users or the business?” |
| Primary users | Named personas and their jobs | “Who is the primary user on day one?” |
| In-scope capabilities | Verbs, not modules | “Users can submit, approve, and export…” |
| Out-of-scope | Explicit exclusions | “No custom reporting in phase 1” |
| Constraints | Time, compliance, tech, vendors | “Must use existing IdP and audit trail” |
| Dependencies | Systems, teams, vendors | “ERP integration contract not yet confirmed” |
| Acceptance signals | Evidence, not opinions | “Demo + automated tests + prod telemetry” |

If you already have an MVP idea, you can cross-check it against a more detailed readiness list like Building Apps: MVP Checklist for Faster Launches.
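
One way to keep the scope statement honest is to store it as structured data and check it for empty rows before the kickoff memo goes out. The sketch below is only an illustration: the `ScopeStatement` class, its field names, and the "New customer admin" persona are assumptions made for this example, and which rows count as required is a judgment call.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ScopeStatement:
    """One-page scope statement; fields mirror the template rows (hypothetical names)."""
    outcome: str
    primary_users: List[str]
    in_scope: List[str]
    out_of_scope: List[str]
    constraints: List[str] = field(default_factory=list)
    dependencies: List[str] = field(default_factory=list)
    acceptance_signals: List[str] = field(default_factory=list)

    def gaps(self) -> List[str]:
        """Return the required template rows that are still empty."""
        required = {
            "outcome": self.outcome.strip(),
            "primary_users": self.primary_users,
            "in_scope": self.in_scope,
            "out_of_scope": self.out_of_scope,
            "acceptance_signals": self.acceptance_signals,
        }
        return [name for name, value in required.items() if not value]


draft = ScopeStatement(
    outcome="Reduce onboarding time from 20 minutes to 8 minutes",
    primary_users=["New customer admin"],  # hypothetical persona
    in_scope=["Users can submit, approve, and export"],
    out_of_scope=[],  # nothing excluded yet, which the check should flag
)
print(draft.gaps())  # ['out_of_scope', 'acceptance_signals']
```

An empty out-of-scope row is treated as a gap on purpose: it usually means the exclusions were never discussed, not that there are none.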

Risks: name them early, then buy them down

A kickoff should include a short pre-mortem: “It’s 90 days from now and this project disappointed everyone. What happened?”

Then translate the answers into a risk register with owners.

Risk categories that matter in real projects

Most risks fall into a few buckets. Labeling the bucket helps you pick the right mitigation.

| Risk category | Typical early signal | Practical mitigation (kickoff-level) |
|---|---|---|
| Product risk | Stakeholders disagree on “done” | Write acceptance signals, define a thin slice, set a change control rule |
| Technical risk | Unknown legacy behavior | Run a vertical slice against real environments, prioritize seams and contract tests |
| Integration risk | External API unclear or unstable | Request sandbox access, define API contracts, create mocks and failure-mode tests |
| Delivery risk | Part-time SMEs, unclear decision rights | Assign DRIs, set a weekly decision forum, define escalation paths |
| Security/compliance risk | Data classification unknown | Classify data, choose baseline controls, align on evidence needed for audits |
| Operational risk | “We’ll add monitoring later” | Define SLIs/SLOs, log/trace requirements, and rollback strategy before launch |
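
A risk register does not need a dedicated tool. The sketch below shows one possible shape for it, with risk, category, owner, and next mitigation step as fields. The 1 to 3 likelihood and impact scores, the `exposure` ranking, and the owner names are assumptions added for illustration; use whatever scoring your team already trusts.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Risk:
    description: str
    category: str            # e.g. "integration", "delivery", "operational"
    owner: Optional[str]     # a named person; None means nobody owns it yet
    next_mitigation: str     # the next concrete step, not a general intention
    likelihood: int          # 1 (unlikely) to 3 (likely); illustrative scale
    impact: int              # 1 (minor) to 3 (severe); illustrative scale

    @property
    def exposure(self) -> int:
        """Simple ranking score: likelihood times impact."""
        return self.likelihood * self.impact


def unowned(register: List[Risk]) -> List[Risk]:
    """Risks that nobody has been assigned to."""
    return [risk for risk in register if not risk.owner]


def by_exposure(register: List[Risk]) -> List[Risk]:
    """Highest-exposure risks first, so they get mitigated in early iterations."""
    return sorted(register, key=lambda risk: risk.exposure, reverse=True)


register = [
    Risk("Part-time SMEs slow down decisions", "delivery",
         "Dana", "Set a weekly decision forum", likelihood=2, impact=2),
    Risk("ERP integration contract not yet confirmed", "integration",
         None, "Request sandbox access", likelihood=3, impact=3),
    Risk("Monitoring deferred until after launch", "operational",
         "Lee", "Define SLIs/SLOs before launch", likelihood=2, impact=3),
]

for risk in by_exposure(register):
    print(risk.exposure, risk.category, risk.owner or "NO OWNER")
```

Running `unowned(register)` at the end of the kickoff is a cheap way to make sure every named risk leaves the room with an owner.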

If your project has meaningful delivery-system risk (slow merges, manual deployments, unclear release controls), a kickoff is the right time to set expectations for CI/CD and release safety. For reference, see CI CD Technology: Build, Test, Deploy Faster.

Use “risk burn-down” work, not just documentation

A risk register is only useful if it drives work in the first iterations. In practice, the highest-ROI mitigations tend to be:

- A production-grade thin slice (real auth, real data shape, real deployment path)

- Contract tests for the most failure-prone integrations

- Observability baseline (logs, metrics, tracing) before feature breadth

- Clear rollout controls (feature flags, canary or phased release, rollback plan)
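
As an illustration of the rollout controls in the last item, here is a minimal sketch of a deterministic percentage rollout. It is not how any particular feature-flag product works; the function name `in_rollout`, the flag name, and the user IDs are all hypothetical.

```python
import hashlib


def in_rollout(flag: str, user_id: str, percent: int) -> bool:
    """Deterministically place a user in or out of a phased rollout.

    The same flag and user always map to the same bucket (0 to 99), so raising
    `percent` only adds users, and setting it to 0 switches the flag off for all.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < percent


users = [f"user-{n}" for n in range(1000)]  # hypothetical user IDs
canary = [u for u in users if in_rollout("new-onboarding", u, 5)]
wider = [u for u in users if in_rollout("new-onboarding", u, 25)]
print(len(canary), len(wider))
```

Because bucketing depends only on the flag and the user, everyone in the 5 percent canary stays enabled when the rollout widens to 25 percent, which keeps the user experience stable between phases.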

For operational safety patterns that reduce blast radius, the Google SRE book is a credible reference, especially around SLO thinking.

Success metrics: agree on leading indicators and lagging outcomes

Projects drift when “progress” means different things to different people. Your kickoff should align on a measurement stack that answers three questions:

- Are we delivering effectively?

- Is the product working in production?

- Is the business outcome improving?

Delivery metrics (engineering health)

Delivery metrics are not the goal, but they predict whether you can adapt.

The most widely used set is DORA, popularized by the research behind Accelerate and continued through Google Cloud’s DORA research program. These metrics measure speed and stability together (see the DORA research).

Use DORA-style metrics in kickoff when you need to make delivery constraints explicit:

- Deployment frequency

- Lead time for changes

- Change failure rate

- Time to restore service
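
If you want a baseline for these four metrics before the first sprint, they can be derived from a simple list of deployment records. The sketch below assumes a hypothetical `Deployment` record and made-up timestamps. It also simplifies time to restore by measuring from the moment of deployment, which assumes the failure began when the change went live.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # caused a production failure
    restored_at: Optional[datetime] = None  # when service was restored


def dora_summary(deployments: List[Deployment], period_days: int) -> dict:
    """Summarize DORA-style metrics for one observation period."""
    if not deployments:
        raise ValueError("need at least one deployment to summarize")
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    restores = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployments_per_week": len(deployments) / (period_days / 7),
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore": median(restores) if restores else None,
    }


t0 = datetime(2024, 1, 1, 9, 0)  # made-up start of a two-week window
history = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=10)),
    Deployment(t0 + timedelta(days=5), t0 + timedelta(days=6), failed=True,
               restored_at=t0 + timedelta(days=6, minutes=45)),
    Deployment(t0 + timedelta(days=9), t0 + timedelta(days=9, hours=6)),
]
summary = dora_summary(history, period_days=14)
print(summary["deployments_per_week"])    # 2.0
print(summary["median_lead_time"])        # 8:00:00
print(summary["change_failure_rate"])     # 0.25
print(summary["median_time_to_restore"])  # 0:45:00
```

Medians are used instead of means so that a single unusually slow change does not dominate the baseline.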

Production metrics (reliability and performance)

Define at least one service level objective (SLO) per user-critical flow. Keep it simple.

Examples:

- “Checkout API: 99.9 percent success rate weekly, p95 latency under 400 ms”

- “Report generation: 95 percent completed under 2 minutes”

Tie these to an error budget concept if you can, even informally. It creates a rational way to decide when to pause feature work to fix reliability.
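
The arithmetic behind an informal error budget is small enough to sketch. The example below assumes a request-based SLO such as the 99.9 percent weekly success rate above; the traffic numbers are invented, and the rule "pause feature work once the budget is spent" is one possible policy, not a standard.

```python
from fractions import Fraction


def error_budget(slo_target: str, total_requests: int, failed_requests: int) -> dict:
    """Compare observed failures with the failures an SLO allows.

    `slo_target` is a decimal string such as "0.999". Fraction keeps the
    arithmetic exact and avoids float rounding on values like 1 - 0.999.
    """
    target = Fraction(slo_target)
    if not 0 < target < 1:
        raise ValueError("slo_target must be strictly between 0 and 1")
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    allowed_failures = (1 - target) * total_requests
    consumed = Fraction(failed_requests) / allowed_failures
    return {
        "allowed_failures": float(allowed_failures),
        "budget_consumed": float(consumed),
        "pause_feature_work": consumed >= 1,  # illustrative policy only
    }


# Invented week: 2,000,000 checkout requests, 1,500 of them failed.
week = error_budget("0.999", 2_000_000, 1_500)
print(week["allowed_failures"])    # 2000.0
print(week["budget_consumed"])     # 0.75
print(week["pause_feature_work"])  # False
```

At 99.9 percent, 2,000,000 requests allow 2,000 failures, so 1,500 failures consume 75 percent of the weekly budget. The same week with 2,600 failures would overspend it and trigger the reliability conversation.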

Business metrics (value)

Pick one primary business metric per outcome, plus one or two supporting metrics. Avoid vanity metrics.

Examples:

- Primary: activation rate

- Supporting: onboarding completion time, support ticket rate for onboarding

A simple success metrics matrix

| Metric type | Example metric | Owner | How you measure it | Review cadence |
|---|---|---|---|---|
| Business outcome | Onboarding completion rate | Product | Event analytics with a defined funnel | Weekly |
| User experience | p95 page load for key route | Engineering | RUM + Core Web Vitals dashboard | Weekly |
| Reliability | Success rate for critical API | Engineering | SLI from metrics, alerting thresholds | Weekly |
| Delivery health | Lead time for changes | Eng manager | CI/CD timestamps, PR cycle time | Bi-weekly |
| Quality | Defect escape rate | QA/Engineering | Incidents and bug intake tagged to releases | Monthly |

Key kickoff decisions include where these metrics live, who can access them, and what constitutes a “real baseline” (usually 1 to 2 weeks of production telemetry).

A practical kickoff agenda that fits in one session

For many teams, a 90- to 180-minute kickoff is enough, as long as you come prepared.

Pre-work (asynchronous)

Ask for these before the meeting:

- A one-paragraph problem statement and target users

- Known constraints (compliance, dates, vendors)

- Existing architecture context (systems involved, current pain points)

- Any non-negotiable technology constraints

If you are unsure what artifacts matter, Wolf-Tech’s architecture review checklist is a useful guide to “bring evidence, not opinions”: What a Tech Expert Reviews in Your Architecture.

In-meeting flow

- Outcome alignment: what changes, for whom, and how we will measure it

- Scope definition: thin slice, in-scope and out-of-scope, dependencies

- Risk pre-mortem: top risks, early signals, and owners

- Success metrics: business, production, and delivery metrics, plus baseline plan

- Operating model: decision rights, ceremonies, Definition of Done, release strategy

Post-kickoff follow-up (within 48 hours)

Send one short kickoff memo with decisions and open questions. If you do nothing else, do this. It prevents “silent disagreement.”

Kickoff deliverables that prevent rework

Kickoff artifacts should be lightweight but operational.

| Deliverable | Why it matters | Minimum content |
|---|---|---|
| Scope statement (one page) | Prevents scope drift | Outcome, users, in/out, constraints, dependencies |
| Risk register | Makes uncertainty manageable | Risk, category, owner, next mitigation step |
| Measurement plan | Makes progress objective | Metrics list, data sources, dashboard links, cadence |
| Definition of Done | Prevents “almost done” loops | Quality gates, security checks, deployability, observability |
| Decision log | Keeps architecture and product choices consistent | ADR-style notes, trade-offs, chosen option |

A strong Definition of Done typically includes:

- Code reviewed and merged with agreed quality gates

- Automated tests at the right level for the change

- Security baseline checks for dependencies and secrets

- Telemetry added for the new flow (logs and metrics at minimum)

- Deployable through the normal pipeline, with rollback approach defined
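
A Definition of Done is easier to enforce when each item maps to a piece of evidence that can be checked. The sketch below assumes hypothetical gate names that paraphrase the list above; in practice the evidence would come from your review tool, CI pipeline, and dashboards instead of a hand-written dictionary.

```python
from typing import Dict, List

# Hypothetical gate names, one per Definition of Done item above.
DEFINITION_OF_DONE = (
    "reviewed_and_merged",
    "tests_added",
    "security_checks_passed",
    "telemetry_added",
    "deployable_with_rollback",
)


def missing_gates(evidence: Dict[str, bool]) -> List[str]:
    """Return the gates that lack evidence, in checklist order."""
    return [gate for gate in DEFINITION_OF_DONE if not evidence.get(gate, False)]


# A change that looks finished but has no telemetry and no rollback approach.
change = {
    "reviewed_and_merged": True,
    "tests_added": True,
    "security_checks_passed": True,
}
print(missing_gates(change))  # ['telemetry_added', 'deployable_with_rollback']
```

Treating a missing entry the same as a failed one keeps "almost done" work from passing by omission.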

If you want to operationalize quality without chasing vanity targets, Wolf-Tech’s metric-focused approach can help: Code Quality Metrics That Matter.

Common kickoff failure modes (and how to avoid them)

These are patterns that repeatedly show up in real software projects.

The “scope is a feature list” trap

If scope is written as modules, teams argue later about behavior and edge cases. Fix it by defining scope as capabilities plus acceptance signals.

The “integration later” trap

Integrations are rarely “later.” They determine data shape, error handling, and operational behavior. Bring the highest-risk integration into the thin slice.
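
Pulling an integration into the thin slice can start with a very small contract check that runs against both your mocks and the real sandbox. The sketch below is an assumption-heavy illustration: the `ORDER_CONTRACT` fields and the sample payloads are invented, and a real contract would also cover error responses and optional fields.

```python
from typing import Any, Dict, List

# Hypothetical contract for an ERP "order" payload: field name -> expected type.
ORDER_CONTRACT: Dict[str, type] = {
    "order_id": str,
    "amount_cents": int,
    "currency": str,
}


def contract_violations(payload: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """List missing fields and type mismatches, in contract order."""
    problems = []
    for name, expected in contract.items():
        if name not in payload:
            problems.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected):
            actual = type(payload[name]).__name__
            problems.append(f"{name}: expected {expected.__name__}, got {actual}")
    return problems


good = {"order_id": "A-1", "amount_cents": 1250, "currency": "EUR"}
bad = {"order_id": "A-2", "amount_cents": "12.50"}  # wrong type, no currency

print(contract_violations(good, ORDER_CONTRACT))  # []
print(contract_violations(bad, ORDER_CONTRACT))
# ['amount_cents: expected int, got str', 'missing field: currency']
```

Even a check this small surfaces data-shape disagreements, such as amounts sent as strings, while they are still cheap to resolve.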

The “metrics after launch” trap

If measurement is deferred, the project can only be judged by stakeholder sentiment. Instrumentation is part of scope.

The “no decision rights” trap

If nobody knows who decides, decisions get made in Slack, then re-litigated in reviews. Assign a clear DRI per domain (product, architecture, security, release).

The “we don’t talk about constraints” trap

Constraints are not negativity; they are what make a plan credible. Capture them explicitly, especially around compliance, timelines, and existing platform boundaries.

When it’s worth bringing in an outside expert

Running a kickoff with an experienced partner is especially high-leverage when:

- You have a legacy codebase and unclear change risk

- You need to validate a stack and architecture quickly

- You have strict security, audit, or uptime expectations

- You need a thin slice in production fast, without compromising operability

Wolf-Tech specializes in full-stack development and technical consulting across delivery, modernization, cloud/DevOps, and architecture strategy. If you want a kickoff that produces a realistic plan, measurable success metrics, and an early risk burn-down path, explore Wolf-Tech’s approach and use the articles above to align stakeholders before the first sprint.