What a Tech Expert Reviews in Your Architecture

Most architecture problems are not “bad technology.” They are mismatches: between business goals and system boundaries, between reliability targets and delivery practices, and between how teams work and what the architecture demands.

An experienced tech expert reviews your architecture to surface those mismatches early, while changes are still cheap. The goal is not a prettier diagram; it is a safer path to ship, scale, and evolve.

Below is what a tech expert typically reviews, what evidence they look for, and the red flags that predict slow delivery and operational risk.

1) The outcomes and constraints (before any diagrams)

A credible architecture review starts with intent. Without it, “best practices” become noise.

A tech expert will ask:

- What are the business outcomes? (conversion, retention, throughput, cycle time, compliance, new market)

- What is the time horizon? (MVP in 8 weeks vs scale in 18 months)

- What are the constraints? (regulated data, existing vendor contracts, legacy platforms, skills, budget ceilings)

- What are the non-functional requirements (NFRs)? (p95 latency, uptime, RTO/RPO, data freshness, auditability)

If you cannot state measurable NFRs, a reviewer will usually help you define them because architecture decisions are trade-offs. “Fast” and “secure” only become actionable when you attach targets.
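
One way to make such targets actionable is to record them as data that can be checked against measurements. The sketch below is only an illustration: the `NfrTarget` type, the metric names, and every threshold are hypothetical and would come from your own outcomes and constraints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NfrTarget:
    """A measurable non-functional requirement (all values here are illustrative)."""
    name: str
    limit: float
    unit: str
    higher_is_better: bool = False

    def is_met(self, measured: float) -> bool:
        # Availability-style targets must stay at or above the limit;
        # latency-style targets must stay at or below it.
        if self.higher_is_better:
            return measured >= self.limit
        return measured <= self.limit


# Hypothetical targets, not recommendations.
TARGETS = [
    NfrTarget("p95_latency", 300.0, "ms"),
    NfrTarget("availability", 99.9, "%", higher_is_better=True),
    NfrTarget("rpo", 15.0, "minutes"),
]


def unmet_targets(measurements: dict[str, float]) -> list[str]:
    """Return names of targets that are violated or have no measurement at all."""
    failures = []
    for target in TARGETS:
        measured = measurements.get(target.name)
        if measured is None or not target.is_met(measured):
            failures.append(target.name)
    return failures
```

Treating a missing measurement as a failure is a deliberate choice in this sketch: a target nobody measures is not yet a requirement anyone can verify.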

If you want a deeper framework for aligning architecture to organizational change, Wolf-Tech’s guide on digital transformation in software development provides a practical set of pillars and metrics.

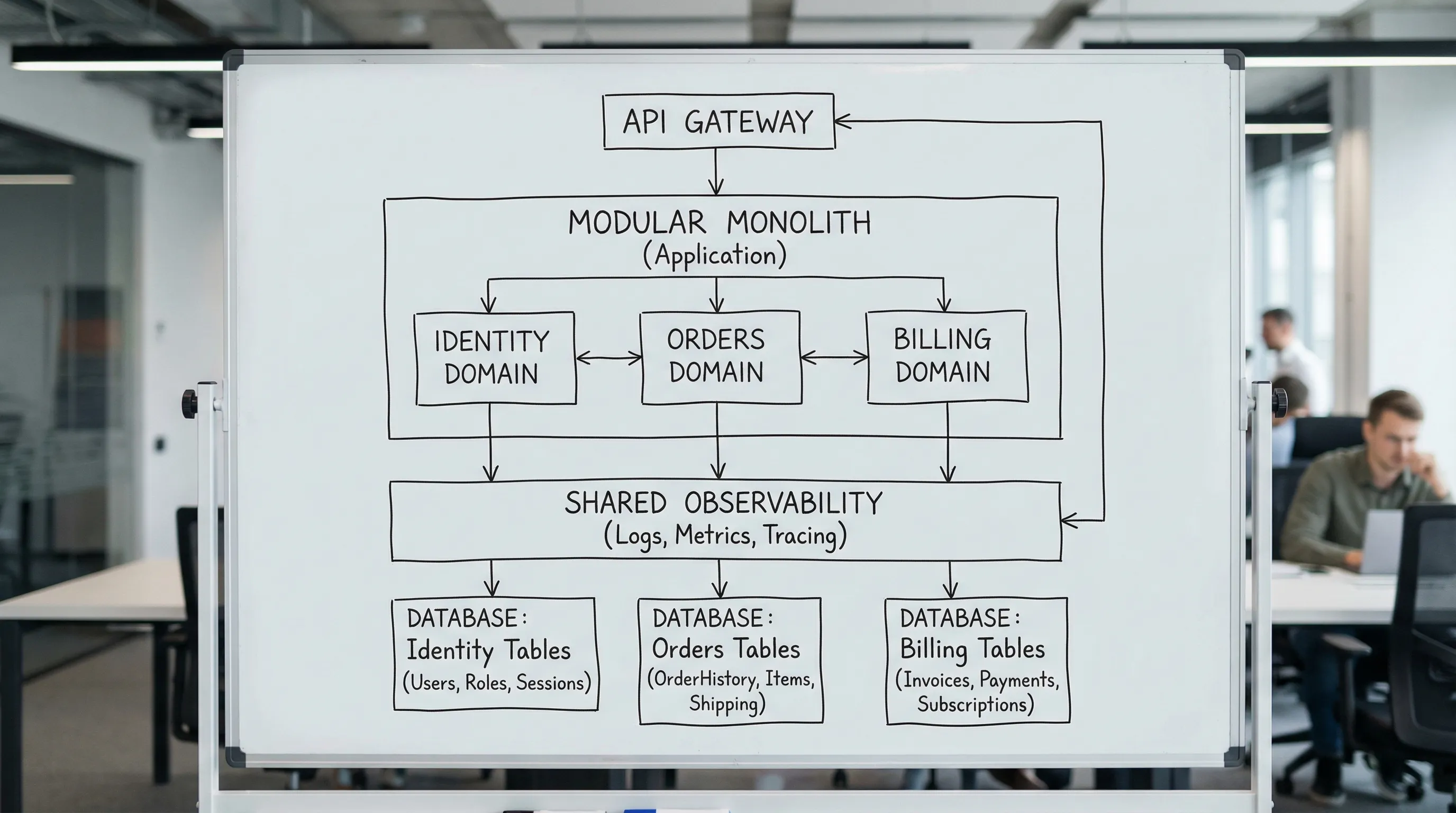

2) System boundaries and modularity (where complexity lives)

Most scalability failures happen at boundaries, not inside code.

A tech expert reviews:

Domain boundaries and ownership

They look for clear ownership of responsibilities and data. Whether you run a modular monolith, services, or serverless functions, the key questions are:

- Are business capabilities separated cleanly (billing, identity, catalog, scheduling)?

- Is there a clear “source of truth” for each entity?

- Are boundaries aligned with teams and deployment reality?

Coupling and change blast radius

Reviewers hunt for accidental coupling that makes every change risky:

- Shared databases across “services”

- A single “god” module that everything imports

- Tight synchronous call chains across many components

A strong architecture minimizes the number of components you must touch to ship a feature safely.
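
The idea of change blast radius can be approximated from a dependency graph. The following is a minimal sketch, assuming a hand-written module map; the module names and the `blast_radius` helper are hypothetical, and a real review would usually derive the graph from build or import tooling.

```python
from collections import deque

# Hypothetical module map: each key imports (depends on) the listed modules.
DEPENDS_ON = {
    "checkout": ["billing", "catalog", "common"],
    "billing": ["identity", "common"],
    "catalog": ["common"],
    "identity": ["common"],
    "scheduling": ["identity"],
    "common": [],
}


def blast_radius(changed: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every module that directly or transitively depends on `changed`."""
    # Invert the edges so we can walk from a module to its dependents.
    dependents: dict[str, set[str]] = {name: set() for name in depends_on}
    for module, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(module)

    affected: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, ()):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    affected.discard(changed)  # a cycle could otherwise report the module itself
    return affected
```

In this toy graph, a change to `common` reaches every other module, which is the "god module" shape described above, while a change to `catalog` reaches only `checkout`.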

3) Data architecture and lifecycle (the part you cannot refactor quickly)

Data shapes your system’s future. A tech expert will review the data model and operational lifecycle, not just schemas.

Key checks include:

Data ownership and integrity

- Primary keys, constraints, and referential integrity where appropriate

- Rules enforced in the right place (database constraints vs application-only)

- “Write paths” that are auditable and deterministic

Migration safety

- How schema changes are deployed (expand and contract patterns)

- Backfill strategies, data validation, and rollback plans

- Whether you can deploy without downtime
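
The expand and contract pattern mentioned above can be sketched with Python's built-in `sqlite3` module. This is an illustration under stated assumptions, not a production migration: the `customers` table and its columns are hypothetical, the backfill runs in one statement instead of batches, and the dual-write phase in application code is omitted.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, fullname TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO customers (fullname) VALUES (?)",
    [("Ada Lovelace",), ("Grace Hopper",)],
)

# 1) Expand: add the new column as nullable so old application code keeps working.
conn.execute("ALTER TABLE customers ADD COLUMN display_name TEXT")

# 2) Backfill: copy existing values; in production this usually runs in batches.
conn.execute("UPDATE customers SET display_name = fullname WHERE display_name IS NULL")
conn.commit()

# 3) Validate before switching reads to the new column.
missing = conn.execute(
    "SELECT COUNT(*) FROM customers WHERE display_name IS NULL"
).fetchone()[0]

# 4) Contract: drop the old column only after every reader and writer has moved.
#    DROP COLUMN needs SQLite 3.35+, so older runtimes skip this step here.
if missing == 0 and sqlite3.sqlite_version_info >= (3, 35, 0):
    conn.execute("ALTER TABLE customers DROP COLUMN fullname")

rows = conn.execute("SELECT id, display_name FROM customers ORDER BY id").fetchall()
```

Each step is deployable on its own, which is what makes rollback cheap: until the contract step runs, the old column is still present and old code still works.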

Consistency model

A reviewer clarifies what must be strongly consistent vs what can be eventually consistent. A common failure is building distributed complexity for workflows that did not need it.

4) Integration and API design (how your system talks)

Architecture reviews often find that API and integration decisions are the real bottleneck, especially once multiple teams and external partners are involved.

A tech expert reviews:

- API boundaries and versioning approach

- Idempotency for writes (critical for retries and reliability)

- Error contracts and observability (correlation IDs, structured errors)

- Eventing patterns (outbox pattern, dead-letter queues (DLQs) if messaging exists)

- Contract testing and backwards compatibility
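
To illustrate why idempotency matters for retries, here is a minimal in-memory sketch. The `PaymentService` class and its method names are hypothetical; a real service would typically persist the key with a unique constraint and handle concurrent requests, which this example does not attempt.

```python
import uuid


class PaymentService:
    """In-memory sketch; not thread-safe and not durable."""

    def __init__(self) -> None:
        self._results: dict[str, dict] = {}  # idempotency key -> stored response
        self.charges_created = 0

    def create_charge(self, idempotency_key: str, amount_cents: int) -> dict:
        # A retry with the same key returns the original response
        # instead of performing the write a second time.
        if idempotency_key in self._results:
            return self._results[idempotency_key]

        self.charges_created += 1
        response = {"charge_id": str(uuid.uuid4()), "amount_cents": amount_cents}
        self._results[idempotency_key] = response
        return response


service = PaymentService()
key = str(uuid.uuid4())  # generated once by the client, reused on every retry
first = service.create_charge(key, 1999)
retry = service.create_charge(key, 1999)
```

Here the retry returns the same response as the first call and only one charge is created, so a client can safely retry after a timeout without knowing whether the original request succeeded.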

If GraphQL is in play, reviewers also focus on query cost controls, caching implications, and field-level authorization. (Wolf-Tech’s practical overview of GraphQL APIs goes deep on those pitfalls.)

5) Delivery system and change safety (can you ship without fear?)

A tech expert will treat architecture and delivery as inseparable. If your delivery system is brittle, even a great architecture will feel slow.

They typically review:

CI/CD maturity

- Build repeatability, environment parity, artifact promotion

- Automated tests that run fast enough to be used (unit, contract, smoke)

- Deployment strategy (blue/green, canary, feature flags) to reduce blast radius

Metrics that predict outcomes

A reviewer will often ask for DORA-style signals: deployment frequency, lead time for changes, change failure rate, and mean time to restore (MTTR). (DORA is the research program behind these metrics.)
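
These signals can be computed from a plain deployment log. The sketch below is a simplified reading of the four metrics, not DORA's official methodology; the `Deployment` record, its field names, and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_summary(deploys: list[Deployment], window_days: int) -> dict:
    if not deploys:
        raise ValueError("need at least one deployment in the window")
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    failures = [d for d in deploys if d.failed]
    restores = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deploys_per_day": len(deploys) / window_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "mean_time_to_restore": (
            sum(restores, timedelta()) / len(restores) if restores else None
        ),
    }


# Hypothetical week of deployments.
t0 = datetime(2026, 1, 5, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=2)),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=6),
               failed=True, restored_at=t0 + timedelta(days=2, hours=7)),
    Deployment(t0 + timedelta(days=3), t0 + timedelta(days=3, hours=8)),
]
summary = dora_summary(deploys, window_days=7)
```

For this sample, the median lead time is five hours, one in four changes failed, and the single failure took one hour to restore. The trend over several windows usually says more than any single value.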

For practical implementation details, Wolf-Tech’s post on CI/CD technology lays out a modern baseline and a staged adoption plan.

6) Reliability and operability (what happens at 2 a.m.)

In 2026, “works on my machine” is not a milestone. A tech expert reviews whether the system can be operated predictably.

They look for:

- SLIs/SLOs (even simple ones) and alerting tied to user impact

- Logs, metrics, and tracing that let you debug without guessing

- Runbooks for top failure modes and on-call readiness

- Timeouts, retries, backpressure, circuit breakers where relevant

- Resilience patterns in data and messaging (dead-letter handling, replays)
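
As one example of the resilience patterns listed above, a circuit breaker can be sketched in a few lines. This is a minimal single-threaded illustration, assuming a simple consecutive-failure threshold; the class name, parameters, and defaults are hypothetical, and production systems usually rely on a maintained library instead.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when calls are rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self._clock = clock  # injectable so tests do not need to sleep
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after_s:
                # Fail fast instead of piling more load onto a struggling dependency.
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: allow one trial call (half-open state).
            self._opened_at = None
            self._failures = self.failure_threshold - 1
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # any success closes the circuit fully
        return result
```

A caller would wrap each outbound request, for example `breaker.call(lambda: fetch_profile(user_id))`, where `fetch_profile` stands in for any dependency call that already has its own timeout.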

Reliability reviews often overlap with backend design. Wolf-Tech’s guide to backend development best practices for reliability is a strong companion if your architecture is struggling with incidents or instability.

7) Security posture and supply chain risk

A modern architecture review includes security by design. The most common gaps are not exotic hacks; they are missing fundamentals.

A tech expert reviews:

- Authentication and authorization model (including service-to-service)

- Secrets management and least privilege access

- Data classification and encryption needs

- Secure defaults in APIs (rate limiting, input validation)

- Dependency and build integrity practices (SBOMs, provenance, patch hygiene)

Two credible references many reviewers align with are:

- NIST Secure Software Development Framework (SSDF)

- OWASP Application Security Verification Standard (ASVS)

A key sign of maturity is evidence: threat models for critical flows, security checks in CI, and a clear process for vulnerability remediation.

8) Performance and scalability (measured, not assumed)

A tech expert focuses on performance the same way they focus on correctness: via measurement.

They review:

- Baseline metrics (p95/p99 latency, throughput, error rates)

- Capacity assumptions and where queues form

- Caching strategy and invalidation discipline

- N+1 patterns and query plans in the data layer

- Frontend performance budgets (Core Web Vitals if web)
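
To show why baselines are stated as p95/p99 and not as averages, here is a small sketch using the nearest-rank percentile definition (one of several common definitions). The latency samples are hypothetical.

```python
import math


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of the data at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical request latencies in milliseconds: mostly fast, with a slow tail.
latencies_ms = [80.0] * 90 + [200.0] * 8 + [1500.0] * 2
mean_ms = sum(latencies_ms) / len(latencies_ms)
p50, p95, p99 = (percentile(latencies_ms, p) for p in (50, 95, 99))
```

With this sample the mean is 118 ms and the median is 80 ms, yet p99 is 1500 ms. An average-based dashboard would hide the requests that users actually complain about.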

If your product is a web app, a reviewer will often connect performance to architectural choices (rendering strategy, caching boundaries, where data is fetched). Wolf-Tech’s Next.js performance tuning guide is a good example of this measurement-first approach.

9) Cost and sustainability (architecture that you can afford to run)

Cost is an architecture property. A tech expert reviews whether the system has a path to predictable spend.

They check:

- Cost visibility by environment and major workload

- Overprovisioning patterns (always-on compute, unused replicas)

- Data growth drivers (logs, events, analytics) and retention controls

- Whether scale-up events are financially survivable

This is rarely about “cheapest cloud.” It is about designing for cost-aware operations.
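
The effect of retention controls on spend is simple arithmetic, sketched below. The ingest volume and the price are hypothetical placeholders, and the model deliberately ignores compression, storage tiering, and growth in ingest.

```python
def steady_state_storage_gb(daily_ingest_gb: float, retention_days: int) -> float:
    """Stored volume once daily ingestion and daily expiry balance out."""
    return daily_ingest_gb * retention_days


def monthly_storage_cost(daily_ingest_gb: float, retention_days: int,
                         price_per_gb_month: float) -> float:
    """Approximate monthly storage cost at steady state."""
    return steady_state_storage_gb(daily_ingest_gb, retention_days) * price_per_gb_month


# Hypothetical inputs: 50 GB of logs per day at 0.10 currency units per GB-month.
cost_90_days = monthly_storage_cost(50, 90, 0.10)
cost_14_days = monthly_storage_cost(50, 14, 0.10)
```

Under these assumptions, cutting retention from 90 to 14 days reduces stored volume from 4,500 GB to 700 GB, which is why reviewers ask who owns each retention setting.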

10) Team fit and governance (architecture is a socio-technical system)

A tech expert will also ask: can your team realistically operate this architecture?

They review:

- Team boundaries vs code boundaries (do they align?)

- Ownership model (who is on call for what?)

- Documentation quality (ADRs, onboarding guides, runbooks)

- Platform “golden paths” and guardrails, if you have multiple teams

If you have significant legacy surface area, reviewers also look for practical modernization options that reduce risk. Wolf-Tech’s guide on modernizing legacy systems without disrupting business is aligned with the incremental approach most experts recommend.

An architecture review scorecard (what evidence to bring)

A useful review is evidence-driven. Here is a simple scorecard a tech expert can use to structure findings.

| Review area | What gets reviewed | Evidence to request | Common red flag |
|---|---|---|---|
| Outcomes and NFRs | Measurable targets, constraints, priorities | NFR doc, SLO draft, risk register | “We’ll make it scalable later” |
| Boundaries and coupling | Domain seams, dependencies, ownership | Context diagram, module map, dependency graph | Shared DB across “services” |
| Data architecture | Model, migrations, consistency | ERD, migration history, backfill plan | Manual hotfixes to production data |
| APIs and integration | Contracts, versioning, idempotency | OpenAPI/GraphQL schema, error contract, contract tests | Breaking changes without versioning |
| Delivery system | CI/CD, tests, release safety | Pipeline runs, test strategy, deploy metrics | Deploys require heroics |
| Operability | Observability, runbooks, SLOs | Dashboards, alert policy, incident reviews | No correlation IDs, no traces |
| Security | AuthZ, secrets, SDLC controls | Threat model, dependency scanning results | Secrets in configs, broad IAM |
| Performance | Bottlenecks, budgets, load profile | RUM/APM, load test results, query plans | “It’s slow sometimes” with no data |
| Cost | Spend drivers, growth projections | Cost reports, retention policies | Surprise bills, no ownership |
| Team fit | Ownership, docs, governance | Team topology, ADRs, onboarding checklist | Architecture no one can explain |

How to prepare for an expert architecture review (fast, high-signal)

You do not need perfect documentation. You need a small set of artifacts that show reality.

Bring:

- A current architecture diagram (even if messy) and a list of major components

- A sample user journey mapped end-to-end (request flow, data writes, async steps)

- Recent incidents or production issues (and what you tried)

- A snapshot of CI/CD (pipeline steps, test suite duration, deployment approach)

- Observability screenshots (dashboards, alerts) if they exist

- 2-3 “next quarter” initiatives that are currently risky

The faster a reviewer can connect business outcomes to technical bottlenecks, the more actionable the recommendations will be.

What you should expect as deliverables

A strong architecture review ends with clear priorities, not a long list of opinions.

Typical deliverables include:

- A short executive summary (risk, cost, and delivery impact)

- A prioritized backlog of changes (quick wins vs structural work)

- Recommended guardrails (architecture rules, interface standards, quality gates)

- A pragmatic migration path if modernization is needed

- Metrics to track whether the architecture is actually improving (delivery, reliability, cost)

If you want to complement architecture findings with engineering quality signals, Wolf-Tech’s post on code quality metrics that matter is a practical guide to turning “quality” into measurable levers.

Frequently Asked Questions

How long does an architecture review take?

A focused review can take days to a couple of weeks depending on system size and the quality of existing evidence. The fastest reviews are scoped to specific outcomes and risks.

Do we need to be on a specific cloud or tech stack to get value?

No. A tech expert reviews fundamentals (boundaries, data, delivery, operability, security) that apply across stacks, then tailors recommendations to your constraints.

Will an expert recommend microservices?

Not automatically. Many reviews conclude that a modular monolith or a hybrid approach is safer until boundaries, delivery, and operability are mature.

What are the most common architecture red flags?

Undefined NFRs, unclear data ownership, brittle CI/CD, lack of observability, and high coupling that makes changes risky.

Can an architecture review help with legacy modernization?

Yes. A good review identifies fracture planes, migration sequencing, and safety mechanisms (tests, feature flags, incremental releases) so modernization does not become a big-bang rewrite.

Want a second opinion from a tech expert?

If you are planning a modernization, preparing to scale, or simply feeling architecture drag in delivery speed and reliability, Wolf-Tech can help you assess the current state and define a pragmatic path forward.

Explore Wolf-Tech’s full-stack development and consulting capabilities, or reach out to discuss an architecture review tailored to your system, constraints, and timeline.