Software Programmers: Hiring Signals That Predict Success

Hiring software programmers is one of the highest-leverage decisions a business can make, and one of the easiest places to get fooled by polished resumes, trendy buzzwords, or a strong performance in a single coding exercise. The goal is not to find someone who can “code”; it is to find someone who can deliver valuable software safely, collaborate effectively, and keep systems healthy as they evolve.

This guide focuses on hiring signals that reliably predict success, plus practical ways to test those signals without turning your process into a month-long obstacle course.

What “success” looks like for software programmers

Before signals, define the outcome. In most teams, a successful software programmer consistently does four things:

- Delivers working increments of product value, not just “tasks completed”.

- Maintains change safety, meaning the team can ship without fear (tests, reviews, monitoring, rollbacks).

- Makes the system easier to work with over time, not harder (clear boundaries, readable code, sane architecture).

- Improves team throughput, via communication, documentation, and good technical judgment.

If you only screen for raw coding speed, you’ll hire people who can type quickly, but you may miss the people who actually make software organizations effective.

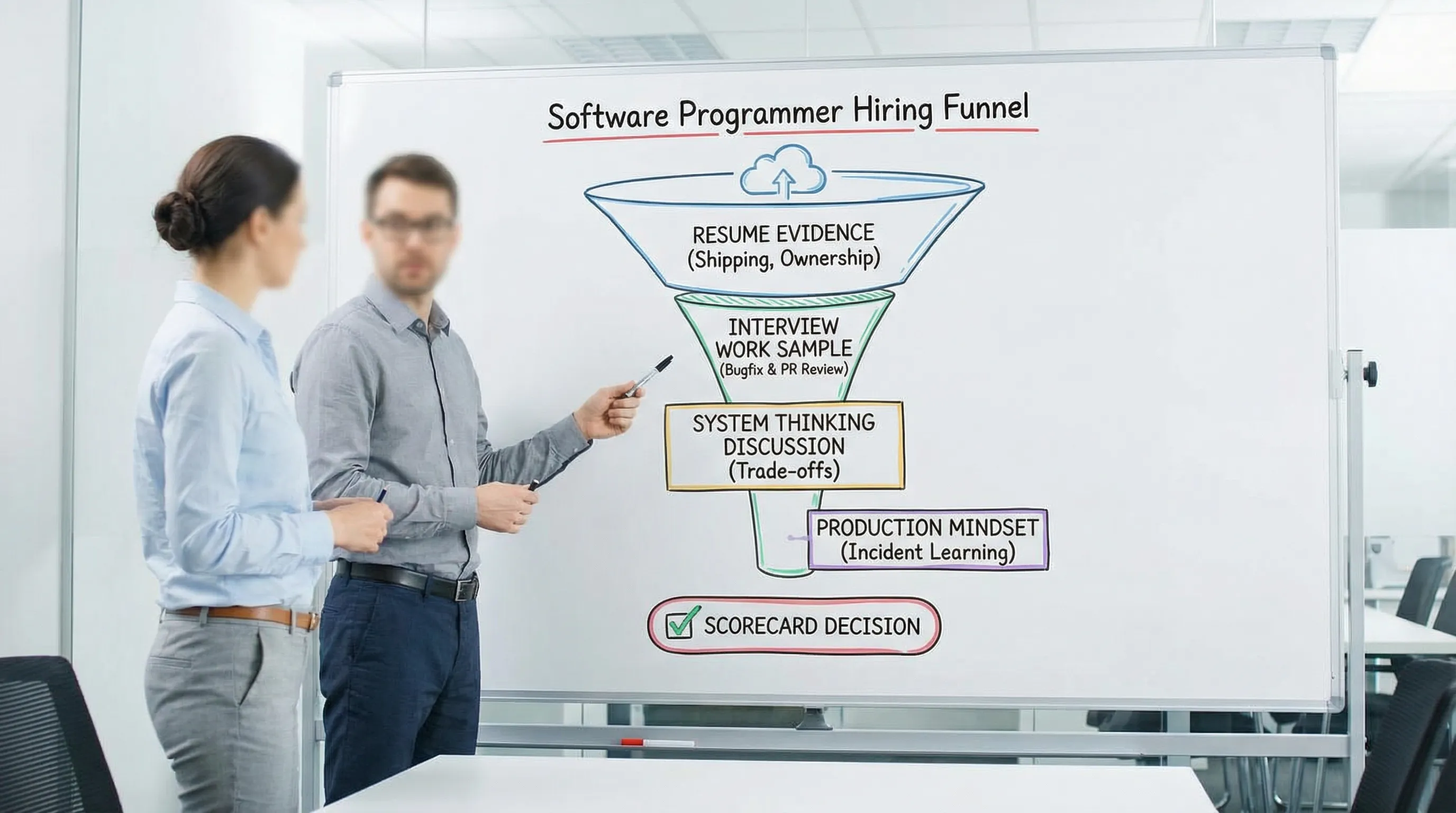

The most predictive hiring signals (and how to validate them)

Signal 1: Proof of shipping in real constraints

What it predicts: The ability to finish work, navigate ambiguity, and make trade-offs.

What to look for:

- Concrete stories about shipping: what changed, why it mattered, what broke, what they learned.

- Understanding of constraints: deadlines, legacy code, compliance, performance, limited staffing.

- Ownership language: “I drove…”, “I coordinated…”, “I monitored…”, not only “we did…”.

How to validate in an interview: Ask for one deep project walkthrough.

Good prompts:

- “Pick one feature you shipped that you’re proud of. What was the impact, and how did you measure it?”

- “What surprised you after release?”

- “What would you do differently if you had to build it again?”

This is also where delivery practices show up naturally: feature flags, canary releases, rollback readiness, release notes, and stakeholder communication.

Signal 2: Quality habits that scale beyond one person

What it predicts: Maintainability, reliability, and the ability to work in a team codebase.

Look for programmers who talk about quality as a system, not a personal preference:

- PR size discipline, meaningful reviews, and a clear Definition of Done

- Testing strategy choices (what to test, where, and why)

- Static analysis, formatting, and automation

- Refactoring as a continuous activity, not a one-time “cleanup sprint”

If you want a deeper view of what to measure once they join, Wolf-Tech has a companion guide on code quality metrics that matter.

How to validate: Use a small, realistic code exercise with discussion.

Instead of “invert a binary tree”, give them:

- A small service function with a bug and weak tests, ask them to fix it and improve confidence.

- A PR-style diff (even a fake one) and ask them to do a review.

You are testing judgment: what they change first, what they leave alone, and how they communicate risk.
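As an illustration only, here is a minimal sketch of what such a bugfix exercise could look like. The `paginate` function, its off-by-one bug, and the tests are all hypothetical and not taken from any real codebase. The candidate would receive the buggy function and the weak test, and a reasonable submission might resemble the fixed function and the stronger test.

```python
def paginate_buggy(items, page, page_size):
    """Return the given 1-indexed page. Handed to the candidate as-is."""
    start = page * page_size  # deliberate bug: treats `page` as 0-indexed
    return items[start:start + page_size]


def test_weak():
    # The weak test that ships with the exercise: it only checks the
    # return type, so it passes even though the function is wrong.
    assert isinstance(paginate_buggy([1, 2, 3], 1, 2), list)


def paginate(items, page, page_size):
    """One possible fix: 1-indexed pages, explicit input validation."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be >= 1")
    start = (page - 1) * page_size
    return items[start:start + page_size]


def test_stronger():
    items = [1, 2, 3, 4, 5]
    assert paginate(items, 1, 2) == [1, 2]  # first page
    assert paginate(items, 3, 2) == [5]     # partial last page
    assert paginate(items, 4, 2) == []      # past the end
    try:
        paginate(items, 0, 2)
    except ValueError:
        pass
    else:
        raise AssertionError("page 0 should be rejected")
```

The fix itself is one line. The discussion is where the signal is: which edge cases the candidate chose to test, whether they added validation or left it alone, and how they explain the risk of changing behavior that callers might depend on.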

Signal 3: Systems thinking (even in non-senior roles)

What it predicts: Better technical decisions, fewer accidental outages, and fewer “local optimizations” that hurt the product.

Systems thinking shows up when candidates can reason about:

- Data lifecycle (creation, validation, migrations, retention)

- API boundaries and versioning

- Failure modes (timeouts, retries, idempotency)

- Performance trade-offs (latency, caching, queueing)

You don’t need everyone to be an architect, but you do want people who can see beyond a single file.
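To make the failure-modes item concrete, the sketch below shows one way a candidate might reason about retries and idempotency together. Everything in it is an assumption made for illustration: `FlakyPaymentService` is an in-memory stand-in for a remote service, and `charge_with_retry` is a hypothetical helper, not an API from any real library.

```python
import time
import uuid


class FlakyPaymentService:
    """Hypothetical in-memory stand-in for a remote payment service."""

    def __init__(self, failures_before_success):
        self.failures_left = failures_before_success
        self.processed = {}  # idempotency key -> stored result

    def charge(self, idempotency_key, amount_cents):
        # A repeated key returns the stored result instead of charging again.
        if idempotency_key in self.processed:
            return self.processed[idempotency_key]
        result = {"charged": amount_cents}
        self.processed[idempotency_key] = result
        if self.failures_left > 0:
            # Simulate the awkward case: the charge went through,
            # but the response never reached the caller.
            self.failures_left -= 1
            raise TimeoutError("response lost")
        return result


def charge_with_retry(service, amount_cents, max_attempts=4, base_delay=0.01):
    """Retry on timeout with exponential backoff, reusing one idempotency key."""
    key = str(uuid.uuid4())  # one key for every attempt of this logical charge
    for attempt in range(1, max_attempts + 1):
        try:
            return service.charge(key, amount_cents)
        except TimeoutError:
            if attempt == max_attempts:
                raise
            time.sleep(base_delay * 2 ** (attempt - 1))
```

The design point worth listening for in an interview is that the key is generated once, outside the retry loop. Without it, a timeout followed by a retry could charge the customer twice.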

How to validate: Give a small scenario and ask for trade-offs.

Example prompt:

“Users report the dashboard is slow at peak. You can’t rewrite the whole thing. What’s your first week of investigation and mitigation?”

Strong answers include measurement-first steps, practical narrowing, and staged fixes. If your organization cares heavily about reliability, you can align this scenario with the guide on backend development best practices for reliability.
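A measurement-first answer might start with something as simple as the following sketch, which ranks endpoints by 95th-percentile latency. It assumes request timings are already available as `(endpoint, duration_ms)` pairs; the function names and the sample data format are hypothetical.

```python
from collections import defaultdict
from statistics import quantiles


def p95_by_endpoint(samples):
    """samples: iterable of (endpoint, duration_ms). Returns {endpoint: p95_ms}."""
    grouped = defaultdict(list)
    for endpoint, duration_ms in samples:
        grouped[endpoint].append(duration_ms)

    report = {}
    for endpoint, durations in grouped.items():
        if len(durations) < 2:
            # Too few samples to interpolate; report the single value.
            report[endpoint] = durations[0]
        else:
            # n=20 yields 19 cut points; the last one is the 95th percentile.
            # method="inclusive" keeps the estimate within the observed range.
            report[endpoint] = quantiles(durations, n=20, method="inclusive")[-1]
    return report


def slowest_first(report):
    """Order endpoints so the worst tail latency comes first."""
    return sorted(report.items(), key=lambda item: item[1], reverse=True)
```

Looking at tail latency per endpoint, rather than an overall average, is what lets a candidate narrow "the dashboard is slow" down to a specific query or call before proposing any fix.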

Signal 4: Production mindset and operational empathy

What it predicts: Fewer incidents, faster recovery, and smoother on-call.

A production-minded programmer thinks about:

- Observability (logs, metrics, traces)

- Safe deploys and blast-radius reduction

- Incident learning (postmortems, follow-ups)

- Backward compatibility and migrations

How to validate: Ask about a real incident.

- “Tell me about an outage you were involved in. What was the root cause and the follow-up?”

- “How did you reduce the chance of recurrence?”

If they have never been near production, that’s not an automatic no (especially for junior roles), but they should show curiosity and responsibility, not avoidance.

Signal 5: Communication that reduces rework

What it predicts: Speed, alignment, and less “ping-pong” between product and engineering.

Look for:

- Clarifying questions before building

- Clear written thinking (tickets, PR descriptions, docs)

- Ability to explain trade-offs to non-engineers

How to validate: Use a short written prompt.

Give a one-paragraph spec and ask them to respond with:

- Assumptions

- Open questions

- A thin-slice plan

This closely mirrors how real work starts.

Signal 6: Learning agility and de-risking behavior

What it predicts: Adaptation to new stacks, better debugging, and faster growth.

The best programmers do not just “learn”; they de-risk. They run spikes, build thin vertical slices, and validate unknowns early.

This matches the modern delivery approach Wolf-Tech teaches in software building: a practical process for busy teams.

How to validate: Ask how they approach unfamiliar territory.

- “When you hit a problem you don’t understand, what’s your process?”

- “Tell me about a time you had to learn a system fast. What did you do first?”

Signal 7: Responsible use of AI tools (2026 reality)

What it predicts: Speed without recklessness.

In 2026, many strong programmers use AI assistants, but success depends on how they manage risk:

- They verify outputs with tests and reasoning.

- They understand security and licensing boundaries.

- They avoid pasting sensitive code or customer data into tools without approval.

How to validate: Ask directly.

- “How do you use AI tools in day-to-day development?”

- “What safeguards do you use to avoid subtle bugs or insecure patterns?”

A red flag is either extreme: “I never use it” (often unrealistic) or “I trust it completely”.

A practical scorecard: signals, tests, and red flags

Use a simple, repeatable scorecard so you don’t hire based on vibes. Structured interviews consistently outperform unstructured ones in hiring research, and work-sample style assessments are particularly effective (a classic summary is Schmidt and Hunter’s meta-analysis in Psychological Bulletin, 1998).

| Hiring signal | What it predicts | Best way to test | Common red flags |
|---|---|---|---|
| Shipped meaningful work | Delivery ownership and judgment | Deep project walkthrough | Only talks about tasks, no impact, no learning |
| Quality habits | Maintainability and team throughput | PR review exercise, small bugfix + tests | Treats tests as optional, dismisses reviews |
| Systems thinking | Better design decisions, fewer regressions | Scenario trade-off discussion | Jumps to rewrites, ignores constraints |
| Production mindset | Reliability, operability | Incident story, observability discussion | Blames others, no follow-up actions |
| Communication | Less rework, faster alignment | Written prompt, clarifying questions | Builds before understanding, vague answers |
| Learning agility | Faster ramp-up, adaptability | “Unfamiliar system” stories | No strategy, relies on heroics |
| Responsible AI use | Speed with governance | AI workflow and safeguards | Blind trust, unsafe data handling |
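If you want interviewers to record scores consistently, the scorecard can be captured in a few lines of code. The sketch below is one possible shape, not a prescribed tool: the 1 to 4 scale, the equal default weights, and the names `Rating` and `summarize` are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

# The seven signals from the scorecard table.
SIGNALS = (
    "shipped_work",
    "quality_habits",
    "systems_thinking",
    "production_mindset",
    "communication",
    "learning_agility",
    "responsible_ai_use",
)


@dataclass(frozen=True)
class Rating:
    signal: str
    score: int     # hypothetical scale: 1 (weak) to 4 (strong)
    evidence: str  # what the candidate actually said or did


def summarize(ratings, weights=None):
    """Return (weighted average score or None, list of signals not yet rated)."""
    weights = weights or {signal: 1.0 for signal in SIGNALS}
    for rating in ratings:
        if rating.signal not in SIGNALS:
            raise ValueError(f"unknown signal: {rating.signal}")
        if not 1 <= rating.score <= 4:
            raise ValueError("score must be between 1 and 4")
        if not rating.evidence.strip():
            raise ValueError("every score needs written evidence")

    rated = {rating.signal: rating for rating in ratings}
    missing = [signal for signal in SIGNALS if signal not in rated]
    total_weight = sum(weights[signal] for signal in rated)
    if total_weight == 0:
        return None, missing
    average = sum(weights[s] * rated[s].score for s in rated) / total_weight
    return average, missing
```

Requiring an evidence string for every score is the part that counters vibes-based hiring: an interviewer cannot submit a number without writing down what they observed.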

Designing an interview process that actually predicts performance

A strong process is not longer; it is more diagnostic.

Keep the loop small, structured, and consistent

A practical loop for many teams:

- One screen focused on role fit and communication

- One work-sample style technical session (bugfix, small feature, or PR review)

- One system and delivery discussion (production mindset, trade-offs)

- One culture and collaboration interview (cross-functional behaviors)

Each stage should have explicit pass criteria. To define delivery criteria and quality gates for the team they’ll join, start from an engineering baseline that covers CI/CD expectations, review discipline, and release practices (see CI/CD technology: build, test, deploy faster).

Prefer job-relevant tasks over abstract puzzles

Abstract algorithm puzzles select for practice and pattern recall. Job-relevant work samples select for:

- Debugging

- Reading existing code

- Making safe changes

- Communicating trade-offs

That is what most software programmers do for a living.

Calibrate for level: junior, mid, senior

“Signals” look different by seniority.

- Junior programmers: prioritize learning agility, communication, and fundamentals (clean code, basic testing). Expect less production experience, but require responsibility and curiosity.

- Mid-level programmers: expect independent delivery, good PR habits, and real-world debugging stories.

- Senior programmers: expect system trade-offs, mentoring, operational leadership, and pragmatic architecture (not over-engineering).

If you’re hiring “full stack” roles, avoid unicorn expectations and instead define boundaries and ownership clearly. Wolf-Tech covers this in full stack development: what CTOs should know.

Hiring signals that are often misleading

Some common traps:

- “Years of experience” as a proxy for skill: Ten years can mean one year repeated ten times.

- Tool keyword density: A good engineer can learn tools, a poor engineer can list them.

- Overindexing on GitHub activity: Many great programmers work on private codebases.

- Charisma bias: Confident storytelling is valuable, but it must match evidence.

If a candidate is strong, they should be able to point to concrete decisions, measurable impact, and how they reduced risk.

When hiring is the wrong move (and partnering is faster)

Sometimes you are not hiring for a single seat; you’re trying to buy time:

- You need a production-ready MVP quickly.

- You need modernization without destabilizing the business.

- You need senior-level architecture and delivery leadership for a limited window.

In those cases, partnering with a proven delivery team can be more effective than trying to hire under pressure. If you go that route, use an evidence-based vendor evaluation. Wolf-Tech’s guide on how to vet custom software development companies is a practical starting point.

Frequently Asked Questions

What is the best predictor of success when hiring software programmers? The strongest practical predictor is a combination of structured interviewing and job-relevant work samples, plus evidence they have shipped and supported real software under constraints.

Should we use take-home coding tests? Take-homes can work if they are small (1 to 2 hours), realistic, and assessed with clear criteria. Many teams get better signal from a collaborative work sample (bugfix or PR review) that mirrors the job.

How do you evaluate a programmer’s code quality in an interview? Use a PR review exercise or a small change request in an existing code snippet, then ask them to explain trade-offs, tests, and risk. This reveals habits more reliably than greenfield coding.

How do we assess production mindset if the candidate hasn’t been on-call? Ask how they would instrument and release changes safely, how they would investigate incidents, and what they think “done” means. You’re looking for responsibility and system awareness.

What are red flags that predict poor performance? Consistent blame-shifting, inability to discuss trade-offs, dismissing testing and reviews, overconfidence without evidence, and a pattern of proposing big rewrites instead of incremental risk reduction.

Need help raising the bar for engineering hiring and delivery?

If you want to improve how your team evaluates software programmers, or you need senior engineering support to deliver and modernize safely, Wolf-Tech can help with full-stack development, code quality consulting, legacy code optimization, and tech strategy.

Explore Wolf-Tech at wolf-tech.io or start with the most relevant resources: