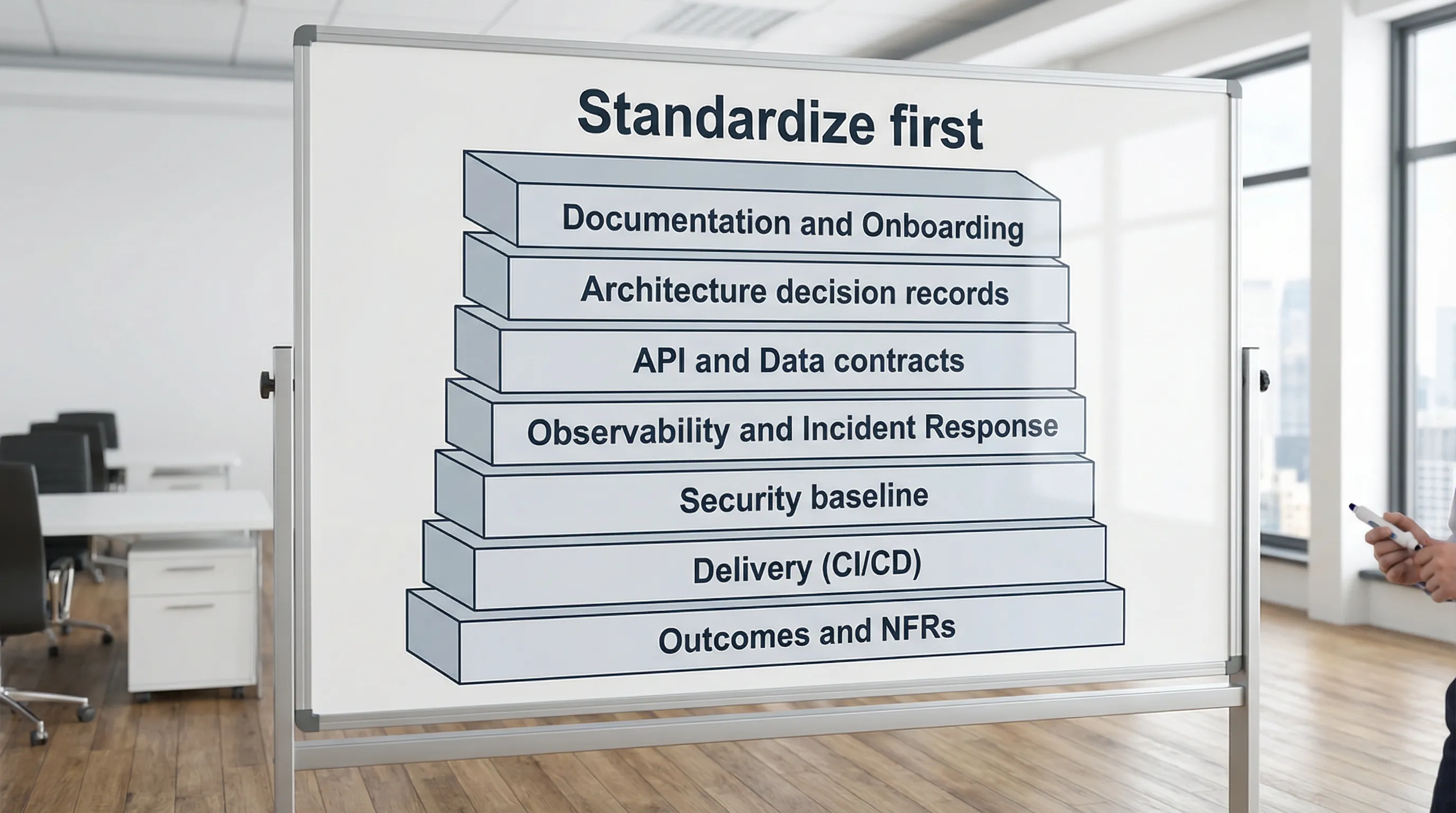

Software Development Technology: What to Standardize First

Standardization gets a bad reputation because it sounds like bureaucracy. In practice, the right standards do the opposite: they remove avoidable decisions, reduce production risk, and make it easier to scale a product and an engineering org without slowing down.

The mistake most teams make is standardizing the wrong things first (tooling preferences, “approved” frameworks, or a single architecture style). If you want software development technology to scale with your business, standardize the pieces that create the highest coordination cost and the biggest reliability and security risks.

Below is a pragmatic order of operations you can use whether you are a startup moving from 5 to 25 engineers, or an established business modernizing multiple legacy systems.

What “standardize” should mean (and what it should not)

Standardize means agreeing on a small set of defaults, guardrails, and proofs that keep delivery safe and repeatable across teams.

It does not mean forcing everyone onto the same framework or banning experimentation. A good rule is:

- Standardize what affects production safety, cross-team collaboration, and compliance.

- Allow variation at the edges (UI patterns, internal libraries, developer experience), as long as it still passes your standards.

If you want a quick mental model, standards should mostly be about how you ship and operate, not just what you build with.

1) Standardize outcomes and non-functional requirements (NFRs) first

Before picking or consolidating tools, standardize what “good” means. This is the fastest way to stop architecture debates from becoming religious arguments.

Start with measurable non-functional requirements for the user and the business: reliability, latency, data correctness, privacy, and change safety. Tie them to a product outcome (for example, “checkout conversion” or “support ticket deflection”), then define the engineering constraints that protect that outcome.

This aligns with how reliability is operationalized through SLOs and error budgets (Google’s SRE approach is the canonical reference: the SRE book is still worth bookmarking).

A lightweight baseline can look like this:

| NFR area | What to standardize | Example “good” definition (adapt to your domain) | Proof you require |

|---|---|---|---|

| Reliability | SLOs, SLIs, error budgets | Availability SLO for critical journeys | Dashboard + alerting in place |

| Performance | Performance budgets | p95 latency targets for key APIs | Load test and/or RUM baseline |

| Security | Minimum controls | MFA, least privilege, secrets handling | Security checklist passed in PR/CI |

| Change safety | Release constraints | Reversible deploys, safe rollbacks | Release playbook and evidence |

| Data | Data lifecycle rules | Retention, audit, backups, migrations | Backup restore test, migration plan |

If you already have Wolf-Tech’s “thin vertical slice” mindset in your org, this becomes much easier because you can validate these constraints early. Related reading: Developing Software Solutions That Actually Scale.

2) Standardize the delivery workflow (repo conventions and Definition of Done)

Once outcomes and constraints are clear, standardize how work moves from idea to production. This is a high-leverage form of standardization because it reduces friction across engineers, teams, and external partners.

Focus on interfaces between humans, not just systems:

- A shared Definition of Done (security checks passed, tests green, instrumentation present, rollback plan known)

- PR expectations (size limits, review SLA, required checks)

- Branching strategy default (many teams adopt trunk-based development, but choose what matches your release model)

- How you write and store decisions (ADRs), and where teams can find them

If you want a practical process template, Wolf-Tech’s guide on lightweight governance is relevant: Software Building: A Practical Process for Busy Teams.

3) Standardize CI/CD and release mechanics (the real “software development technology” backbone)

If you only standardize one technical area early, make it your delivery system. A consistent CI/CD baseline is how you turn engineering into a repeatable capability instead of a heroic effort.

Standardize the minimum pipeline building blocks:

- Build reproducibility (lockfiles, pinned versions where appropriate)

- Tests as gates (unit, integration, contract checks depending on system shape)

- Artifact creation and provenance (what you deploy is what you built)

- Environment strategy (dev, preview, staging, prod) and promotion rules

- Infrastructure as Code baseline and review expectations

- Deployment patterns that reduce blast radius (blue/green, canary, feature flags)

This is also where teams start measuring delivery performance with DORA-style metrics (lead time, deployment frequency, change failure rate, MTTR). The widely cited source is the DORA research summarized in Accelerate.

Wolf-Tech has a dedicated deep dive you can align with: CI CD Technology: Build, Test, Deploy Faster.

Key principle: standardize your pipeline capabilities, not necessarily your CI vendor.

4) Standardize a security baseline and supply chain controls

Security is another area where inconsistency becomes expensive, especially as you add teams, vendors, and more dependencies.

A practical way to avoid chaos is to define a security baseline that every repo and service must meet, regardless of language or framework. You can map it to recognized guidance like the NIST Secure Software Development Framework (SSDF) and common web risks via OWASP.

Keep the baseline short and enforceable:

| Control area | Standardize | How to enforce |

|---|---|---|

| Identity and access | MFA, least privilege, service accounts | Central IdP policies + IaC review |

| Secrets | No secrets in repos, rotation expectations | Secret scanning + vault/KMS usage |

| Dependencies | Update policy, vulnerability SLAs | SCA in CI + monthly patch cadence |

| Code scanning | Minimum SAST rules | CI gate on high severity |

| Runtime protections | Secure headers, TLS, logging | App templates + platform defaults |

The goal is not “perfect security.” The goal is consistent minimum safety and fast remediation.

5) Standardize observability and incident response (because production is the product)

Teams often delay observability standardization because it feels operational, not “product.” In reality, it is what prevents small failures from becoming expensive outages.

Standardize:

- Logging conventions (structured logs, correlation IDs)

- Metrics naming and cardinality rules

- Tracing defaults and sampling strategy

- Alert quality standards (alerts tied to user impact, not noise)

- A minimal runbook template (what breaks, how to roll back, who owns it)

This pairs naturally with reliability engineering practices like timeouts, retries, idempotency, and circuit breakers. See: Backend Development Best Practices for Reliability.

Also, do not forget the human loop: a consistent incident process (roles, comms, postmortems, follow-ups) is a form of standardization that preserves trust.

6) Standardize API and data contracts before you standardize architecture

Many organizations try to standardize architecture too early (“we are microservices now” or “everything is serverless”). That often increases variability, because different teams implement the same concepts in different ways.

A better early standard is contracts:

- API conventions (versioning, pagination, error shapes, idempotency keys)

- Authentication and authorization patterns

- Schema ownership rules

- Migration practices (forward-only, expand and contract, backfills)

- Integration testing expectations (contract tests for key dependencies)

If you use GraphQL, contract thinking is even more central because schema design and authorization are part of your core safety model. Wolf-Tech’s deep dive: GraphQL APIs: Benefits, Pitfalls, and Use Cases.

Why this comes early: contracts reduce coupling between teams, and coupling is what makes scaling slow.

7) Standardize architecture decisions through guardrails and ADRs (not one “blessed architecture”)

Architecture should be constrained by your NFRs and by your delivery and operational realities. This is why “standardize architecture” is usually the wrong phrasing.

Instead, standardize:

- A small set of “default shapes” (for example, modular monolith by default, with clear criteria for splitting)

- Architecture review triggers (new critical system, major data migration, compliance boundary)

- ADR format and location

- Evidence required for major shifts (thin vertical slice, load test, cost model)

If you want a concrete checklist of what an expert looks at, point teams here: What a Tech Expert Reviews in Your Architecture.

8) Standardize documentation and onboarding last (but make it real)

Documentation is where many standards go to die. The fix is to standardize docs that are operationally necessary and keep them close to the code.

A minimal, high-signal standard set:

READMEthat gets a developer to a working local environment- “How to deploy” and “How to roll back” notes

- Runbook links next to alert definitions

- A short “service facts” section (owner, dependencies, data stores, on-call)

This is also where legacy modernization benefits: teams can gradually document fracture planes and seams as they refactor. Relevant resource: Taming Legacy Code: Strategies That Actually Work.

A simple “standardize first” scorecard

If you are deciding what to standardize first in a specific environment, use a quick scorecard. Prioritize items that are high-risk and high-coordination.

| Candidate standard | Cross-team impact | Production risk reduction | Time to roll out | Standardize now? |

|---|---|---|---|---|

| Definition of Done | High | Medium | Low | Yes |

| CI test gates | High | High | Medium | Yes |

| Observability baseline | Medium | High | Medium | Yes |

| “One frontend framework” | Medium | Low | High | Usually no |

| “Microservices everywhere” | High | Unclear | High | No |

| API error conventions | High | Medium | Low | Yes |

This keeps you honest about what is actually driving cost and risk.

30 to 60 to 90 days: an adoption plan that avoids churn

Standardization fails when it is a big bang rewrite. It succeeds when it is incremental, measurable, and enforced through automation.

| Timeline | Focus | Deliverables | How you know it works |

|---|---|---|---|

| Days 1 to 30 | Outcomes, NFRs, workflow | NFR one-pager, Definition of Done, ADR template | Fewer “what does done mean?” debates |

| Days 31 to 60 | CI/CD baseline | Required CI checks, artifact build, preview env default | Shorter lead time, fewer broken builds |

| Days 61 to 90 | Security + observability | Security baseline checklist, logging/metrics/tracing defaults, runbooks | Faster MTTR, fewer sev-1 surprises |

After day 90, consider platform work, deeper legacy modernization, and architecture evolution based on evidence.

Common mistakes to avoid

Standardizing tools before behaviors. If the team’s Definition of Done and release habits are inconsistent, switching tools just changes the UI of the problem.

Enforcing standards without providing paved roads. If you require tracing but do not provide libraries, examples, or templates, teams will cargo-cult it.

One-size-fits-all for risk. A marketing site and a payments system should not have identical rigor, but they should share a baseline.

No owner, no lifecycle. Standards need a maintainer, a review cadence, and a deprecation path.

Frequently Asked Questions

What should we standardize first in software development technology? Start with measurable NFRs and delivery safety: Definition of Done, CI/CD quality gates, security baseline, and observability. These reduce risk and coordination cost fastest.

Should we standardize the tech stack across all teams? Not immediately. Standardize capabilities (delivery, security, observability, contracts) first. Then consolidate stacks where it clearly reduces cost or hiring friction.

How do we enforce standards without slowing teams down? Automate enforcement in CI/CD (required checks, templates, policy as code) and provide paved-road defaults. Avoid manual review boards as the primary gate.

When is it worth standardizing architecture? When you have clear evidence that variability is causing production risk, delivery drag, or high integration costs. Use guardrails and ADRs rather than mandating one architecture.

How does standardization help with legacy systems? It creates safe seams: consistent CI checks, contract tests, observability, and release patterns make incremental refactoring and modernization less risky.

Need a pragmatic standardization plan for your team?

If you are trying to scale delivery, improve reliability, or modernize legacy code without disrupting the business, Wolf-Tech can help you define the right standards and implement them incrementally.

Explore how Wolf-Tech approaches delivery and quality defaults in Custom Software Services: What You Should Get by Default, or get in touch via Wolf-Tech to discuss a focused architecture and delivery assessment.